
An Open-Ended Embodied Agent with Large Language Models

MineDojo/Voyager

Voyager: An Open-Ended Embodied Agent with Large Language Models

[Website] [Arxiv] [PDF] [Tweet]

We introduce Voyager, the first LLM-powered embodied lifelong learning agent in Minecraft that continuously explores the world, acquires diverse skills, and makes novel discoveries without human intervention. Voyager consists of three key components: 1) an automatic curriculum that maximizes exploration, 2) an ever-growing skill library of executable code for storing and retrieving complex behaviors, and 3) a new iterative prompting mechanism that incorporates environment feedback, execution errors, and self-verification for program improvement. Voyager interacts with GPT-4 via blackbox queries, which bypasses the need for model parameter fine-tuning. The skills developed by Voyager are temporally extended, interpretable, and compositional, which compounds the agent’s abilities rapidly and alleviates catastrophic forgetting. Empirically, Voyager shows strong in-context lifelong learning capability and exhibits exceptional proficiency in playing Minecraft. It obtains 3.3× more unique items, travels 2.3× longer distances, and unlocks key tech tree milestones up to 15.3× faster than prior SOTA. Voyager is able to utilize the learned skill library in a new Minecraft world to solve novel tasks from scratch, while other techniques struggle to generalize.

In this repo, we provide the Voyager code. This codebase is released under the MIT License.

Installation

Voyager requires Python ≥ 3.9 and Node.js ≥ 16.13.0. We have tested on Ubuntu 20.04, Windows 11, and macOS. You need to follow the instructions below to install Voyager.
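Before installing, it can help to confirm that the local toolchain meets the minimum versions stated above. The following is a minimal sketch, not part of the Voyager codebase: the helper names `parse_version` and `meets_minimum` are hypothetical, and the Node.js version string is assumed to have the form printed by `node --version` (for example `v16.13.0`).

```python
import re
import sys

# Minimum versions quoted in the installation notes above.
MIN_PYTHON = (3, 9)
MIN_NODE = (16, 13, 0)


def parse_version(text):
    """Extract the first dotted numeric version from a string such as 'v16.13.0'."""
    match = re.search(r"\d+(?:\.\d+)*", text)
    if match is None:
        raise ValueError(f"no version number found in {text!r}")
    return tuple(int(part) for part in match.group(0).split("."))


def meets_minimum(version, minimum):
    """Compare two version tuples, padding the shorter one with zeros."""
    width = max(len(version), len(minimum))

    def pad(value):
        return tuple(value) + (0,) * (width - len(value))

    return pad(version) >= pad(minimum)


if __name__ == "__main__":
    print("Python OK:", meets_minimum(sys.version_info[:3], MIN_PYTHON))
    # Hypothetical input: replace with the actual output of `node --version`.
    print("Node.js OK:", meets_minimum(parse_version("v16.13.0"), MIN_NODE))
```

Versions are compared as integer tuples rather than strings, so that `16.9.0` correctly sorts below `16.13.0`.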

Python Install

Node.js Install

In addition to the Python dependencies, you need to install the following Node.js packages:

Minecraft Instance Install

Voyager depends on the Minecraft game. You need to install Minecraft and set up a Minecraft instance.

Follow the instructions in Minecraft Login Tutorial to set up your Minecraft Instance.

Fabric Mods Install

You need to install Fabric mods to support all the features in Voyager. Remember to use the correct Fabric version for all the mods.

Follow the instructions in Fabric Mods Install to install the mods.

Getting Started

Voyager uses OpenAI's GPT-4 as the language model. You need to have an OpenAI API key to use Voyager. You can get one from here.

After the installation process, you can run Voyager by:

- If you are running with Azure Login for the first time, it will ask you to follow the command line instruction to generate a config file.

- Select Singleplayer and press Create New World.

- Set Game Mode to Creative and Difficulty to Peaceful.

- After the world is created, press the Esc key and then press Open to LAN.

- Select Allow cheats: ON and press Start LAN World. You will see the bot join the world soon.

Resume from a checkpoint during learning

If you stop the learning process and want to resume from a checkpoint later, you can instantiate Voyager by:

Run Voyager for a specific task with a learned skill library

If you want to run Voyager for a specific task with a learned skill library, you should first pass the skill library directory to Voyager:

Then, you can run task decomposition. Notice: Occasionally, the task decomposition may not be logical. If you notice the printed sub-goals are flawed, you can rerun the decomposition.

Finally, you can run the sub-goals with the learned skill library:

For all valid skill libraries, see Learned Skill Libraries.

If you have any questions, please check our FAQ first before opening an issue.

Paper and Citation

If you find our work useful, please consider citing us!

Disclaimer: This project is strictly for research purposes, and not an official product from NVIDIA.


Behold Nvidia's Giant New Voyager Building

The graphics and AI company's 750,000-square-foot building is designed to give employees a good place to work. CNET got the exclusive photographic tour.

Nvidia Voyager Building's Base Camp

Nvidia's Voyager building is designed to be a place where employees are eager to show up for work. Immediately after entering the 750,000-square-foot building at the graphics and AI chipmaker's Santa Clara, California, campus, you see its "base camp," a reception area. It's at the foot of the darker "mountain" that climbs upward behind it.

Approaching Nvidia Voyager Building

The walkway leading from Nvidia's older Endeavor building to the newer Voyager is lined with trees and shaded by solar panels on aerial structures called the "trellis."

Nvidia Voyager Building Front Facade

The towering glass front of Nvidia's Voyager building reflects the "trellis" outdoors that provides shade to the front of the building.

Nvidia Voyager Building's Mountain

The central part of Voyager is the "mountain," where employees can meet, work and gaze at the view. A stairway leads up the mountain's front face, and "valleys" to either side separate it from more conventional offices.

Nvidia Voyager's Green Walls

In Nvidia's Voyager building, walls covered with native plants give the mountain a more organic look, freshen the air and absorb sound.

Nvidia Endeavor and Voyager Buildings From Above

Nvidia's Santa Clara headquarters includes its 500,000-square-foot Endeavor building, left, and newer 750,000-square-foot Voyager to the right. A private walkway connects Endeavor to other Nvidia buildings out of view to the right.

Nvidia Voyager Building

From the top of the Nvidia Voyager building's mountain, you can see the stairway, the "base camp" reception area and the building's glass front.

Nvidia Voyager Building Roof

Far overhead in Nvidia's Voyager building is a roof pierced with many triangular skylights. The geometrical patterns are a nod to the wireframes at the heart of Nvidia's computer graphics business, but the effect is used sparingly compared with the overwhelmingly polygonal styling of Nvidia's earlier Endeavor building next door.

Nvidia Voyager Valleys

"Valleys" divide the mountain, right, from more conventional offices while allowing natural light to penetrate to the ground floor. Booths and tables are open for employees to meet or eat lunch.

Nvidia Voyager Building's Volcanic Plug

Atop the Voyager building's mountain is a multifaceted black structure reminiscent of a basalt from an extinct volcano. Nvidia had to reshape it several times to get the facets to show properly.

Nvidia Voyager Building, Back of the Mountain

The back of Voyager features an amphitheater where employees can watch events like company meetings.

Nvidia Voyager Building's Caldera

A long live-edge table is at the center of a cozy recessed area called the caldera near the top of the mountain in Nvidia's Voyager building. In real-world geophysics, a caldera is a sunken crater left after an eruption empties a volcano's magma chamber.

This view looks upward from the stage area of the amphitheater up the back of the "mountain" in Nvidia's Voyager building.

Under the Mountain in Nvidia Voyager

This almost subterranean channel tunnels through the mountain like a lava tube.

Nvidia Voyager Building Verdure

Creeping plants are trained to grow up wires to provide a green backdrop for events held on the back of the mountain area of Nvidia's Voyager building.

Nvidia Voyager Building Valley

Nvidia's Voyager building uses different colors to distinguish the dark mountain from the lighter conventional offices on the other side of the "valley."

Nvidia Voyager Building Tunnels

Unusual lighting gives otherwise ordinary corridors a fresh look deep beneath the mountain at the center of Nvidia's Voyager building.

Nvidia Voyager Building Bird Nest

Outside Nvidia's Voyager building are elevated "bird nests" where people can work and meet.

Nvidia Voyager Building's Trellis

Outside Nvidia's Voyager building is the "trellis," a canopy covered with solar panels. They're packed more thickly to the right to shade the front glass facade of the building. The panels turned out to be more susceptible to winds than expected, requiring stronger supports.

Nvidia Voyager Building Gardens

Four acres of garden separate Nvidia's Voyager building, right, from the earlier Endeavor to the left.

Voyager's "bird nests" are equipped with tables, benches and Wi-Fi.

A stairway leads up the front face of the "mountain" at the center of Nvidia's Voyager building in Santa Clara, California.


Voyager: An Open-Ended Embodied Agent with Large Language Models

We introduce Voyager, the first LLM-powered embodied lifelong learning agent in Minecraft that continuously explores the world, acquires diverse skills, and makes novel discoveries without human intervention. Voyager consists of three key components: 1) an automatic curriculum that maximizes exploration, 2) an ever-growing skill library of executable code for storing and retrieving complex behaviors, and 3) a new iterative prompting mechanism that incorporates environment feedback, execution errors, and self-verification for program improvement. Voyager interacts with GPT-4 via blackbox queries, which bypasses the need for model parameter fine-tuning. The skills developed by Voyager are temporally extended, interpretable, and compositional, which compounds the agent's abilities rapidly and alleviates catastrophic forgetting. Empirically, Voyager shows strong in-context lifelong learning capability and exhibits exceptional proficiency in playing Minecraft. It obtains 3.3x more unique items, travels 2.3x longer distances, and unlocks key tech tree milestones up to 15.3x faster than prior SOTA. Voyager is able to utilize the learned skill library in a new Minecraft world to solve novel tasks from scratch, while other techniques struggle to generalize.

Introduction

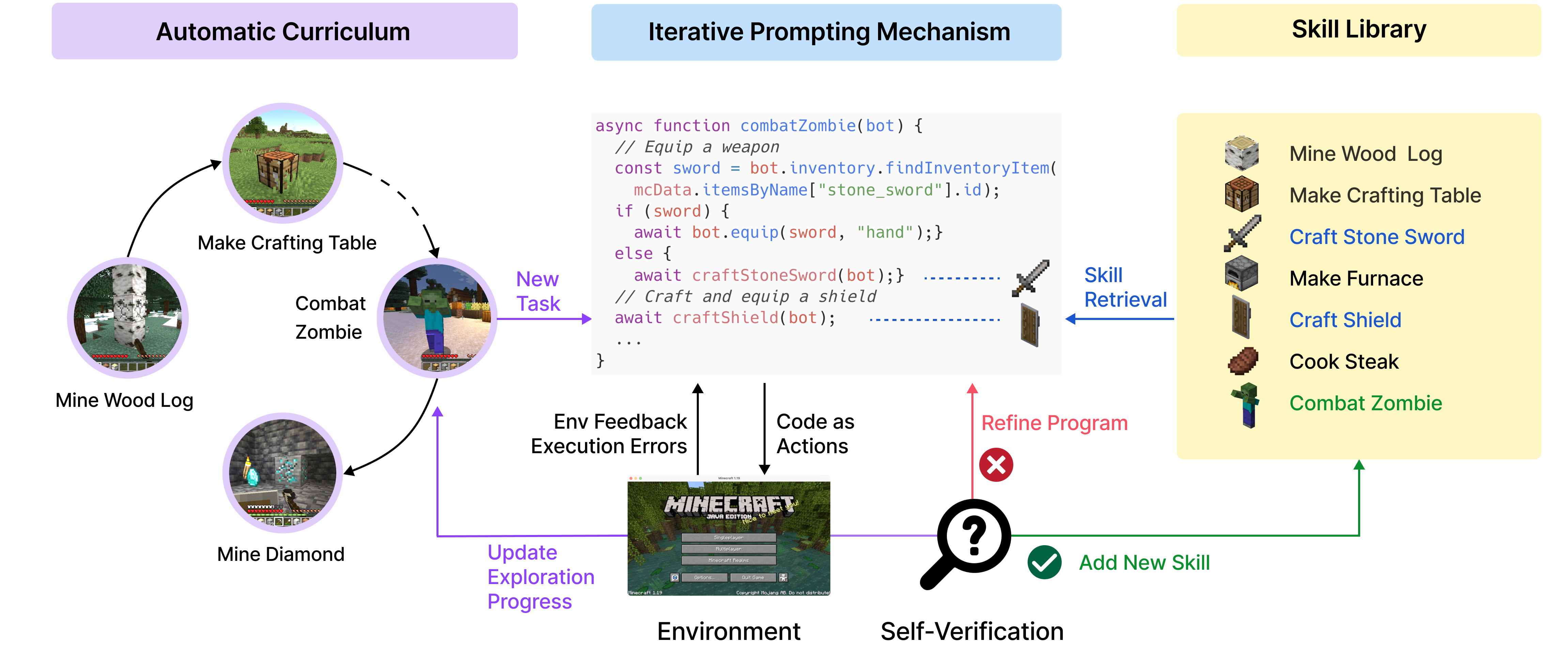

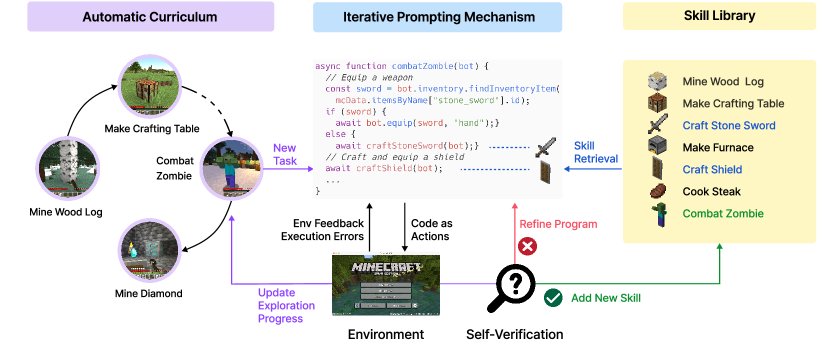

Voyager Components

Automatic Curriculum

Skill Library

Iterative Prompting Mechanism

Experiments

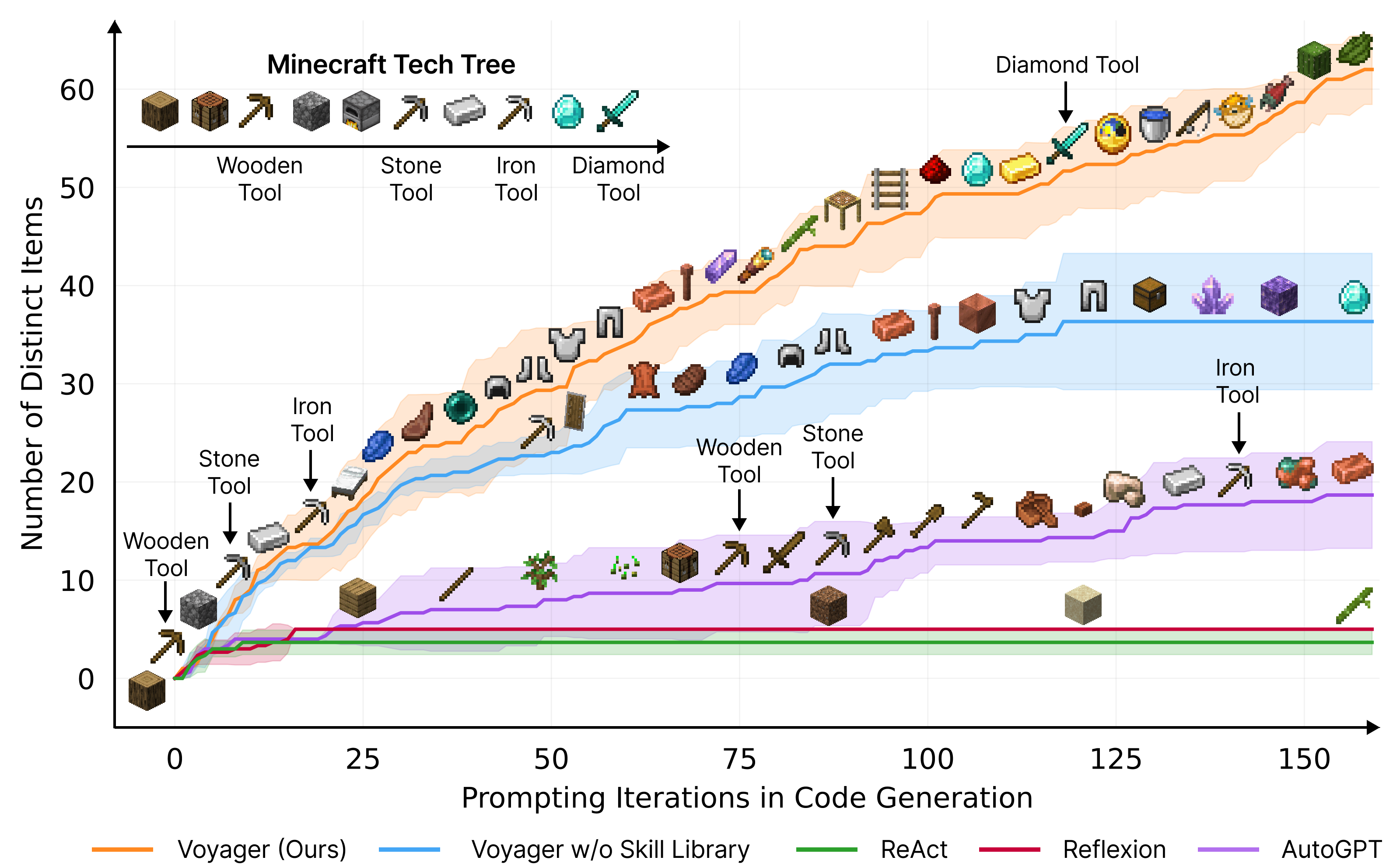

We systematically evaluate Voyager and baselines on their exploration performance, tech tree mastery, map coverage, and zero-shot generalization capability to novel tasks in a new world.

Significantly Better Exploration

Tech Tree Mastery

Extensive Map Traversal

Efficient Zero-Shot Generalization to Unseen Tasks

Ablation Studies

In this work, we introduce Voyager, the first LLM-powered embodied lifelong learning agent, which leverages GPT-4 to explore the world continuously, develop increasingly sophisticated skills, and make new discoveries consistently without human intervention. Voyager exhibits superior performance in discovering novel items, unlocking the Minecraft tech tree, traversing diverse terrains, and applying its learned skill library to unseen tasks in a newly instantiated world. Voyager serves as a starting point to develop powerful generalist agents without tuning the model parameters.

Media Coverage

"They Plugged GPT-4 Into Minecraft—and Unearthed New Potential for AI. The bot plays the video game by tapping the text generator to pick up new skills, suggesting that the tech behind ChatGPT could automate many workplace tasks." - Will Knight, WIRED "The Voyager project shows, however, that by pairing GPT-4’s abilities with agent software that stores sequences that work and remembers what does not, developers can achieve stunning results." - John Koetsier, Forbes "Voyager, the GTP-4 bot that plays Minecraft autonomously and better than anyone else" - Ruetir "This AI used GPT-4 to become an expert Minecraft player" - Devin Coldewey, TechCrunch Coverage Index: [Atmarkit] [Career Engine] [Crast.net] [Daily Top Feeds] [Entrepreneur en Espanol] [Finance Jxyuging] [Forbes] [Forbes Argentina] [Gaming Deputy] [Gearrice] [Haberik] [Head Topics] [InfoQ] [ITmedia News] [Mark Tech Post] [Medium] [MSN] [Note] [Noticias de Hoy] [Ruetir] [Stock HK] [Tech Tribune France] [TechCrunch] [TechBeezer] [Toutiao] [US Times Post] [VN Explorer] [WIRED] [Zaker]

Nvidia Shares First Look Inside Massive New 'Voyager' Building

Voyager joins Endeavor to form Nvidia's Santa Clara HQ.

Nvidia recently opened up its 750,000 sq ft Voyager building. Consumer electronics news site CNet enjoyed access to the "colossal new building" which forms a major part of Nvidia's Santa Clara HQ.

With the completion of Voyager, joining the similarly impressive Endeavor, the office, meetings, and conference space have effectively been doubled. You may have twigged - yes, these two massive structures are named after Star Trek starships.

There was talk of Voyager and Endeavor being joined by a footbridge, wittily named the SLI Bridge, but that isn't mentioned in CNet's description. Between the two massifs, we see a four-acre garden area with a trellis structure above, dotted with solar panels. There are gaps in the trellis to provide light and shade for a plentiful array of benches, tables, and other social spaces beneath. In some images, you will see raised circular meeting 'nests' too.

Venturing into Voyager, a visitor can see the 'base camp' reception area. This area sits at the foot of a 'mountain' structure which has several tiers, interspersed with gardens, seating areas, cafes, offices, and so on. The mountain doesn't quite reach the pinnacle of the roof giving an impression of a big and airy open space – though you are indoors. The roof is interspersed with triangular natural light cutouts, which will be appreciated by the vegetation and humans alike.

The move away from boxy cubicle structures and work life permeates the whole building. Apparently, Nvidia CEO Jensen Huang wanted every employee working in Voyager to have a view, and work among "living walls, natural light, and towering windows." There are abundant outside and shared gathering spaces for working out of any allocated personal office space too.

There is an interesting reason for the impressive new Voyager building existing, other than being an HQ to impress in the manner and scale of rivals like Google, Oracle, and Apple. The space should entice employees who have become ingrained in WFH back to the office some more, and help Nvidia gain new talent - and keep them on board. However, the CNet report didn't mention one of the biggest draws of a welcoming workplace – the quality of the cafeteria.

For more pictures of Voyager, you can check out the source article. Nvidia has a gallery of renders and photos of both Voyager and Endeavor, too.


Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech; from business and semiconductor design to products approaching the edge of reason.


- giorgiog: Great, another "open" workspace - when will executives 'get' that engineers need a quiet, distraction free place (i.e. private offices) to work on hard problems for extended periods of time? It's like none of these people understand what 'flow' is.


Linxi "Jim" Fan

Senior Research Scientist, Lead of AI Agents

I am a Senior Research Scientist at NVIDIA and Lead of AI Agents Initiative. My mission is to build generally capable agents across physical worlds (robotics) and virtual worlds (games, simulation). I share insights about AI research & industry extensively on Twitter/X and LinkedIn. Welcome to follow me!

My research explores the bleeding edge of multimodal foundation models, reinforcement learning, computer vision, and large-scale systems. I obtained my Ph.D. degree at Stanford Vision Lab, advised by Prof. Fei-Fei Li. Previously, I interned at OpenAI (w/ Ilya Sutskever and Andrej Karpathy), Baidu AI Labs (w/ Andrew Ng and Dario Amodei), and MILA (w/ Yoshua Bengio). I graduated as the Valedictorian of Class 2016 and received the Illig Medal at Columbia University.

I spearheaded Voyager (the first AI agent that plays Minecraft proficiently and bootstraps its capabilities continuously), MineDojo (open-ended agent learning by watching 100,000s of Minecraft YouTube videos), Eureka (a 5-finger robot hand doing extremely dexterous tasks like pen spinning), and VIMA (one of the earliest multimodal foundation models for robot manipulation). MineDojo won the Outstanding Paper Award at NeurIPS 2022. My works have been widely featured in news media, such as New York Times, Forbes, MIT Technology Review, TechCrunch, The WIRED, VentureBeat, etc.

Fun fact: I was OpenAI’s very first intern in 2016. During that summer, I worked on World of Bits, an agent that perceives the web browser in pixels and outputs keyboard/mouse control. It was way before LLM became a thing at OpenAI. Good old times!

Research Highlights

Media Coverage

Publications

Visit my Google Scholar page for a comprehensive listing!

- Conducting bleeding edge research on foundation models for general-purpose autonomous agents.

- Leading the MineDojo effort for open-ended agent learning in Minecraft.

- Mentoring interns on diverse research topics.

- Collaborating with universities: Stanford, Berkeley, Caltech, MIT, UW, etc.

- Proposed SECANT, a state-of-the-art policy learning algorithm for zero-shot generalization of visual agents to novel environments.

- Paper published at ICML 2021.

- Created SURREAL, an open-source, full-stack, and high-performance distributed reinforcement learning (RL) framework for large-scale robot learning.

- Paper published at CoRL 2018. Best Presentation Award finalist.

- Doctoral advisor: Prof. Fei-Fei Li.

- Ph.D. Thesis “Training and Deploying Visual Agents at Scale”.

- Co-designed World of Bits, an open-domain platform for teaching AI to use the web browser. World of Bits was part of the OpenAI Universe initiative.

- Paper published at ICML 2017.

- Systematically analyzed and proposed novel variants of the Ladder Network, a strong semi-supervised deep learning technique.

- Mentored by Turing Award Laureate Yoshua Bengio.

- Paper published at ICML 2016.

- Co-developed DeepSpeech 2, a large-scale end-to-end system that achieved world-class performance on English and Chinese speech recognition.

- Mentored by Dario Amodei, Adam Coates, and Andrew Ng.

- DeepSpeech and derivative works have been featured in various media: MIT Technology Review, TechCrunch, Forbes, NPR, VentureBeat, etc.

- Columbia NLP Group, advised by Prof. Michael Collins. Studied kernel methods for speech recognition. Paper published in Journal of Machine Learning Research.

- Columbia Vision Lab , advised by Prof. Shree Nayar. Implemented a computer vision system in Matlab to infer astrophysics parameters from galactic images.

- Columbia CRIS Lab, advised by Prof. Venkat Venkatasubramanian. Developed ML and NLP techniques to automate ontology curation for pharmaceutical engineering. Paper published in Computers & Chemical Engineering.


- Welcome to DM me!

NVIDIA Voyager

- Civil design and survey for entitlement of NVIDIA’s newest addition to the 36-acre campus redevelopment project.

- Provided engineering and surveying to support construction of the first two project phases: Endeavor & Voyager.

- LEED Gold certified.

- Project awarded 2022 SVBJ Structures honoree for Interiors Project.

NVIDIA's newest addition to their headquarters, deemed Voyager, shares its wild, distinctly NVIDIA design inspired by the Pacific Northwest’s lush, mountainous terrain. The 750,000 sq ft building is designed to give employees an enjoyable and collaborative place to work. During the development of plans and during construction, Kier + Wright worked closely with NVIDIA, the general contractor, and the City of Santa Clara to ensure that public infrastructure was maintained while existing utilities were relocated, and new utilities were constructed.

During the first phase of this corporate campus, Endeavor, K+W minimized the amount of rework that was done to coordinate and plan for the second phase, Voyager. The biggest challenge faced was modifications to the Endeavor project, specifically the locations of the biotreatment ponds that were relocated to the courtyard located between these two projects. K+W relocated four biotreatment ponds that were engineered for Endeavor, and seamlessly integrated them into the courtyard between these two campuses for an optimal approach that would work for both Endeavor and Voyager. These modifications were accomplished by having high levels of communication and coordination with NVIDIA, the City of Santa Clara, and the rest of the project team.

The integration of biotreatment ponds, zero harvesting or infiltration throughout the project helped contribute to Voyager's LEED Gold certification. K+W also designed and implemented a retaining wall around the site to help minimize the project’s earthwork. This helped save the client money and raised the site to reduce the exported dirt. Expansion was designed with the vision and goal for future expansion while creating a cohesive campus that encourages collaboration inside and outside as well as employee health and wellness through sustainable features.


NVIDIA Voyager Santa Clara, CA

- Corporate Campus

- Civil Engineering

- Site Development

- Utility Design

- Sustainability

- Street + Roadway

- Construction Administration

- Land Surveying

- Topographic Survey

- Construction Staking

- ALTA Land Title Survey

Silicon Valley Business Journal | Nvidia Voyager is the 2022 Structures honoree for Interiors Project

Tom’s Hardware | Nvidia Shares First Look Inside Massive New ‘Voyager’ Building

Venture Beat | Nvidia became a $1 trillion company thanks to AI. Look inside its lavish ‘Star Trek’-inspired HQ

Yahoo/Dornob | Nvidia’s Voyager HQ Seduces WFH Employees Back with a Dazzling Design


Nvidia – Voyager

Voyager is Nvidia’s latest 750,000 square foot addition to the 500,000 square foot Endeavor building, bringing the total headquarters to 1.25 million square feet. Possessing the same cosmic, distinct design as the first building, Voyager is linked to Endeavor by a treelined walkway shaded by a trellis of photovoltaic panels.

The 4-story, 68′ tall Voyager structure has a sloped glass curtain wall perimeter to allow abundant natural light, expansive views, and live plants to build a strong connection to nature, blurring the boundary between inside and outside. Enclos’ 170,000 square foot scope of work includes the glazed facets comprised of 14′ by 4′ glass cassette panels attached to horizontal secondary steel supports between the primary steel columns. The double-paned insulated glass units mitigate heat gain, glare, and shadow while permitting sufficient daylight to the interior for occupant comfort. At the main entrance, 7′ wide by 13′ tall vertical cassette units attach to architecturally exposed structural steel horizontal supports between primary columns. The main entrance wall also incorporates entry doors.

Enclos was responsible for the design-assist, testing and mock-up requirements, procurement, and construction of the façade.

The building is designed for LEED Gold certification. Natural light is the primary lighting source during the day, supporting the 40% energy-saving goals.


Voyager: An Open-Ended Embodied Agent with Large Language Models

We introduce Voyager, the first LLM-powered embodied lifelong learning agent in Minecraft that continuously explores the world, acquires diverse skills, and makes novel discoveries without human intervention. Voyager consists of three key components: 1) an automatic curriculum that maximizes exploration, 2) an ever-growing skill library of executable code for storing and retrieving complex behaviors, and 3) a new iterative prompting mechanism that incorporates environment feedback, execution errors, and self-verification for program improvement. Voyager interacts with GPT-4 via blackbox queries, which bypasses the need for model parameter fine-tuning. The skills developed by Voyager are temporally extended, interpretable, and compositional, which compounds the agent’s abilities rapidly and alleviates catastrophic forgetting. Empirically, Voyager shows strong in-context lifelong learning capability and exhibits exceptional proficiency in playing Minecraft. It obtains 3.3× more unique items, travels 2.3× longer distances, and unlocks key tech tree milestones up to 15.3× faster than prior SOTA. Voyager is able to utilize the learned skill library in a new Minecraft world to solve novel tasks from scratch, while other techniques struggle to generalize.

1 Introduction

Building generally capable embodied agents that continuously explore, plan, and develop new skills in open-ended worlds is a grand challenge for the AI community [1, 2, 3, 4, 5]. Classical approaches employ reinforcement learning (RL) [6, 7] and imitation learning [8, 9, 10] that operate on primitive actions, which could be challenging for systematic exploration [11, 12, 13, 14, 15], interpretability [16, 17, 18], and generalization [19, 20, 21]. Recent advances in large language model (LLM) based agents harness the world knowledge encapsulated in pre-trained LLMs to generate consistent action plans or executable policies [16, 22, 19]. They are applied to embodied tasks like games and robotics [23, 24, 25, 26, 27], as well as NLP tasks without embodiment [28, 29, 30]. However, these agents are not lifelong learners that can progressively acquire, update, accumulate, and transfer knowledge over extended time spans [31, 32].

Let us consider Minecraft as an example. Unlike most other games studied in AI [33, 34, 10], Minecraft does not impose a predefined end goal or a fixed storyline but rather provides a unique playground with endless possibilities [23]. Minecraft requires players to explore vast, procedurally generated 3D terrains and unlock a tech tree using gathered resources. Human players typically start by learning the basics, such as mining wood and cooking food, before advancing to more complex tasks like combating monsters and crafting diamond tools. We argue that an effective lifelong learning agent should have similar capabilities as human players: (1) propose suitable tasks based on its current skill level and world state, e.g., learn to harvest sand and cactus before iron if it finds itself in a desert rather than a forest; (2) refine skills based on environmental feedback and commit mastered skills to memory for future reuse in similar situations (e.g. fighting zombies is similar to fighting spiders); (3) continually explore the world and seek out new tasks in a self-driven manner.

Towards these goals, we introduce Voyager, the first LLM-powered embodied lifelong learning agent to drive exploration, master a wide range of skills, and make new discoveries continually without human intervention in Minecraft. Voyager is made possible through three key modules (Fig. 2): 1) an automatic curriculum that maximizes exploration; 2) a skill library for storing and retrieving complex behaviors; and 3) a new iterative prompting mechanism that generates executable code for embodied control. We opt to use code as the action space instead of low-level motor commands because programs can naturally represent temporally extended and compositional actions [16, 22], which are essential for many long-horizon tasks in Minecraft. Voyager interacts with a blackbox LLM (GPT-4 [35]) through prompting and in-context learning [36, 37, 38]. Our approach bypasses the need for model parameter access and explicit gradient-based training or finetuning.

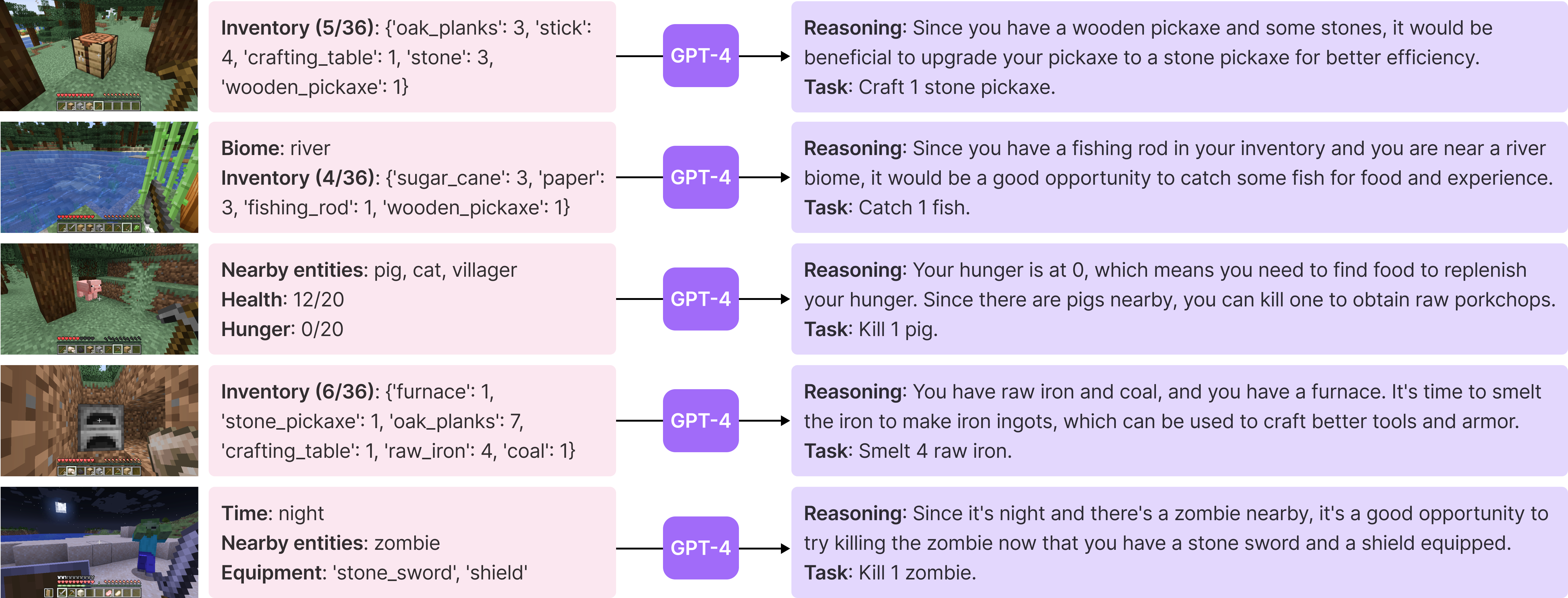

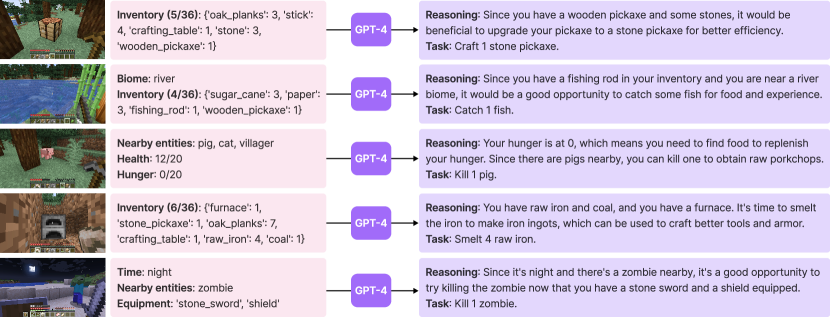

More specifically, Voyager attempts to solve progressively harder tasks proposed by the automatic curriculum, which takes into account the exploration progress and the agent’s state. The curriculum is generated by GPT-4 based on the overarching goal of “discovering as many diverse things as possible”. This approach can be perceived as an in-context form of novelty search [39, 40]. Voyager incrementally builds a skill library by storing the action programs that help solve a task successfully. Each program is indexed by the embedding of its description, which can be retrieved in similar situations in the future. Complex skills can be synthesized by composing simpler programs, which compounds Voyager’s capabilities rapidly over time and alleviates catastrophic forgetting in other continual learning methods [31, 32].
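The indexing idea described above, where each program is stored under the embedding of its description and retrieved by similarity, can be illustrated with a small sketch. This is not the authors' implementation: the `SkillLibrary` class is hypothetical, the stored code strings are placeholders, and a bag-of-words vector stands in for a learned text embedding so that the example runs with the standard library only.

```python
import math
from collections import Counter


def embed(text):
    """Toy stand-in for a learned text embedding: lowercase bag-of-words counts."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class SkillLibrary:
    """Hypothetical store that maps description embeddings to skill programs."""

    def __init__(self):
        self._skills = []  # (name, description, code, embedding)

    def add(self, name, description, code):
        self._skills.append((name, description, code, embed(description)))

    def retrieve(self, query, top_k=1):
        """Return the names of the top_k skills most similar to the query."""
        query_vec = embed(query)
        ranked = sorted(
            self._skills, key=lambda s: cosine(query_vec, s[3]), reverse=True
        )
        return [name for name, _, _, _ in ranked[:top_k]]


library = SkillLibrary()
# Skill names come from the paper; descriptions and code bodies are placeholders.
library.add("craftStoneShovel",
            "craft a stone shovel using cobblestone and sticks",
            "/* placeholder program */")
library.add("combatZombieWithSword",
            "fight a zombie with a sword",
            "/* placeholder program */")

print(library.retrieve("fight a spider with a sword"))  # ['combatZombieWithSword']
```

A spider-fighting query retrieves the zombie-fighting skill because their descriptions overlap, which mirrors the paper's remark that fighting zombies is similar to fighting spiders.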

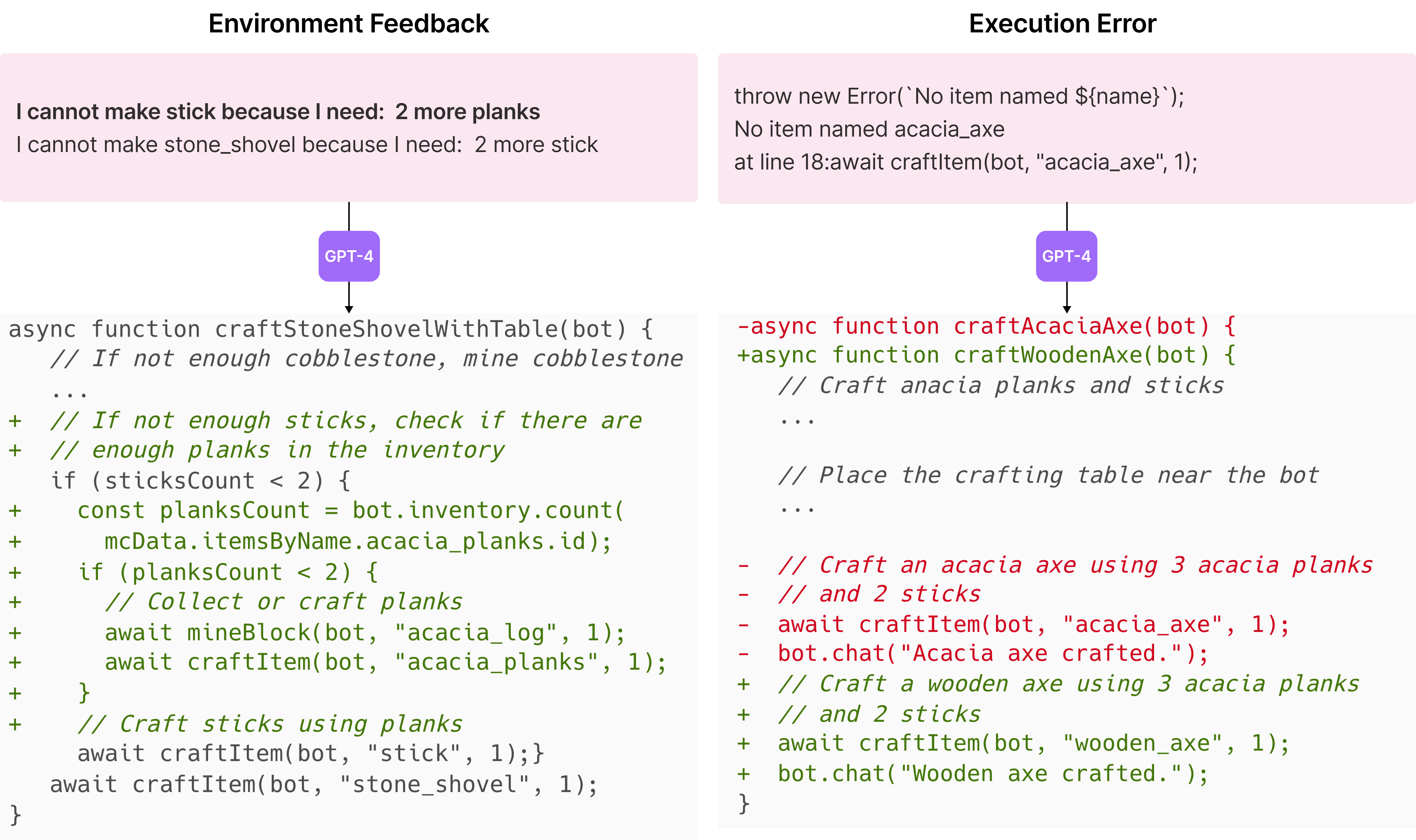

However, LLMs struggle to produce the correct action code consistently in one shot [41]. To address this challenge, we propose an iterative prompting mechanism that: (1) executes the generated program to obtain observations from the Minecraft simulation (such as inventory listing and nearby creatures) and error trace from the code interpreter (if any); (2) incorporates the feedback into GPT-4’s prompt for another round of code refinement; and (3) repeats the process until a self-verification module confirms the task completion, at which point we commit the program to the skill library (e.g., craftStoneShovel() and combatZombieWithSword()) and query the automatic curriculum for the next milestone (Fig. 2).

Empirically, Voyager demonstrates strong in-context lifelong learning capabilities. It can construct an ever-growing skill library of action programs that are reusable, interpretable, and generalizable to novel tasks. We evaluate Voyager systematically against other LLM-based agent techniques (e.g., ReAct [29], Reflexion [30], AutoGPT [28]) in MineDojo [23], an open-source Minecraft AI framework. Voyager outperforms prior SOTA by obtaining 3.3× more unique items, unlocking key tech tree milestones up to 15.3× faster, and traversing 2.3× longer distances. We further demonstrate that Voyager is able to utilize the learned skill library in a new Minecraft world to solve novel tasks from scratch, while other methods struggle to generalize.

2 Method

Voyager consists of three novel components: (1) an automatic curriculum (Sec. 2.1) that suggests objectives for open-ended exploration, (2) a skill library (Sec. 2.2) for developing increasingly complex behaviors, and (3) an iterative prompting mechanism (Sec. 2.3) that generates executable code for embodied control. Full prompts are presented in Appendix, Sec. A.
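The three-step loop described above can be sketched as a small control function. This is an illustrative outline under stated assumptions, not the paper's code: `generate`, `execute`, and `verify` are hypothetical callables standing in for the GPT-4 query, the Minecraft execution, and the self-verification module, and the `max_rounds` cap is an assumption added so the loop always terminates.

```python
from dataclasses import dataclass


@dataclass
class Feedback:
    """Environment observations plus any interpreter error from one execution."""
    observations: str = ""
    error: str = ""


def iterative_prompting(task, generate, execute, verify, max_rounds=4):
    """Refine a program until verify() passes; return (program, rounds_used).

    Returns (None, max_rounds) if no round passes verification.
    """
    feedback = Feedback()
    for round_index in range(1, max_rounds + 1):
        program = generate(task, feedback)  # feedback is folded into the next prompt
        feedback = execute(program)         # observations and error trace
        if verify(task, feedback):          # self-verification of task completion
            return program, round_index
    return None, max_rounds


# Stubs for demonstration only: a fake model that fixes its code after an error.
def fake_generate(task, feedback):
    return "program_v2" if feedback.error else "program_v1"


def fake_execute(program):
    if program == "program_v1":
        return Feedback(observations="inventory: {}",
                        error="ReferenceError: pickaxe is not defined")
    return Feedback(observations="inventory: {stone_shovel: 1}")


def fake_verify(task, feedback):
    return not feedback.error and "stone_shovel" in feedback.observations


program, rounds = iterative_prompting(
    "craft a stone shovel", fake_generate, fake_execute, fake_verify
)
print(program, rounds)  # program_v2 2
```

In a real agent, a program that passes verification would then be committed to the skill library and the curriculum would be queried for the next task.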

2.1 Automatic Curriculum

Embodied agents encounter a variety of objectives with different complexity levels in open-ended environments. An automatic curriculum offers numerous benefits for open-ended exploration, ensuring a challenging but manageable learning process, fostering curiosity-driven intrinsic motivation for agents to learn and explore, and encouraging the development of general and flexible problem-solving strategies [ 42 , 43 , 44 ] . Our automatic curriculum capitalizes on the internet-scale knowledge contained within GPT-4 by prompting it to provide a steady stream of new tasks or challenges. The curriculum unfolds in a bottom-up fashion, allowing for considerable adaptability and responsiveness to the exploration progress and the agent’s current state (Fig. 3 ). As Voyager progresses to harder self-driven goals, it naturally learns a variety of skills, such as “mining a diamond”.

The input prompt to GPT-4 consists of several components:

Directives encouraging diverse behaviors and imposing constraints , such as “ My ultimate goal is to discover as many diverse things as possible ... The next task should not be too hard since I may not have the necessary resources or have learned enough skills to complete it yet. ”;

The agent’s current state , including inventory, equipment, nearby blocks and entities, biome, time, health and hunger bars, and position;

Previously completed and failed tasks , reflecting the agent’s current exploration progress and capabilities frontier;

Additional context : We also leverage GPT-3.5 to self-ask questions based on the agent’s current state and exploration progress and self-answer questions. We opt to use GPT-3.5 instead of GPT-4 for standard NLP tasks due to budgetary considerations.
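As a rough illustration of how these four components might be concatenated into a single curriculum prompt, here is a minimal Python sketch. The `AgentState` container, the `build_curriculum_prompt` helper, and the section labels are hypothetical names chosen for this example; the actual prompts are the ones presented in Appendix, Sec. A.

```python
from dataclasses import dataclass

# Directive text quoted from the paper; the elision ("...") is kept as-is.
DIRECTIVES = (
    "My ultimate goal is to discover as many diverse things as possible ... "
    "The next task should not be too hard since I may not have the necessary "
    "resources or have learned enough skills to complete it yet."
)


@dataclass
class AgentState:
    """Hypothetical container for the state fields listed in the text."""
    inventory: dict
    equipment: list
    nearby_blocks: list
    nearby_entities: list
    biome: str
    time_of_day: str
    health: float
    hunger: float
    position: tuple


def build_curriculum_prompt(state, completed, failed, qa_pairs):
    """Join directives, agent state, task history, and Q&A context into one prompt."""
    lines = [
        DIRECTIVES,
        f"Inventory: {state.inventory}",
        f"Equipment: {state.equipment}",
        f"Nearby blocks: {', '.join(state.nearby_blocks) or 'None'}",
        f"Nearby entities: {', '.join(state.nearby_entities) or 'None'}",
        f"Biome: {state.biome}",
        f"Time: {state.time_of_day}",
        f"Health: {state.health}",
        f"Hunger: {state.hunger}",
        f"Position: {state.position}",
        f"Completed tasks: {', '.join(completed) or 'None'}",
        f"Failed tasks: {', '.join(failed) or 'None'}",
    ]
    # Additional context: self-asked questions with their self-generated answers.
    for question, answer in qa_pairs:
        lines.append(f"Question: {question}")
        lines.append(f"Answer: {answer}")
    return "\n".join(lines)
```

Keeping each component on its own labeled line makes it easy to inspect which part of the prompt changes between curriculum queries.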

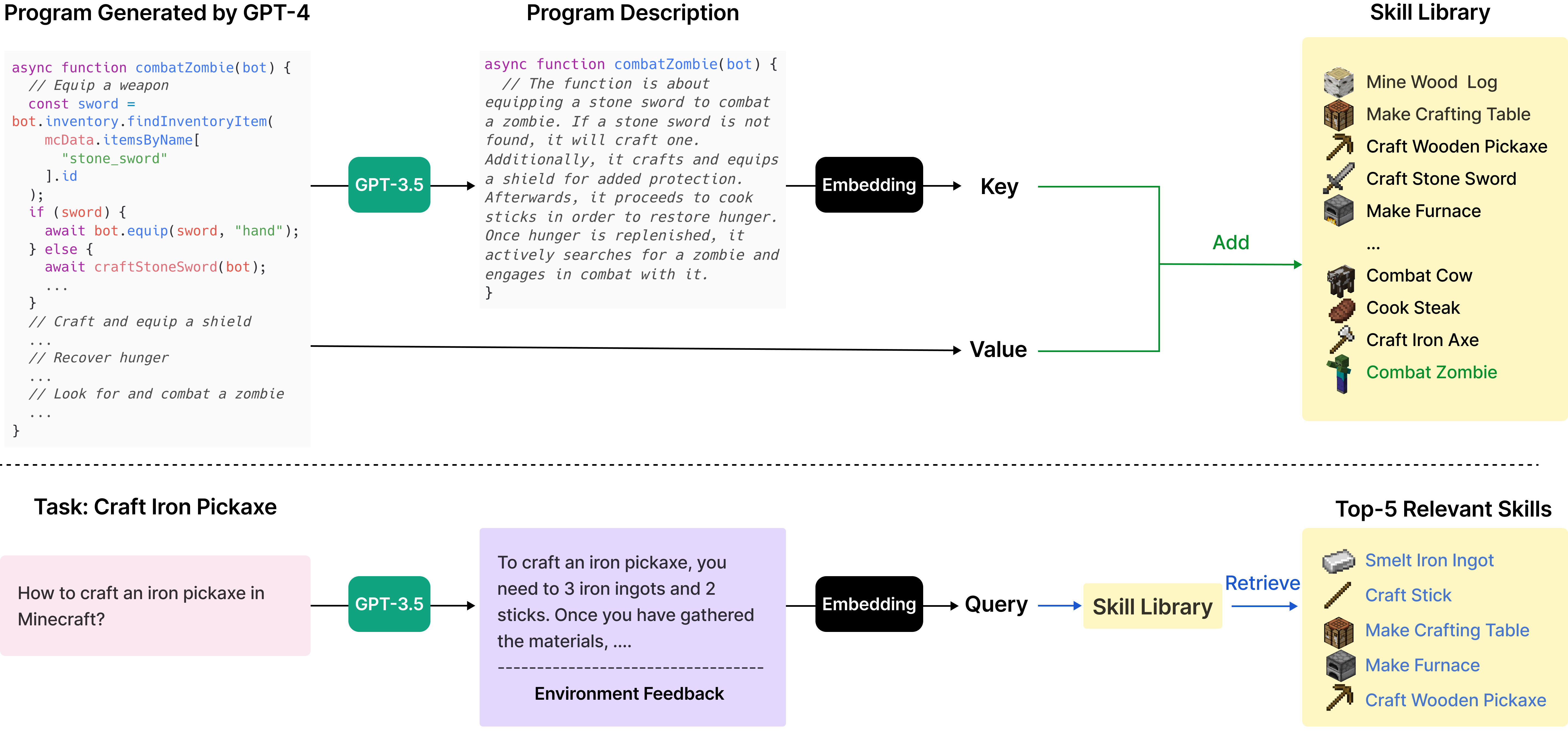

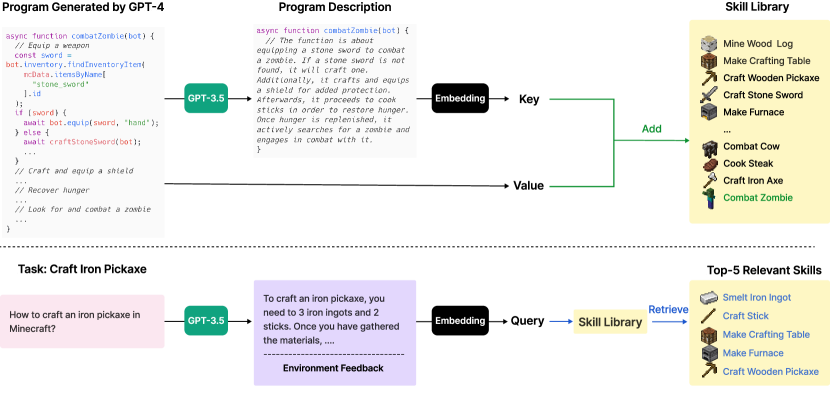

2.2 Skill Library

With the automatic curriculum consistently proposing increasingly complex tasks, it is essential to have a skill library that serves as a basis for learning and evolution. Inspired by the generality, interpretability, and universality of programs [ 45 ] , we represent each skill with executable code that scaffolds temporally extended actions for completing a specific task proposed by the automatic curriculum.

The input prompt to GPT-4 consists of the following components:

Guidelines for code generation , such as “ Your function will be reused for building more complex functions. Therefore, you should make it generic and reusable. ”;

Control primitive APIs, and relevant skills retrieved from the skill library, which are crucial for in-context learning [ 36 , 37 , 38 ] to work well;

The generated code from the last round, environment feedback, execution errors, and critique , based on which GPT-4 can self-improve (Sec. 2.3 );

Chain-of-thought prompting [ 46 ] to do reasoning before code generation.

We iteratively refine the program through a novel iterative prompting mechanism (Sec. 2.3 ), incorporate it into the skill library as a new skill, and index it by the embedding of its description (Fig. 4 , top). For skill retrieval, we query the skill library with the embedding of self-generated task plans and environment feedback (Fig. 4 , bottom). By continuously expanding and refining the skill library, Voyager can learn, adapt, and excel in a wide spectrum of tasks, consistently pushing the boundaries of its capabilities in the open world.
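The indexing and retrieval scheme described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `toy_embed` is a bag-of-words stand-in for a real text-embedding model, and the `SkillLibrary` class, its method names, and the default `k` are hypothetical rather than taken from the Voyager codebase.

```python
import math
import re
from collections import Counter


def toy_embed(text):
    """Stand-in for a real embedding model: a sparse bag-of-words vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as Counters."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class SkillLibrary:
    """Stores programs keyed by the embedding of their description."""

    def __init__(self, embed=toy_embed):
        self.embed = embed
        self.skills = []  # list of (name, code, description embedding)

    def add(self, name, description, code):
        """Index a verified program by the embedding of its description."""
        self.skills.append((name, code, self.embed(description)))

    def retrieve(self, query, k=3):
        """Return the names of the k skills most similar to the query text."""
        q = self.embed(query)
        ranked = sorted(self.skills, key=lambda s: cosine(q, s[2]), reverse=True)
        return [name for name, _, _ in ranked[:k]]


library = SkillLibrary()
library.add("craftStoneShovel", "Craft a stone shovel from cobblestone and sticks", "// code omitted")
library.add("combatZombieWithSword", "Equip a sword and fight a nearby zombie", "// code omitted")
print(library.retrieve("a zombie is nearby and I have a sword", k=1))
```

In this sketch the query text plays the role of the self-generated task plan and environment feedback; swapping `toy_embed` for a real embedding function would leave the rest of the class unchanged.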

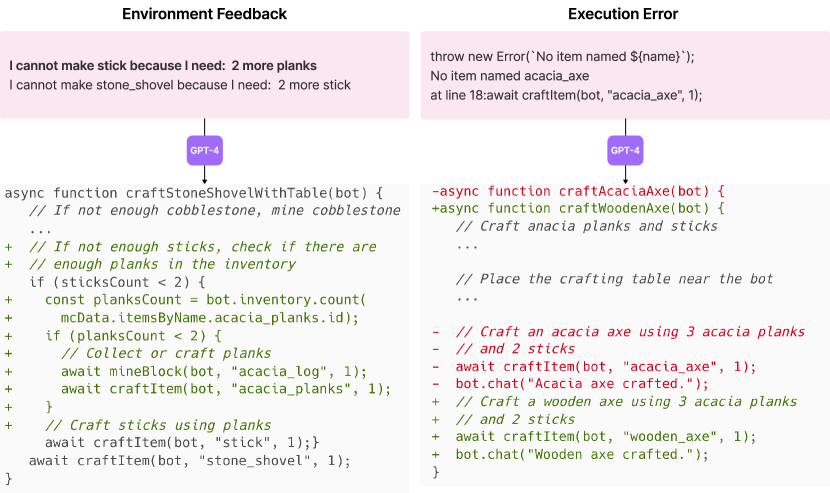

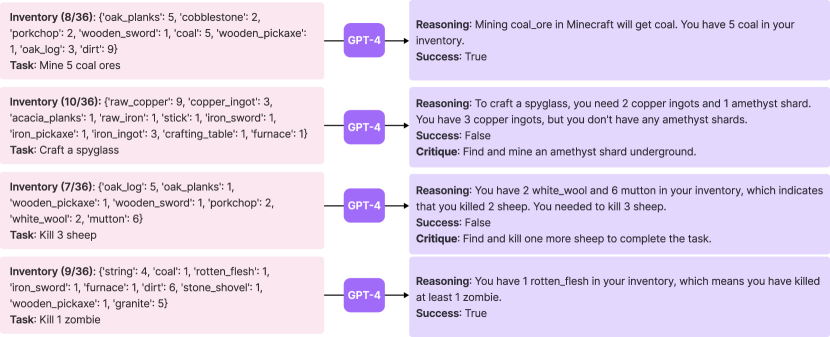

2.3 Iterative Prompting Mechanism

We introduce an iterative prompting mechanism for self-improvement through three types of feedback:

Environment feedback , which illustrates the intermediate progress of program execution (Fig. 5 , left). For example, “ I cannot make an iron chestplate because I need: 7 more iron ingots ” highlights the cause of failure in crafting an iron chestplate. We use bot.chat() inside control primitive APIs to generate environment feedback and prompt GPT-4 to use this function as well during code generation;

Execution errors from the program interpreter that reveal any invalid operations or syntax errors in programs, which are valuable for bug fixing (Fig. 5 , right);

Self-verification for checking task success. Instead of manually coding success checkers for each new task proposed by the automatic curriculum, we instantiate another GPT-4 agent for self-verification. By providing Voyager ’s current state and the task to GPT-4, we ask it to act as a critic [ 47 , 48 , 49 ] and inform us whether the program achieves the task. In addition, if the task fails, it provides a critique by suggesting how to complete the task (Fig. 6 ). Hence, our self-verification is more comprehensive than self-reflection [ 30 ] by both checking success and reflecting on mistakes.
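To make the critic's role concrete, the following Python sketch shows one way a self-verification query could be built and its reply parsed. The prompt wording, the JSON reply format with `success` and `critique` keys, and both function names are assumptions made for illustration; the prompts actually used are those in Appendix, Sec. A.

```python
import json


def build_critic_prompt(task, state_summary):
    """Assemble a hypothetical critic prompt from the task and the agent's state."""
    return (
        "You are a critic that checks whether a Minecraft task was completed.\n"
        f"Task: {task}\n"
        f"Agent state: {state_summary}\n"
        'Reply with JSON: {"success": true or false, "critique": "..."}'
    )


def parse_critic_reply(reply):
    """Return (success, critique); malformed replies are treated as failure."""
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        return False, "Critic reply was not valid JSON."
    if not isinstance(data, dict):
        return False, "Critic reply was not a JSON object."
    # Only an explicit JSON `true` counts as success.
    success = data.get("success") is True
    critique = str(data.get("critique", ""))
    return success, critique
```

Treating anything other than an explicit `true` as failure is a conservative choice: an unverified program is retried rather than committed to the skill library.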

During each round of code generation, we execute the generated program to obtain environment feedback and execution errors from the code interpreter, which are incorporated into GPT-4’s prompt for the next round of code refinement. This iterative process repeats until self-verification validates the task’s completion, at which point we add this new skill to the skill library and ask the automatic curriculum for a new objective (Fig. 2 ). If the agent gets stuck after 4 rounds of code generation, then we query the curriculum for another task. This iterative prompting approach significantly improves program synthesis for embodied control, enabling Voyager to continuously acquire diverse skills without human intervention.
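The loop described above can be summarized in a short Python sketch. The three callables stand in for the GPT-4 code generator, the Minecraft runtime, and the GPT-4 critic; their names and signatures, the `ExecutionResult` type, and the use of a plain list as the skill library are all hypothetical simplifications.

```python
from dataclasses import dataclass

MAX_ROUNDS = 4  # the text moves on to another task after 4 rounds of code generation


@dataclass
class ExecutionResult:
    state: str     # agent state after execution (e.g. an inventory listing)
    feedback: str  # environment feedback such as chat messages
    error: str     # execution error trace, empty string if none


def solve_task(task, generate_code, execute, verify, skill_library):
    """Refine a program until the critic accepts it or MAX_ROUNDS is reached.

    Returns True if a verified program was committed to skill_library,
    and False if the agent should ask the curriculum for another task.
    """
    code, feedback, error, critique = "", "", "", ""
    for _ in range(MAX_ROUNDS):
        # The previous code and all three feedback types go into the next prompt.
        code = generate_code(task, code, feedback, error, critique)
        result = execute(code)
        feedback, error = result.feedback, result.error
        success, critique = verify(task, result.state)
        if success:
            skill_library.append(code)
            return True
    return False
```

Passing the generator, executor, and critic in as arguments keeps the control flow testable without an LLM or a running game.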

3 Experiments

3.1 Experimental Setup

We leverage OpenAI’s gpt-4-0314 [ 35 ] and gpt-3.5-turbo-0301 [ 50 ] APIs for text completion, along with the text-embedding-ada-002 [ 51 ] API for text embedding. We set all temperatures to 0 except for the automatic curriculum, which uses temperature = 0.1 to encourage task diversity. Our simulation environment is built on top of MineDojo [ 23 ] and leverages Mineflayer [ 52 ] JavaScript APIs for motor controls. See Appendix, Sec. B.1 for more details.
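For reference, the model and temperature choices stated above could be collected in a small configuration table like the Python sketch below. The component keys and the `temperature_for` helper are hypothetical names; assigning temperature 0 to the GPT-3.5 question-answering step is this sketch's reading of "all temperatures to 0 except for the automatic curriculum", not something the text states separately.

```python
# Model and sampling temperature per component (component names are illustrative).
LLM_CONFIG = {
    "automatic_curriculum": {"model": "gpt-4-0314", "temperature": 0.1},
    # Assumption: the self-ask / self-answer step falls under "all other" temperatures.
    "curriculum_qa": {"model": "gpt-3.5-turbo-0301", "temperature": 0.0},
    "code_generation": {"model": "gpt-4-0314", "temperature": 0.0},
    "self_verification": {"model": "gpt-4-0314", "temperature": 0.0},
}

EMBEDDING_MODEL = "text-embedding-ada-002"


def temperature_for(component):
    """Look up the sampling temperature configured for a component."""
    return LLM_CONFIG[component]["temperature"]
```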

3.2 Baselines

Because there are no LLM-based agents that work out of the box for Minecraft, we make our best effort to select a number of representative algorithms as baselines. These methods were originally designed only for NLP tasks without embodiment, so we have to re-interpret them to be executable in MineDojo and compatible with our experimental setting:

ReAct [ 29 ] uses chain-of-thought prompting [ 46 ] by generating both reasoning traces and action plans with LLMs. We provide it with our environment feedback and the agent states as observations.

Reflexion [ 30 ] is built on top of ReAct [ 29 ] with self-reflection to infer more intuitive future actions. We provide it with execution errors and our self-verification module.

AutoGPT [ 28 ] is a popular software tool that automates NLP tasks by decomposing a high-level goal into multiple subgoals and executing them in a ReAct-style loop. We re-implement AutoGPT by using GPT-4 to do task decomposition and provide it with the agent states, environment feedback, and execution errors as observations for subgoal execution. Compared with Voyager , AutoGPT lacks the skill library for accumulating knowledge, self-verification for assessing task success, and automatic curriculum for open-ended exploration.

Note that we do not directly compare with prior methods that take Minecraft screen pixels as input and output low-level controls [ 53 , 54 , 55 ] . It would not be an apples-to-apples comparison, because we rely on the high-level Mineflayer [ 52 ] API to control the agent. Our work’s focus is on pushing the limits of GPT-4 for lifelong embodied agent learning, rather than solving the 3D perception or sensorimotor control problems. Voyager is orthogonal and can be combined with gradient-based approaches like VPT [ 8 ] as long as the controller provides a code API. We make a system-level comparison between Voyager and prior Minecraft agents in Table A.2 .

3.3 Evaluation Results

We systematically evaluate Voyager and baselines on their exploration performance, tech tree mastery, map coverage, and zero-shot generalization capability to novel tasks in a new world.

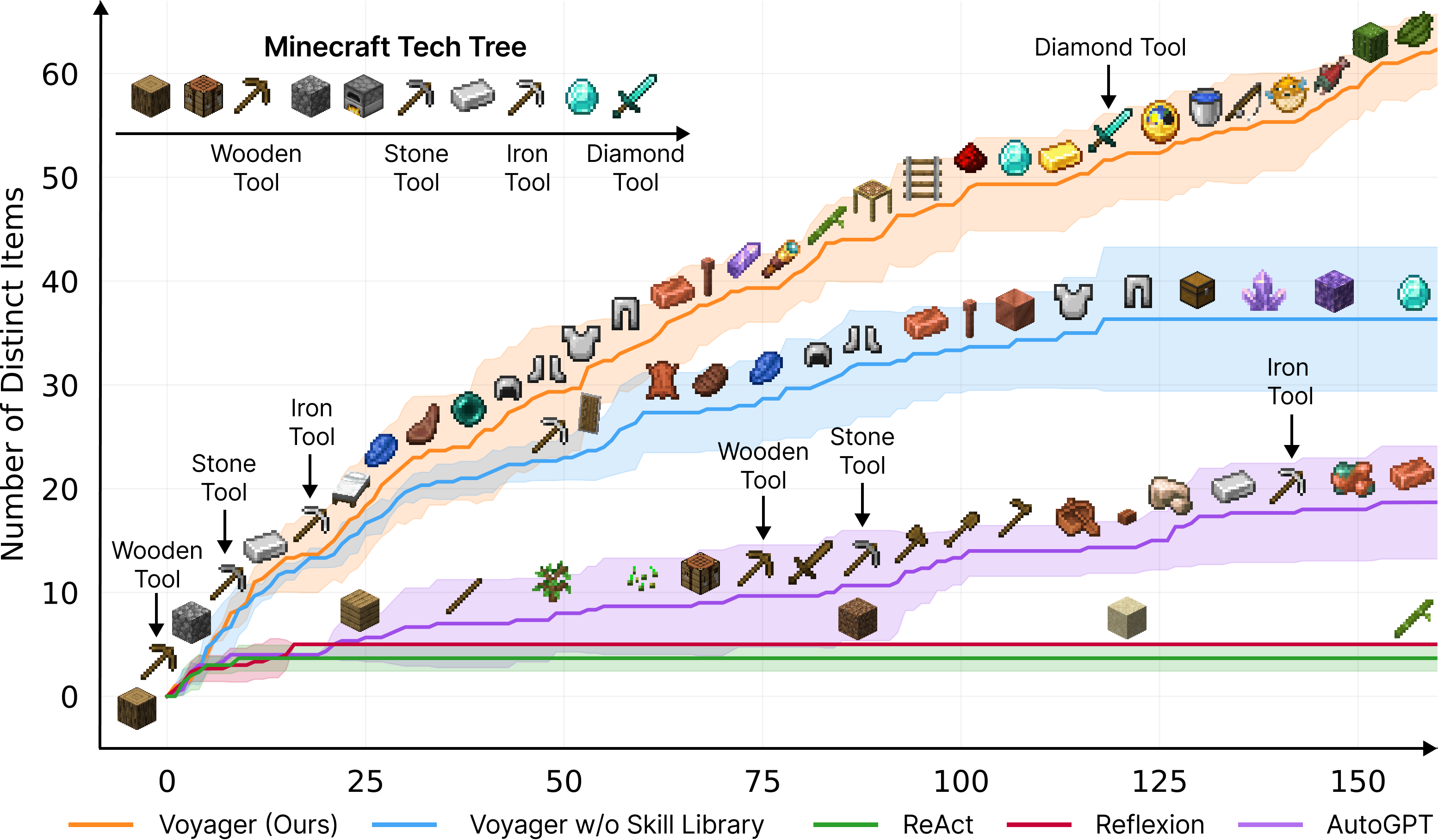

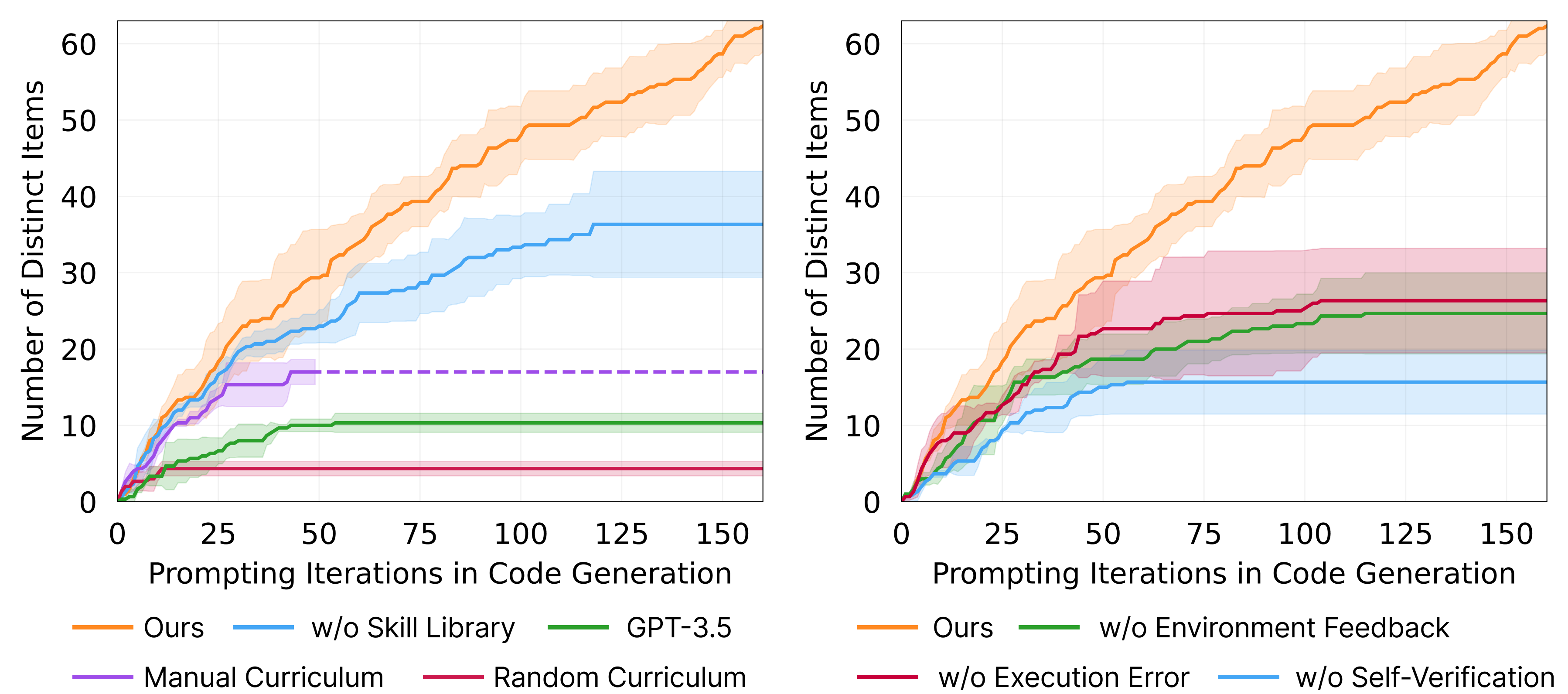

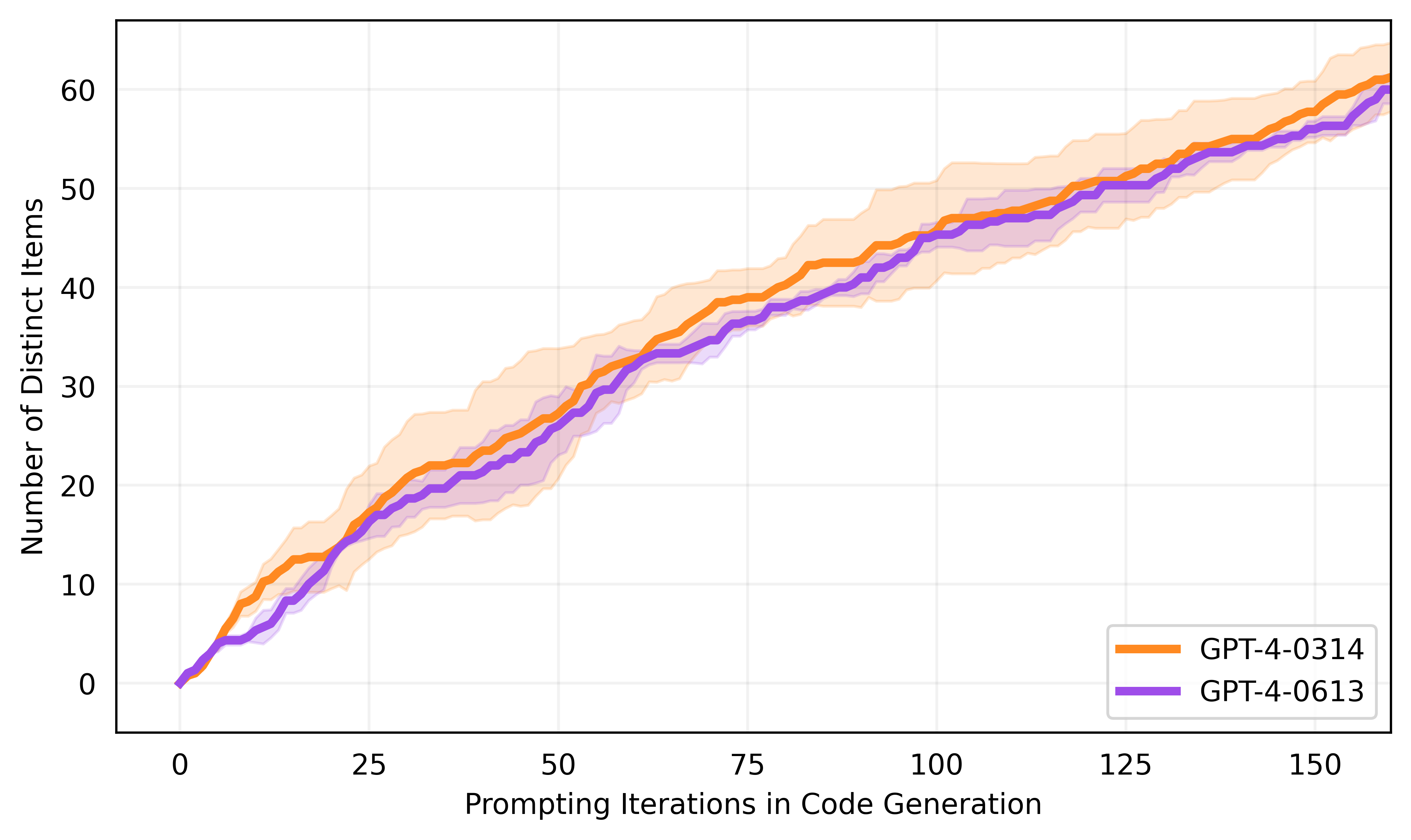

Significantly better exploration. Results of exploration performance are shown in Fig. 1 . Voyager’s superiority is evident in its ability to consistently make new strides, discovering 63 unique items within 160 prompting iterations, 3.3× as many novel items as its counterparts. On the other hand, AutoGPT lags considerably in discovering new items, while ReAct and Reflexion struggle to make significant progress, given the abstract nature of the open-ended exploration goal that is challenging to execute without an appropriate curriculum.

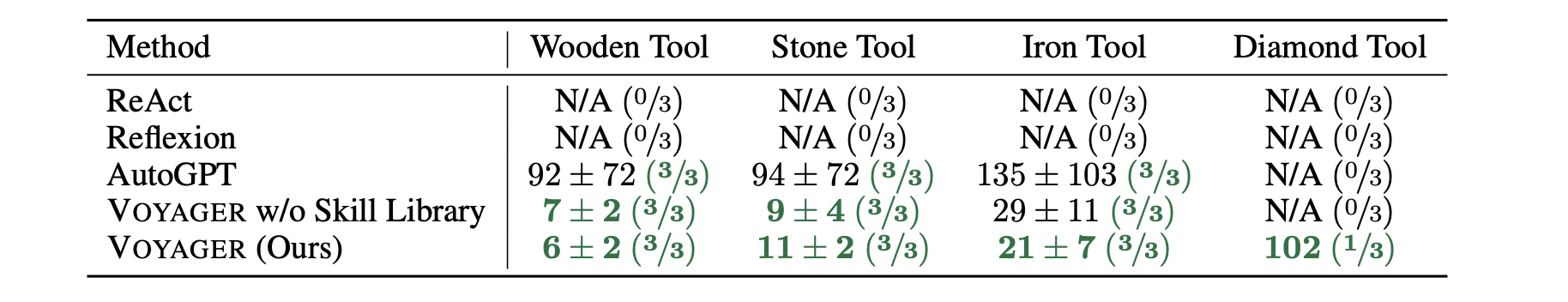

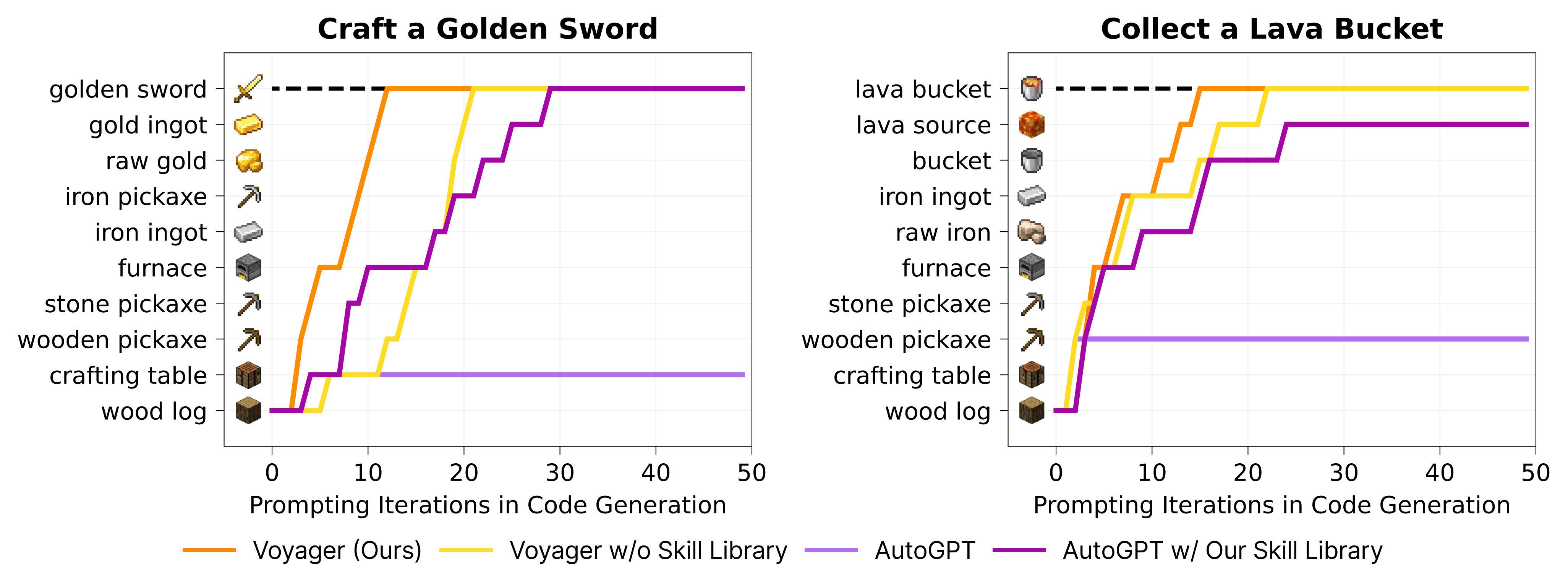

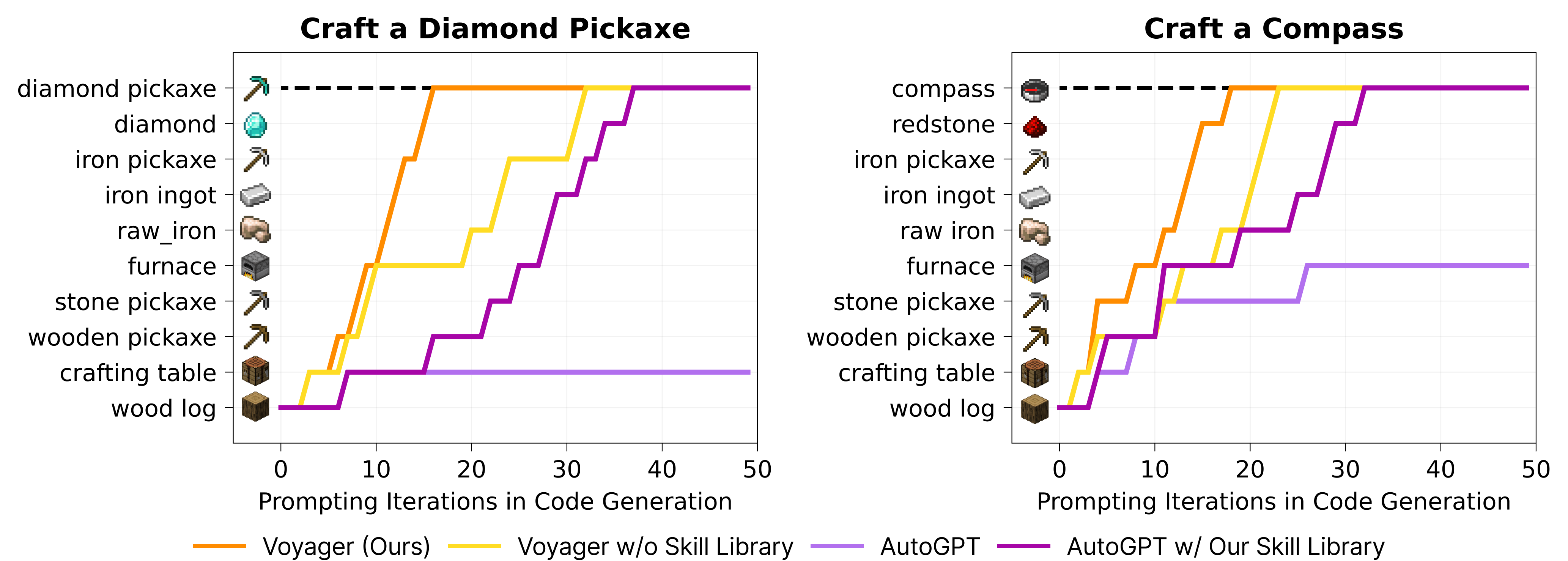

Consistent tech tree mastery. The Minecraft tech tree tests the agent’s ability to craft and use a hierarchy of tools. Progressing through this tree (wooden tool → stone tool → iron tool → diamond tool) requires the agent to master systematic and compositional skills. Compared with baselines, Voyager unlocks the wooden level 15.3× faster (in terms of the prompting iterations), the stone level 8.5× faster, the iron level 6.4× faster, and Voyager is the only one to unlock the diamond level of the tech tree (Fig. 2 and Table 1 ). This underscores the effectiveness of the automatic curriculum, which consistently presents challenges of suitable complexity to facilitate the agent’s progress.

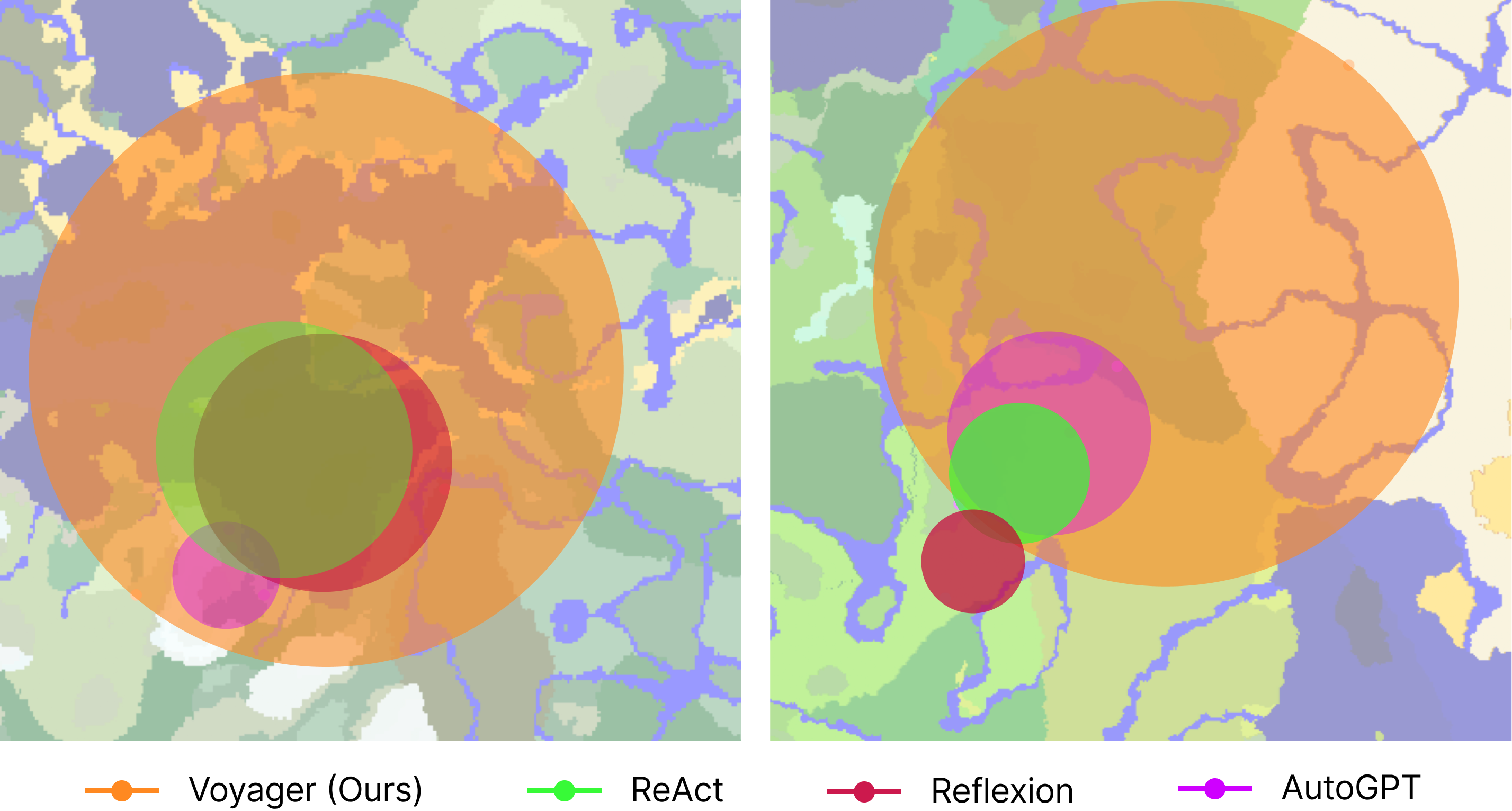

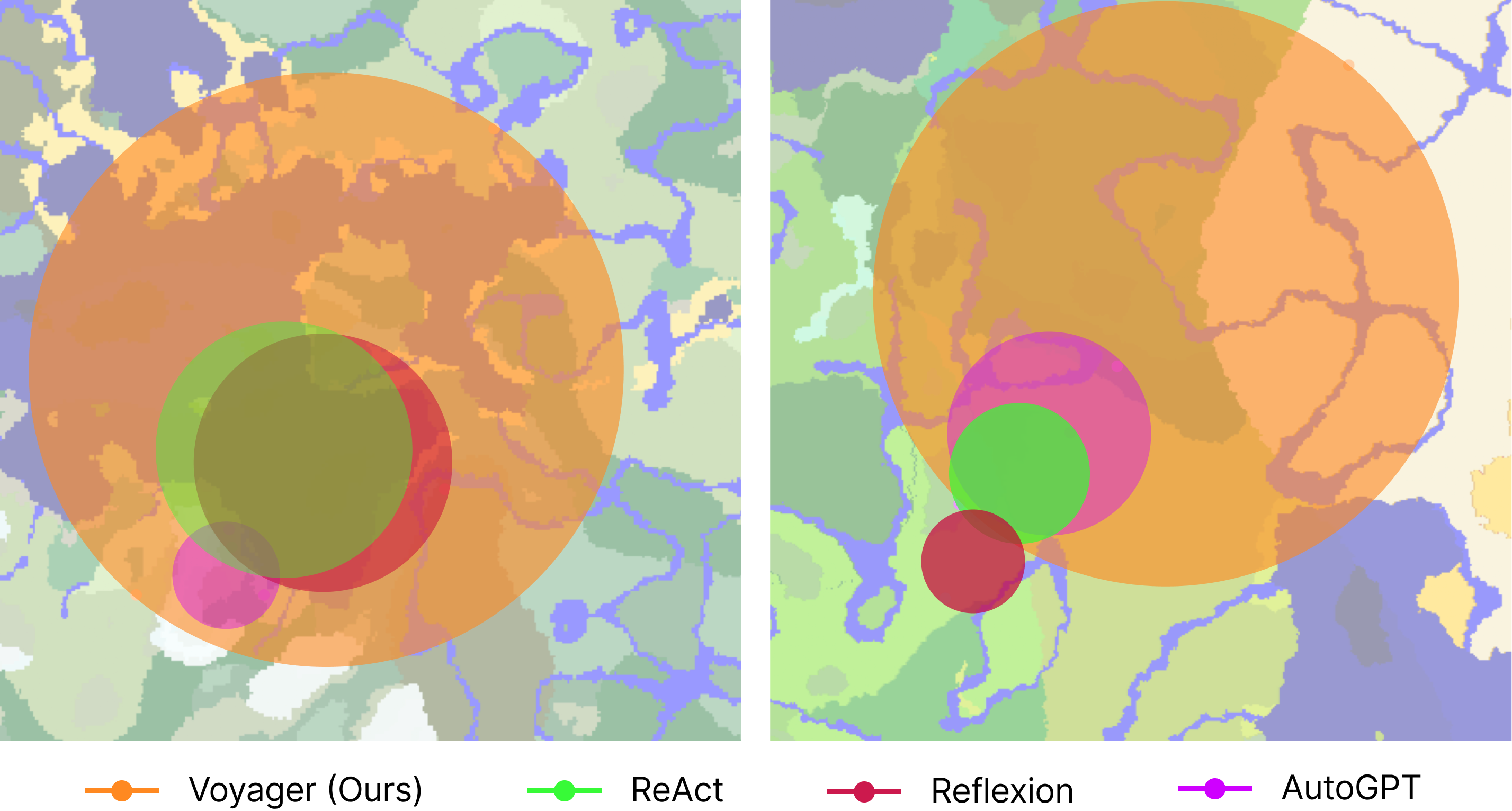

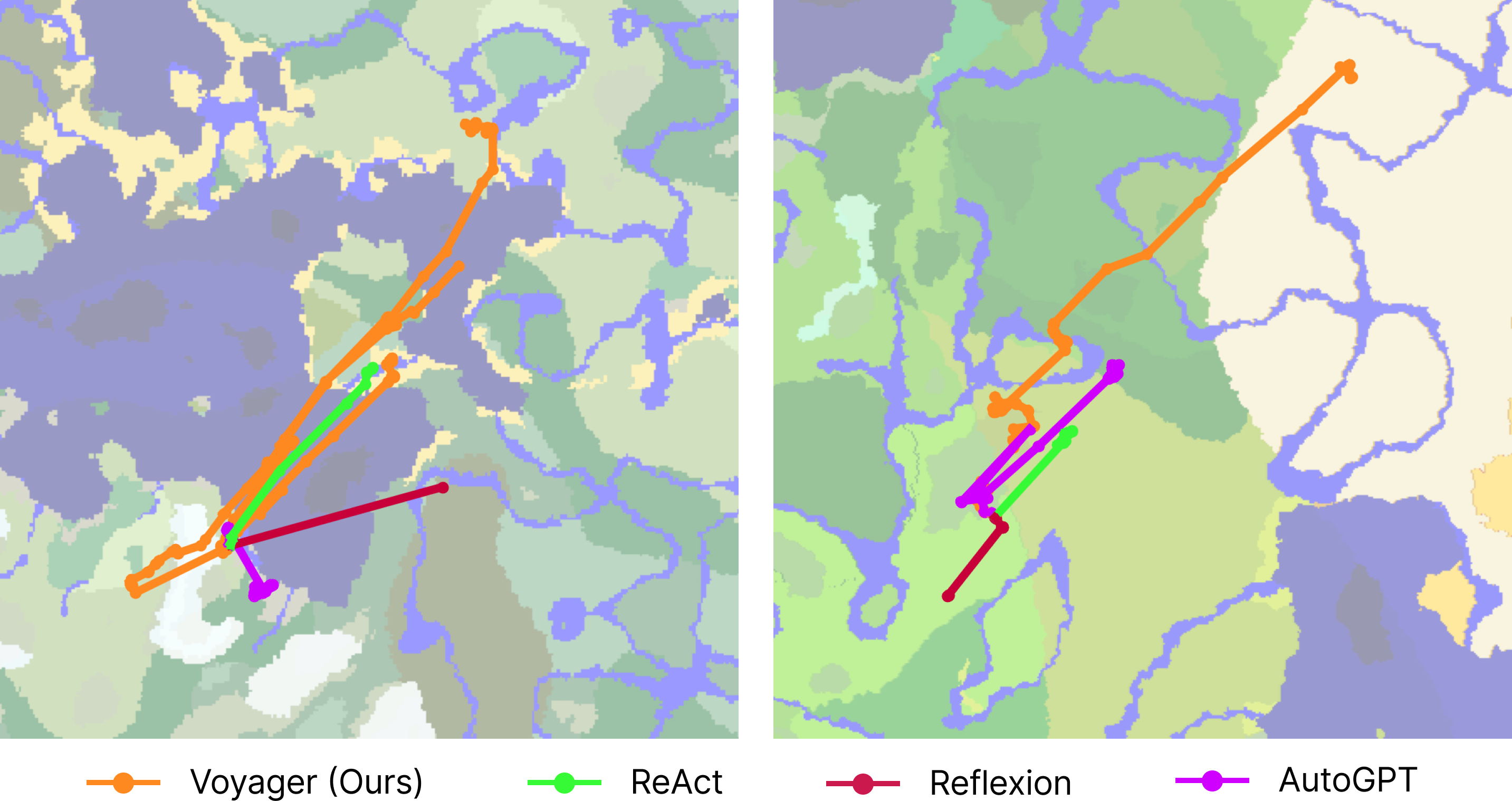

Extensive map traversal. Voyager is able to navigate distances 2.3× longer compared to baselines by traversing a variety of terrains, while the baseline agents often find themselves confined to local areas, which significantly hampers their capacity to discover new knowledge (Fig. 7 ).

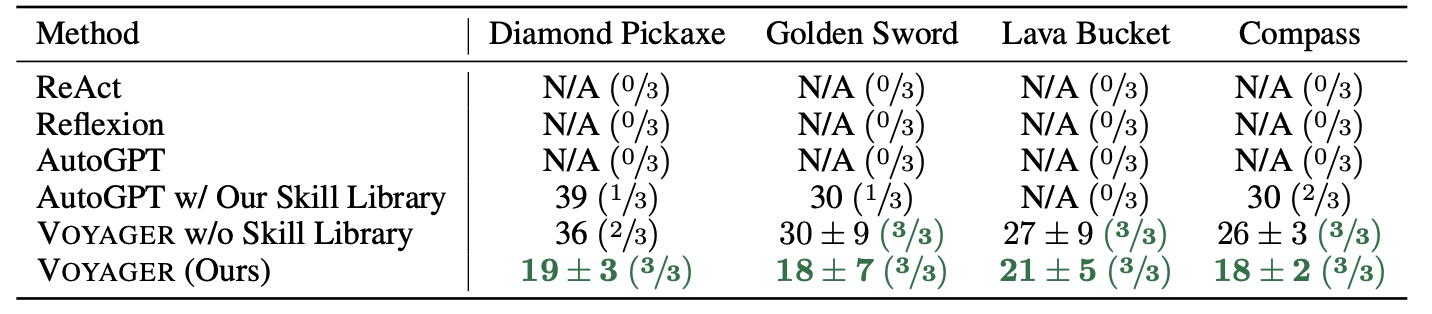

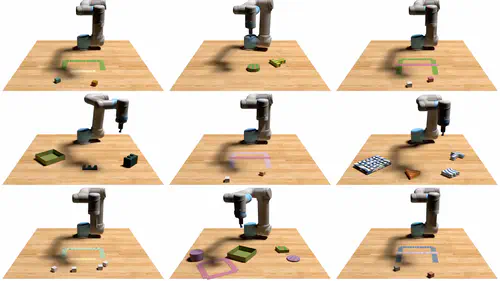

Efficient zero-shot generalization to unseen tasks. To evaluate zero-shot generalization, we clear the agent’s inventory, reset it to a newly instantiated world, and test it with unseen tasks. For both Voyager and AutoGPT, we utilize GPT-4 to break down the task into a series of subgoals. Table 2 and Fig. 8 show that Voyager can consistently solve all the tasks, while baselines cannot solve any task within 50 prompting iterations. Interestingly, our skill library constructed from lifelong learning not only enhances Voyager’s performance but also gives a boost to AutoGPT. This demonstrates that the skill library serves as a versatile tool that can be readily employed by other methods, effectively acting as a plug-and-play asset to enhance performance.

3.4 Ablation Studies

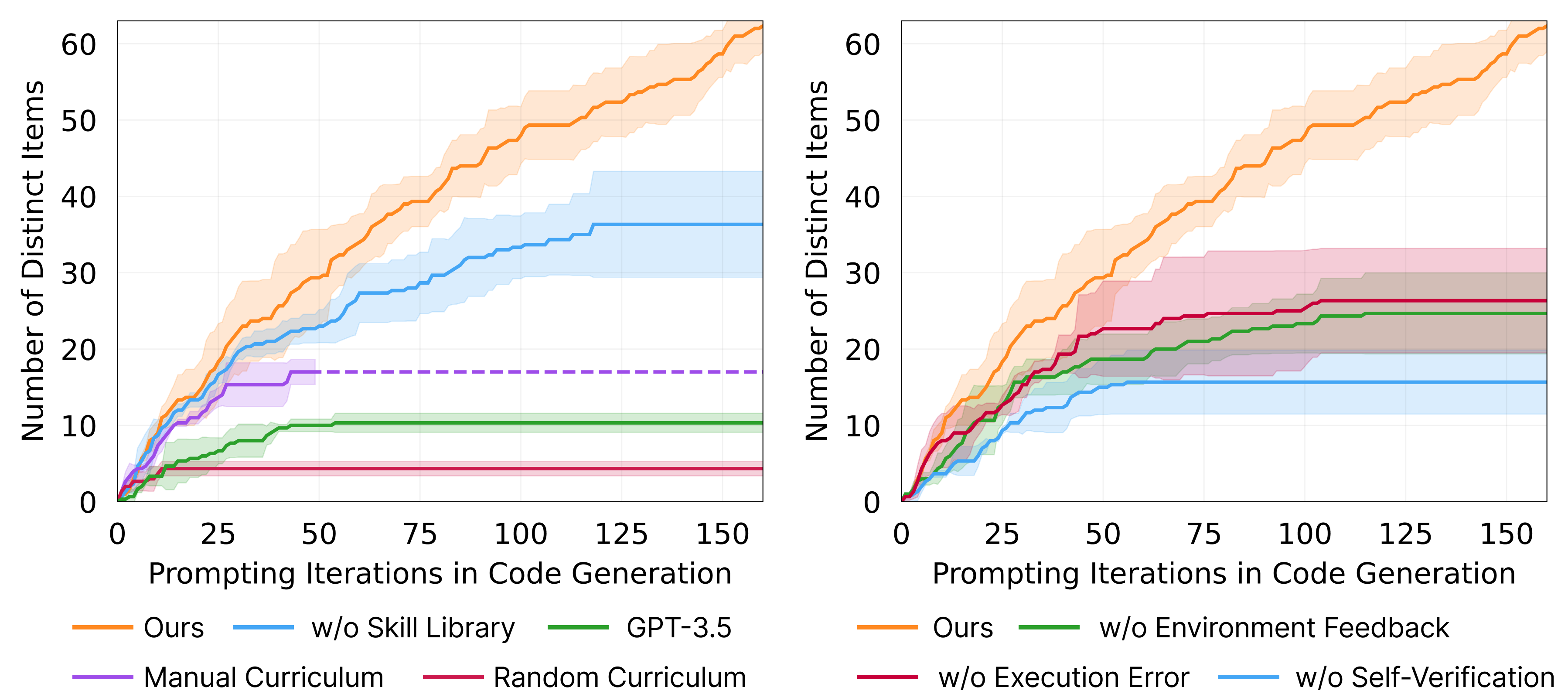

We ablate 6 design choices (automatic curriculum, skill library, environment feedback, execution errors, self-verification, and GPT-4 for code generation) in Voyager and study their impact on exploration performance (see Appendix, Sec. B.3 for details of each ablated variant). Results are shown in Fig. 9 . We highlight the key findings below:

Automatic curriculum is crucial for the agent’s consistent progress. The discovered item count drops by 93% if the curriculum is replaced with a random one, because certain tasks may be too challenging if attempted out of order. On the other hand, a manually designed curriculum requires significant Minecraft-specific expertise, and does not take into account the agent’s live situation. It falls short in the experimental results compared to our automatic curriculum.

Voyager w/o skill library exhibits a tendency to plateau in the later stages. This underscores the pivotal role that the skill library plays in Voyager . It helps create more complex actions and steadily pushes the agent’s boundaries by encouraging new skills to be built upon older ones.

Self-verification is the most important among all the feedback types. Removing the module leads to a significant drop (−73%) in the discovered item count. Self-verification serves as a critical mechanism to decide when to move on to a new task or reattempt a previously unsuccessful task.

GPT-4 significantly outperforms GPT-3.5 in code generation and obtains 5.7× more unique items, as GPT-4 exhibits a quantum leap in coding abilities. This finding corroborates recent studies in the literature [ 56 , 57 ] .

3.5 Multimodal Feedback from Humans

Voyager does not currently support visual perception, because the available version of GPT-4 API is text-only at the time of this writing. However, Voyager has the potential to be augmented by multimodal perception models [ 58 , 59 ] to achieve more impressive tasks. We demonstrate that given human feedback, Voyager is able to construct complex 3D structures in Minecraft, such as a Nether Portal and a house (Fig. 10 ). There are two ways to integrate human feedback:

Human as a critic (equivalent to Voyager ’s self-verification module): humans provide visual critique to Voyager , allowing it to modify the code from the previous round. This feedback is essential for correcting certain errors in the spatial details of a 3D structure that Voyager cannot perceive directly.

Human as a curriculum (equivalent to Voyager ’s automatic curriculum module): humans break down a complex building task into smaller steps, guiding Voyager to complete them incrementally. This approach improves Voyager ’s ability to handle more sophisticated 3D construction tasks.

4 Limitations and Future Work

Cost. The GPT-4 API incurs significant costs. It is 15× more expensive than GPT-3.5. Nevertheless, Voyager requires the quantum leap in code generation quality from GPT-4 (Fig. 9 ), which GPT-3.5 and open-source LLMs cannot provide [ 60 ] .

Inaccuracies. Despite the iterative prompting mechanism, there are still cases where the agent gets stuck and fails to generate the correct skill. The automatic curriculum has the flexibility to reattempt this task at a later time. Occasionally, the self-verification module may also fail, for example by not recognizing spider string as a success signal of beating a spider.

Hallucinations. The automatic curriculum occasionally proposes unachievable tasks. For example, it may ask the agent to craft a “copper sword” or “copper chestplate”, which are items that do not exist within the game. Hallucinations also occur during the code generation process. For instance, GPT-4 tends to use cobblestone as a fuel input, despite it being an invalid fuel source in the game. Additionally, it may call functions absent in the provided control primitive APIs, leading to code execution errors.

We are confident that improvements in the GPT API models as well as novel techniques for finetuning open-source LLMs will overcome these limitations in the future.

5 Related work

Decision-making Agents in Minecraft.

Minecraft is an open-ended 3D world with incredibly flexible game mechanics supporting a broad spectrum of activities. Built upon notable Minecraft benchmarks [ 23 , 61 , 62 , 63 , 64 , 65 ] , Minecraft learning algorithms can be divided into two categories: 1) Low-level controller: Many prior efforts leverage hierarchical reinforcement learning to learn from human demonstrations [ 66 , 67 , 68 ] . Kanitscheider et al. [ 14 ] design a curriculum based on success rates, but its objectives are limited to curated items. MineDojo [ 23 ] and VPT [ 8 ] utilize YouTube videos for large-scale pre-training. DreamerV3 [ 69 ] , on the other hand, learns a world model to explore the environment and collect diamonds. 2) High-level planner: Volum et al. [ 70 ] leverage few-shot prompting with Codex [ 41 ] to generate executable policies, but they require additional human interaction. Recent works leverage LLMs as a high-level planner in Minecraft by decomposing a high-level task into several subgoals following Minecraft recipes [ 55 , 53 , 71 ] , thus lacking full exploration flexibility. Like these latter works, Voyager also uses LLMs as a high-level planner by prompting GPT-4 and utilizes Mineflayer [ 52 ] as a low-level controller following Volum et al. [ 70 ] . Unlike prior works, Voyager employs an automatic curriculum that unfolds in a bottom-up manner, driven by curiosity, and therefore enables open-ended exploration.

Large Language Models for Agent Planning.

Inspired by the strong emergent capabilities of LLMs, such as zero-shot prompting and complex reasoning [ 72 , 37 , 38 , 36 , 73 , 74 ] , embodied agent research [ 75 , 76 , 77 , 78 ] has witnessed a significant increase in the utilization of LLMs for planning purposes. Recent efforts can be roughly classified into two groups. 1) Large language models for robot learning: Many prior works apply LLMs to generate subgoals for robot planning [ 27 , 27 , 25 , 79 , 80 ] . Inner Monologue [ 26 ] incorporates environment feedback for robot planning with LLMs. Code as Policies [ 16 ] and ProgPrompt [ 22 ] directly leverage LLMs to generate executable robot policies. VIMA [ 19 ] and PaLM-E [ 59 ] fine-tune pre-trained LLMs to support multimodal prompts. 2) Large language models for text agents: ReAct [ 29 ] leverages chain-of-thought prompting [ 46 ] and generates both reasoning traces and task-specific actions with LLMs. Reflexion [ 30 ] is built upon ReAct [ 29 ] with self-reflection to enhance reasoning. AutoGPT [ 28 ] is a popular tool that automates NLP tasks by crafting a curriculum of multiple subgoals for completing a high-level goal while incorporating ReAct [ 29 ] ’s reasoning and acting loops. DERA [ 81 ] frames a task as a dialogue between two GPT-4 [ 35 ] agents. Generative Agents [ 82 ] leverages ChatGPT [ 50 ] to simulate human behaviors by storing agents’ experiences as memories and retrieving those for planning, but its agent actions are not executable. SPRING [ 83 ] is a concurrent work that uses GPT-4 to extract game mechanics from game manuals, based on which it answers questions arranged in a directed acyclic graph and predicts the next action. All these works lack a skill library for developing more complex behaviors, which is a crucial component for the success of Voyager in lifelong learning.

Code Generation with Execution.

Code generation has been a longstanding challenge in NLP [ 41 , 84 , 85 , 73 , 37 ] , with various works leveraging execution results to improve program synthesis. Execution-guided approaches leverage intermediate execution outcomes to guide program search [ 86 , 87 , 88 ] . Another line of research utilizes majority voting to choose candidates based on their execution performance [ 89 , 90 ] . Additionally, LEVER [ 91 ] trains a verifier to distinguish and reject incorrect programs based on execution results. CLAIRIFY [ 92 ] , on the other hand, generates code for planning chemistry experiments and makes use of a rule-based verifier to iteratively provide error feedback to LLMs. Voyager distinguishes itself from these works by integrating environment feedback, execution errors, and self-verification (to assess task success) into an iterative prompting mechanism for embodied control.

6 Conclusion

In this work, we introduce Voyager , the first LLM-powered embodied lifelong learning agent, which leverages GPT-4 to explore the world continuously, develop increasingly sophisticated skills, and make new discoveries consistently without human intervention. Voyager exhibits superior performance in discovering novel items, unlocking the Minecraft tech tree, traversing diverse terrains, and applying its learned skill library to unseen tasks in a newly instantiated world. Voyager serves as a starting point to develop powerful generalist agents without tuning the model parameters.

7 Broader Impacts

Our research is conducted within Minecraft, a safe and harmless 3D video game environment. While Voyager is designed to be generally applicable to other domains, such as robotics, its application to physical robots would require additional attention and the implementation of safety constraints by humans to ensure responsible and secure deployment.

8 Acknowledgements

We are extremely grateful to Ziming Zhu, Kaiyu Yang, Rafał Kocielnik, Colin White, Or Sharir, Sahin Lale, De-An Huang, Jean Kossaifi, Yuncong Yang, Charles Zhang, Minchao Huang, and many other colleagues and friends for their helpful feedback and insightful discussions. This work was done during Guanzhi Wang’s internship at NVIDIA. Guanzhi Wang is supported by the Kortschak fellowship in Computing and Mathematical Sciences at Caltech.

References

- [1] Eric Kolve, Roozbeh Mottaghi, Winson Han, Eli VanderBilt, Luca Weihs, Alvaro Herrasti, Daniel Gordon, Yuke Zhu, Abhinav Gupta, and Ali Farhadi. Ai2-thor: An interactive 3d environment for visual ai. arXiv preprint arXiv:1712.05474, 2017.

- [2] Manolis Savva, Jitendra Malik, Devi Parikh, Dhruv Batra, Abhishek Kadian, Oleksandr Maksymets, Yili Zhao, Erik Wijmans, Bhavana Jain, Julian Straub, Jia Liu, and Vladlen Koltun. Habitat: A platform for embodied AI research. In 2019 IEEE/CVF International Conference on Computer Vision, ICCV 2019, Seoul, Korea (South), October 27 - November 2, 2019 , pages 9338–9346. IEEE, 2019.

- [3] Yuke Zhu, Josiah Wong, Ajay Mandlekar, and Roberto Martín-Martín. robosuite: A modular simulation framework and benchmark for robot learning. arXiv preprint arXiv:2009.12293, 2020.

- [4] Fei Xia, William B. Shen, Chengshu Li, Priya Kasimbeg, Micael Tchapmi, Alexander Toshev, Li Fei-Fei, Roberto Martín-Martín, and Silvio Savarese. Interactive gibson benchmark (igibson 0.5): A benchmark for interactive navigation in cluttered environments. arXiv preprint arXiv:1910.14442, 2019.

- [5] Bokui Shen, Fei Xia, Chengshu Li, Roberto Martín-Martín, Linxi Fan, Guanzhi Wang, Claudia Pérez-D’Arpino, Shyamal Buch, Sanjana Srivastava, Lyne P. Tchapmi, Micael E. Tchapmi, Kent Vainio, Josiah Wong, Li Fei-Fei, and Silvio Savarese. igibson 1.0: a simulation environment for interactive tasks in large realistic scenes. arXiv preprint arXiv:2012.02924, 2020.

- [6] Jens Kober, J Andrew Bagnell, and Jan Peters. Reinforcement learning in robotics: A survey. The International Journal of Robotics Research , 32(11):1238–1274, 2013.

- [7] Kai Arulkumaran, Marc Peter Deisenroth, Miles Brundage, and Anil Anthony Bharath. Deep reinforcement learning: A brief survey. IEEE Signal Processing Magazine , 34(6):26–38, 2017.

- [8] Bowen Baker, Ilge Akkaya, Peter Zhokhov, Joost Huizinga, Jie Tang, Adrien Ecoffet, Brandon Houghton, Raul Sampedro, and Jeff Clune. Video pretraining (vpt): Learning to act by watching unlabeled online videos. arXiv preprint arXiv:2206.11795, 2022.

- [9] DeepMind Interactive Agents Team, Josh Abramson, Arun Ahuja, Arthur Brussee, Federico Carnevale, Mary Cassin, Felix Fischer, Petko Georgiev, Alex Goldin, Mansi Gupta, Tim Harley, Felix Hill, Peter C Humphreys, Alden Hung, Jessica Landon, Timothy Lillicrap, Hamza Merzic, Alistair Muldal, Adam Santoro, Guy Scully, Tamara von Glehn, Greg Wayne, Nathaniel Wong, Chen Yan, and Rui Zhu. Creating multimodal interactive agents with imitation and self-supervised learning. arXiv preprint arXiv:2112.03763, 2021.

- [10] Oriol Vinyals, Igor Babuschkin, Junyoung Chung, Michael Mathieu, Max Jaderberg, Wojciech M Czarnecki, Andrew Dudzik, Aja Huang, Petko Georgiev, Richard Powell, et al. Alphastar: Mastering the real-time strategy game starcraft ii. DeepMind blog , 2, 2019.

- [11] Adrien Ecoffet, Joost Huizinga, Joel Lehman, Kenneth O. Stanley, and Jeff Clune. Go-explore: a new approach for hard-exploration problems. arXiv preprint arXiv:1901.10995, 2019.

- [12] Joost Huizinga and Jeff Clune. Evolving multimodal robot behavior via many stepping stones with the combinatorial multiobjective evolutionary algorithm. Evolutionary computation , 30(2):131–164, 2022.

- [13] Rui Wang, Joel Lehman, Aditya Rawal, Jiale Zhi, Yulun Li, Jeffrey Clune, and Kenneth O. Stanley. Enhanced POET: open-ended reinforcement learning through unbounded invention of learning challenges and their solutions. In Proceedings of the 37th International Conference on Machine Learning, ICML 2020, 13-18 July 2020, Virtual Event , volume 119 of Proceedings of Machine Learning Research , pages 9940–9951. PMLR, 2020.

- [14] Ingmar Kanitscheider, Joost Huizinga, David Farhi, William Hebgen Guss, Brandon Houghton, Raul Sampedro, Peter Zhokhov, Bowen Baker, Adrien Ecoffet, Jie Tang, Oleg Klimov, and Jeff Clune. Multi-task curriculum learning in a complex, visual, hard-exploration domain: Minecraft. arXiv preprint arXiv:2106.14876, 2021.

- [15] Michael Dennis, Natasha Jaques, Eugene Vinitsky, Alexandre M. Bayen, Stuart Russell, Andrew Critch, and Sergey Levine. Emergent complexity and zero-shot transfer via unsupervised environment design. In Hugo Larochelle, Marc’Aurelio Ranzato, Raia Hadsell, Maria-Florina Balcan, and Hsuan-Tien Lin, editors, Advances in Neural Information Processing Systems 33: Annual Conference on Neural Information Processing Systems 2020, NeurIPS 2020, December 6-12, 2020, virtual , 2020.

- [16] Jacky Liang, Wenlong Huang, Fei Xia, Peng Xu, Karol Hausman, Brian Ichter, Pete Florence, and Andy Zeng. Code as policies: Language model programs for embodied control. arXiv preprint arXiv:2209.07753, 2022.

- [17] Shao-Hua Sun, Te-Lin Wu, and Joseph J. Lim. Program guided agent. In 8th International Conference on Learning Representations, ICLR 2020, Addis Ababa, Ethiopia, April 26-30, 2020 . OpenReview.net, 2020.

- [18] Zelin Zhao, Karan Samel, Binghong Chen, and Le Song. Proto: Program-guided transformer for program-guided tasks. In Marc’Aurelio Ranzato, Alina Beygelzimer, Yann N. Dauphin, Percy Liang, and Jennifer Wortman Vaughan, editors, Advances in Neural Information Processing Systems 34: Annual Conference on Neural Information Processing Systems 2021, NeurIPS 2021, December 6-14, 2021, virtual , pages 17021–17036, 2021.

- [19] Yunfan Jiang, Agrim Gupta, Zichen Zhang, Guanzhi Wang, Yongqiang Dou, Yanjun Chen, Li Fei-Fei, Anima Anandkumar, Yuke Zhu, and Linxi (Jim) Fan. Vima: General robot manipulation with multimodal prompts. ARXIV.ORG , 2022.

- [20] Mohit Shridhar, Lucas Manuelli, and Dieter Fox. Cliport: What and where pathways for robotic manipulation. arXiv preprint arXiv:2109.12098, 2021.

- [21] Linxi Fan, Guanzhi Wang, De-An Huang, Zhiding Yu, Li Fei-Fei, Yuke Zhu, and Animashree Anandkumar. SECANT: self-expert cloning for zero-shot generalization of visual policies. In Marina Meila and Tong Zhang, editors, Proceedings of the 38th International Conference on Machine Learning, ICML 2021, 18-24 July 2021, Virtual Event , volume 139 of Proceedings of Machine Learning Research , pages 3088–3099. PMLR, 2021.

- [22] Ishika Singh, Valts Blukis, Arsalan Mousavian, Ankit Goyal, Danfei Xu, Jonathan Tremblay, Dieter Fox, Jesse Thomason, and Animesh Garg. Progprompt: Generating situated robot task plans using large language models. arXiv preprint arXiv:2209.11302, 2022.

- [23] Linxi Fan, Guanzhi Wang, Yunfan Jiang, Ajay Mandlekar, Yuncong Yang, Haoyi Zhu, Andrew Tang, De-An Huang, Yuke Zhu, and Anima Anandkumar. Minedojo: Building open-ended embodied agents with internet-scale knowledge. arXiv preprint arXiv:2206.08853, 2022.

- [24] Andy Zeng, Adrian Wong, Stefan Welker, Krzysztof Choromanski, Federico Tombari, Aveek Purohit, Michael Ryoo, Vikas Sindhwani, Johnny Lee, Vincent Vanhoucke, and Pete Florence. Socratic models: Composing zero-shot multimodal reasoning with language. arXiv preprint arXiv:2204.00598, 2022.

- [25] Michael Ahn, Anthony Brohan, Noah Brown, Yevgen Chebotar, Omar Cortes, Byron David, Chelsea Finn, Keerthana Gopalakrishnan, Karol Hausman, Alex Herzog, Daniel Ho, Jasmine Hsu, Julian Ibarz, Brian Ichter, Alex Irpan, Eric Jang, Rosario Jauregui Ruano, Kyle Jeffrey, Sally Jesmonth, Nikhil J Joshi, Ryan Julian, Dmitry Kalashnikov, Yuheng Kuang, Kuang-Huei Lee, Sergey Levine, Yao Lu, Linda Luu, Carolina Parada, Peter Pastor, Jornell Quiambao, Kanishka Rao, Jarek Rettinghouse, Diego Reyes, Pierre Sermanet, Nicolas Sievers, Clayton Tan, Alexander Toshev, Vincent Vanhoucke, Fei Xia, Ted Xiao, Peng Xu, Sichun Xu, and Mengyuan Yan. Do as i can, not as i say: Grounding language in robotic affordances. arXiv preprint arXiv:2204.01691, 2022.

- [26] Wenlong Huang, Fei Xia, Ted Xiao, Harris Chan, Jacky Liang, Pete Florence, Andy Zeng, Jonathan Tompson, Igor Mordatch, Yevgen Chebotar, Pierre Sermanet, Noah Brown, Tomas Jackson, Linda Luu, Sergey Levine, Karol Hausman, and Brian Ichter. Inner monologue: Embodied reasoning through planning with language models. arXiv preprint arXiv:2207.05608, 2022.

- [27] Wenlong Huang, Pieter Abbeel, Deepak Pathak, and Igor Mordatch. Language models as zero-shot planners: Extracting actionable knowledge for embodied agents. In Kamalika Chaudhuri, Stefanie Jegelka, Le Song, Csaba Szepesvári, Gang Niu, and Sivan Sabato, editors, International Conference on Machine Learning, ICML 2022, 17-23 July 2022, Baltimore, Maryland, USA , volume 162 of Proceedings of Machine Learning Research , pages 9118–9147. PMLR, 2022.

- [28] Significant-gravitas/auto-gpt: An experimental open-source attempt to make gpt-4 fully autonomous., 2023.

- [29] Shunyu Yao, Jeffrey Zhao, Dian Yu, Nan Du, Izhak Shafran, Karthik Narasimhan, and Yuan Cao. React: Synergizing reasoning and acting in language models. arXiv preprint arXiv:2210.03629, 2022.

- [30] Noah Shinn, Beck Labash, and Ashwin Gopinath. Reflexion: an autonomous agent with dynamic memory and self-reflection. arXiv preprint arXiv:2303.11366, 2023.

- [31] German Ignacio Parisi, Ronald Kemker, Jose L. Part, Christopher Kanan, and Stefan Wermter. Continual lifelong learning with neural networks: A review. Neural Networks , 113:54–71, 2019.

- [32] Liyuan Wang, Xingxing Zhang, Hang Su, and Jun Zhu. A comprehensive survey of continual learning: Theory, method and application. arXiv preprint arXiv:2302.00487, 2023.

- [33] Volodymyr Mnih, Koray Kavukcuoglu, David Silver, Alex Graves, Ioannis Antonoglou, Daan Wierstra, and Martin Riedmiller. Playing atari with deep reinforcement learning. arXiv preprint arXiv:1312.5602, 2013.

- [34] OpenAI, Christopher Berner, Greg Brockman, Brooke Chan, Vicki Cheung, Przemysław Dębiak, Christy Dennison, David Farhi, Quirin Fischer, Shariq Hashme, Chris Hesse, Rafal Józefowicz, Scott Gray, Catherine Olsson, Jakub Pachocki, Michael Petrov, Henrique P. d. O. Pinto, Jonathan Raiman, Tim Salimans, Jeremy Schlatter, Jonas Schneider, Szymon Sidor, Ilya Sutskever, Jie Tang, Filip Wolski, and Susan Zhang. Dota 2 with large scale deep reinforcement learning. arXiv preprint arXiv:1912.06680, 2019.

- [35] OpenAI. Gpt-4 technical report. arXiv preprint arXiv:2303.08774, 2023.

- [36] Jason Wei, Yi Tay, Rishi Bommasani, Colin Raffel, Barret Zoph, Sebastian Borgeaud, Dani Yogatama, Maarten Bosma, Denny Zhou, Donald Metzler, Ed H. Chi, Tatsunori Hashimoto, Oriol Vinyals, Percy Liang, Jeff Dean, and William Fedus. Emergent abilities of large language models. arXiv preprint arXiv:2206.07682, 2022.

- [37] Tom B. Brown, Benjamin Mann, Nick Ryder, Melanie Subbiah, Jared Kaplan, Prafulla Dhariwal, Arvind Neelakantan, Pranav Shyam, Girish Sastry, Amanda Askell, Sandhini Agarwal, Ariel Herbert-Voss, Gretchen Krueger, Tom Henighan, Rewon Child, Aditya Ramesh, Daniel M. Ziegler, Jeffrey Wu, Clemens Winter, Christopher Hesse, Mark Chen, Eric Sigler, Mateusz Litwin, Scott Gray, Benjamin Chess, Jack Clark, Christopher Berner, Sam McCandlish, Alec Radford, Ilya Sutskever, and Dario Amodei. Language models are few-shot learners. In Hugo Larochelle, Marc’Aurelio Ranzato, Raia Hadsell, Maria-Florina Balcan, and Hsuan-Tien Lin, editors, Advances in Neural Information Processing Systems 33: Annual Conference on Neural Information Processing Systems 2020, NeurIPS 2020, December 6-12, 2020, virtual , 2020.

- [38] Colin Raffel, Noam Shazeer, Adam Roberts, Katherine Lee, Sharan Narang, Michael Matena, Yanqi Zhou, Wei Li, and Peter J. Liu. Exploring the limits of transfer learning with a unified text-to-text transformer. J. Mach. Learn. Res., 21:140:1–140:67, 2020.

- [39] Benjamin Eysenbach, Abhishek Gupta, Julian Ibarz, and Sergey Levine. Diversity is all you need: Learning skills without a reward function. In 7th International Conference on Learning Representations, ICLR 2019, New Orleans, LA, USA, May 6-9, 2019. OpenReview.net, 2019.

- [40] Edoardo Conti, Vashisht Madhavan, Felipe Petroski Such, Joel Lehman, Kenneth O. Stanley, and Jeff Clune. Improving exploration in evolution strategies for deep reinforcement learning via a population of novelty-seeking agents. In Samy Bengio, Hanna M. Wallach, Hugo Larochelle, Kristen Grauman, Nicolò Cesa-Bianchi, and Roman Garnett, editors, Advances in Neural Information Processing Systems 31: Annual Conference on Neural Information Processing Systems 2018, NeurIPS 2018, December 3-8, 2018, Montréal, Canada, pages 5032–5043, 2018.

- [41] Mark Chen, Jerry Tworek, Heewoo Jun, Qiming Yuan, Henrique Ponde de Oliveira Pinto, Jared Kaplan, Harri Edwards, Yuri Burda, Nicholas Joseph, Greg Brockman, Alex Ray, Raul Puri, Gretchen Krueger, Michael Petrov, Heidy Khlaaf, Girish Sastry, Pamela Mishkin, Brooke Chan, Scott Gray, Nick Ryder, Mikhail Pavlov, Alethea Power, Lukasz Kaiser, Mohammad Bavarian, Clemens Winter, Philippe Tillet, Felipe Petroski Such, Dave Cummings, Matthias Plappert, Fotios Chantzis, Elizabeth Barnes, Ariel Herbert-Voss, William Hebgen Guss, Alex Nichol, Alex Paino, Nikolas Tezak, Jie Tang, Igor Babuschkin, Suchir Balaji, Shantanu Jain, William Saunders, Christopher Hesse, Andrew N. Carr, Jan Leike, Josh Achiam, Vedant Misra, Evan Morikawa, Alec Radford, Matthew Knight, Miles Brundage, Mira Murati, Katie Mayer, Peter Welinder, Bob McGrew, Dario Amodei, Sam McCandlish, Ilya Sutskever, and Wojciech Zaremba. Evaluating large language models trained on code. arXiv preprint arXiv:2107.03374, 2021.

- [42] Rui Wang, Joel Lehman, Jeff Clune, and Kenneth O. Stanley. Paired open-ended trailblazer (poet): Endlessly generating increasingly complex and diverse learning environments and their solutions. arXiv preprint arXiv:1901.01753, 2019.

- [43] Rémy Portelas, Cédric Colas, Lilian Weng, Katja Hofmann, and Pierre-Yves Oudeyer. Automatic curriculum learning for deep RL: A short survey. In Christian Bessiere, editor, Proceedings of the Twenty-Ninth International Joint Conference on Artificial Intelligence, IJCAI 2020, pages 4819–4825. ijcai.org, 2020.

- [44] Sébastien Forestier, Rémy Portelas, Yoan Mollard, and Pierre-Yves Oudeyer. Intrinsically motivated goal exploration processes with automatic curriculum learning. The Journal of Machine Learning Research, 23(1):6818–6858, 2022.

- [45] Kevin Ellis, Catherine Wong, Maxwell Nye, Mathias Sable-Meyer, Luc Cary, Lucas Morales, Luke Hewitt, Armando Solar-Lezama, and Joshua B. Tenenbaum. Dreamcoder: Growing generalizable, interpretable knowledge with wake-sleep bayesian program learning. arXiv preprint arXiv:2006.08381, 2020.

- [46] Jason Wei, Xuezhi Wang, Dale Schuurmans, Maarten Bosma, Ed Chi, Quoc Le, and Denny Zhou. Chain of thought prompting elicits reasoning in large language models. arXiv preprint arXiv:2201.11903, 2022.

- [47] Volodymyr Mnih, Adrià Puigdomènech Badia, Mehdi Mirza, Alex Graves, Timothy P. Lillicrap, Tim Harley, David Silver, and Koray Kavukcuoglu. Asynchronous methods for deep reinforcement learning. In Maria-Florina Balcan and Kilian Q. Weinberger, editors, Proceedings of the 33rd International Conference on Machine Learning, ICML 2016, New York City, NY, USA, June 19-24, 2016, volume 48 of JMLR Workshop and Conference Proceedings, pages 1928–1937. JMLR.org, 2016.

- [48] John Schulman, Filip Wolski, Prafulla Dhariwal, Alec Radford, and Oleg Klimov. Proximal policy optimization algorithms. arXiv preprint arXiv:1707.06347, 2017.

- [49] Timothy P. Lillicrap, Jonathan J. Hunt, Alexander Pritzel, Nicolas Heess, Tom Erez, Yuval Tassa, David Silver, and Daan Wierstra. Continuous control with deep reinforcement learning. In Yoshua Bengio and Yann LeCun, editors, 4th International Conference on Learning Representations, ICLR 2016, San Juan, Puerto Rico, May 2-4, 2016, Conference Track Proceedings, 2016.

- [50] Introducing chatgpt, 2022.

- [51] New and improved embedding model, 2022.

- [52] PrismarineJS. Prismarinejs/mineflayer: Create minecraft bots with a powerful, stable, and high level javascript api., 2013.

- [53] Kolby Nottingham, Prithviraj Ammanabrolu, Alane Suhr, Yejin Choi, Hanna Hajishirzi, Sameer Singh, and Roy Fox. Do embodied agents dream of pixelated sheep?: Embodied decision making using language guided world modelling. arXiv preprint, 2023.

- [54] Shaofei Cai, Zihao Wang, Xiaojian Ma, Anji Liu, and Yitao Liang. Open-world multi-task control through goal-aware representation learning and adaptive horizon prediction. arXiv preprint arXiv:2301.10034, 2023.

- [55] Zihao Wang, Shaofei Cai, Anji Liu, Xiaojian Ma, and Yitao Liang. Describe, explain, plan and select: Interactive planning with large language models enables open-world multi-task agents. arXiv preprint arXiv:2302.01560, 2023.

- [56] Sébastien Bubeck, Varun Chandrasekaran, Ronen Eldan, Johannes Gehrke, Eric Horvitz, Ece Kamar, Peter Lee, Yin Tat Lee, Yuanzhi Li, Scott Lundberg, Harsha Nori, Hamid Palangi, Marco Tulio Ribeiro, and Yi Zhang. Sparks of artificial general intelligence: Early experiments with gpt-4. arXiv preprint arXiv:2303.12712, 2023.

- [57] Yiheng Liu, Tianle Han, Siyuan Ma, Jiayue Zhang, Yuanyuan Yang, Jiaming Tian, Hao He, Antong Li, Mengshen He, Zhengliang Liu, Zihao Wu, Dajiang Zhu, Xiang Li, Ning Qiang, Dingang Shen, Tianming Liu, and Bao Ge. Summary of chatgpt/gpt-4 research and perspective towards the future of large language models. arXiv preprint arXiv:2304.01852, 2023.

- [58] Shikun Liu, Linxi Fan, Edward Johns, Zhiding Yu, Chaowei Xiao, and Anima Anandkumar. Prismer: A vision-language model with an ensemble of experts. arXiv preprint arXiv:2303.02506, 2023.

- [59] Danny Driess, Fei Xia, Mehdi S. M. Sajjadi, Corey Lynch, Aakanksha Chowdhery, Brian Ichter, Ayzaan Wahid, Jonathan Tompson, Quan Vuong, Tianhe Yu, Wenlong Huang, Yevgen Chebotar, Pierre Sermanet, Daniel Duckworth, Sergey Levine, Vincent Vanhoucke, Karol Hausman, Marc Toussaint, Klaus Greff, Andy Zeng, Igor Mordatch, and Pete Florence. Palm-e: An embodied multimodal language model. arXiv preprint arXiv:2303.03378, 2023.

- [60] Hugo Touvron, Thibaut Lavril, Gautier Izacard, Xavier Martinet, Marie-Anne Lachaux, Timothée Lacroix, Baptiste Rozière, Naman Goyal, Eric Hambro, Faisal Azhar, Aurelien Rodriguez, Armand Joulin, Edouard Grave, and Guillaume Lample. Llama: Open and efficient foundation language models. arXiv preprint arXiv:2302.13971, 2023.

- [61] William H. Guss, Brandon Houghton, Nicholay Topin, Phillip Wang, Cayden Codel, Manuela Veloso, and Ruslan Salakhutdinov. Minerl: A large-scale dataset of minecraft demonstrations. In Sarit Kraus, editor, Proceedings of the Twenty-Eighth International Joint Conference on Artificial Intelligence, IJCAI 2019, Macao, China, August 10-16, 2019, pages 2442–2448. ijcai.org, 2019.

- [62] William H. Guss, Cayden Codel, Katja Hofmann, Brandon Houghton, Noboru Kuno, Stephanie Milani, Sharada Mohanty, Diego Perez Liebana, Ruslan Salakhutdinov, Nicholay Topin, Manuela Veloso, and Phillip Wang. The minerl 2019 competition on sample efficient reinforcement learning using human priors. arXiv preprint arXiv:1904.10079, 2019.

- [63] William H. Guss, Mario Ynocente Castro, Sam Devlin, Brandon Houghton, Noboru Sean Kuno, Crissman Loomis, Stephanie Milani, Sharada Mohanty, Keisuke Nakata, Ruslan Salakhutdinov, John Schulman, Shinya Shiroshita, Nicholay Topin, Avinash Ummadisingu, and Oriol Vinyals. The minerl 2020 competition on sample efficient reinforcement learning using human priors. arXiv preprint arXiv:2101.11071, 2021.

- [64] Anssi Kanervisto, Stephanie Milani, Karolis Ramanauskas, Nicholay Topin, Zichuan Lin, Junyou Li, Jianing Shi, Deheng Ye, Qiang Fu, Wei Yang, Weijun Hong, Zhongyue Huang, Haicheng Chen, Guangjun Zeng, Yue Lin, Vincent Micheli, Eloi Alonso, François Fleuret, Alexander Nikulin, Yury Belousov, Oleg Svidchenko, and Aleksei Shpilman. Minerl diamond 2021 competition: Overview, results, and lessons learned. arXiv preprint arXiv:2202.10583, 2022.

- [65] Matthew Johnson, Katja Hofmann, Tim Hutton, and David Bignell. The malmo platform for artificial intelligence experimentation. In Subbarao Kambhampati, editor, Proceedings of the Twenty-Fifth International Joint Conference on Artificial Intelligence, IJCAI 2016, New York, NY, USA, 9-15 July 2016, pages 4246–4247. IJCAI/AAAI Press, 2016.

- [66] Zichuan Lin, Junyou Li, Jianing Shi, Deheng Ye, Qiang Fu, and Wei Yang. Juewu-mc: Playing minecraft with sample-efficient hierarchical reinforcement learning. arXiv preprint arXiv:2112.04907, 2021.

- [67] Hangyu Mao, Chao Wang, Xiaotian Hao, Yihuan Mao, Yiming Lu, Chengjie Wu, Jianye Hao, Dong Li, and Pingzhong Tang. Seihai: A sample-efficient hierarchical ai for the minerl competition. arXiv preprint arXiv:2111.08857, 2021.

- [68] Alexey Skrynnik, Aleksey Staroverov, Ermek Aitygulov, Kirill Aksenov, Vasilii Davydov, and Aleksandr I. Panov. Hierarchical deep q-network from imperfect demonstrations in minecraft. Cogn. Syst. Res., 65:74–78, 2021.

- [69] Danijar Hafner, Jurgis Pasukonis, Jimmy Ba, and Timothy Lillicrap. Mastering diverse domains through world models. arXiv preprint arXiv:2301.04104, 2023.

- [70] Ryan Volum, Sudha Rao, Michael Xu, Gabriel DesGarennes, Chris Brockett, Benjamin Van Durme, Olivia Deng, Akanksha Malhotra, and Bill Dolan. Craft an iron sword: Dynamically generating interactive game characters by prompting large language models tuned on code. In Proceedings of the 3rd Wordplay: When Language Meets Games Workshop (Wordplay 2022), pages 25–43, Seattle, United States, 2022. Association for Computational Linguistics.

- [71] Haoqi Yuan, Chi Zhang, Hongcheng Wang, Feiyang Xie, Penglin Cai, Hao Dong, and Zongqing Lu. Plan4mc: Skill reinforcement learning and planning for open-world minecraft tasks. arXiv preprint arXiv:2303.16563, 2023.

- [72] Rishi Bommasani, Drew A. Hudson, Ehsan Adeli, Russ Altman, Simran Arora, Sydney von Arx, Michael S. Bernstein, Jeannette Bohg, Antoine Bosselut, Emma Brunskill, Erik Brynjolfsson, Shyamal Buch, Dallas Card, Rodrigo Castellon, Niladri Chatterji, Annie Chen, Kathleen Creel, Jared Quincy Davis, Dora Demszky, Chris Donahue, Moussa Doumbouya, Esin Durmus, Stefano Ermon, John Etchemendy, Kawin Ethayarajh, Li Fei-Fei, Chelsea Finn, Trevor Gale, Lauren Gillespie, Karan Goel, Noah Goodman, Shelby Grossman, Neel Guha, Tatsunori Hashimoto, Peter Henderson, John Hewitt, Daniel E. Ho, Jenny Hong, Kyle Hsu, Jing Huang, Thomas Icard, Saahil Jain, Dan Jurafsky, Pratyusha Kalluri, Siddharth Karamcheti, Geoff Keeling, Fereshte Khani, Omar Khattab, Pang Wei Koh, Mark Krass, Ranjay Krishna, Rohith Kuditipudi, Ananya Kumar, Faisal Ladhak, Mina Lee, Tony Lee, Jure Leskovec, Isabelle Levent, Xiang Lisa Li, Xuechen Li, Tengyu Ma, Ali Malik, Christopher D. Manning, Suvir Mirchandani, Eric Mitchell, Zanele Munyikwa, Suraj Nair, Avanika Narayan, Deepak Narayanan, Ben Newman, Allen Nie, Juan Carlos Niebles, Hamed Nilforoshan, Julian Nyarko, Giray Ogut, Laurel Orr, Isabel Papadimitriou, Joon Sung Park, Chris Piech, Eva Portelance, Christopher Potts, Aditi Raghunathan, Rob Reich, Hongyu Ren, Frieda Rong, Yusuf Roohani, Camilo Ruiz, Jack Ryan, Christopher Ré, Dorsa Sadigh, Shiori Sagawa, Keshav Santhanam, Andy Shih, Krishnan Srinivasan, Alex Tamkin, Rohan Taori, Armin W. Thomas, Florian Tramèr, Rose E. Wang, William Wang, Bohan Wu, Jiajun Wu, Yuhuai Wu, Sang Michael Xie, Michihiro Yasunaga, Jiaxuan You, Matei Zaharia, Michael Zhang, Tianyi Zhang, Xikun Zhang, Yuhui Zhang, Lucia Zheng, Kaitlyn Zhou, and Percy Liang. On the opportunities and risks of foundation models. arXiv preprint arXiv:2108.07258, 2021.

- [73] Aakanksha Chowdhery, Sharan Narang, Jacob Devlin, Maarten Bosma, Gaurav Mishra, Adam Roberts, Paul Barham, Hyung Won Chung, Charles Sutton, Sebastian Gehrmann, Parker Schuh, Kensen Shi, Sasha Tsvyashchenko, Joshua Maynez, Abhishek Rao, Parker Barnes, Yi Tay, Noam Shazeer, Vinodkumar Prabhakaran, Emily Reif, Nan Du, Ben Hutchinson, Reiner Pope, James Bradbury, Jacob Austin, Michael Isard, Guy Gur-Ari, Pengcheng Yin, Toju Duke, Anselm Levskaya, Sanjay Ghemawat, Sunipa Dev, Henryk Michalewski, Xavier Garcia, Vedant Misra, Kevin Robinson, Liam Fedus, Denny Zhou, Daphne Ippolito, David Luan, Hyeontaek Lim, Barret Zoph, Alexander Spiridonov, Ryan Sepassi, David Dohan, Shivani Agrawal, Mark Omernick, Andrew M. Dai, Thanumalayan Sankaranarayana Pillai, Marie Pellat, Aitor Lewkowycz, Erica Moreira, Rewon Child, Oleksandr Polozov, Katherine Lee, Zongwei Zhou, Xuezhi Wang, Brennan Saeta, Mark Diaz, Orhan Firat, Michele Catasta, Jason Wei, Kathy Meier-Hellstern, Douglas Eck, Jeff Dean, Slav Petrov, and Noah Fiedel. Palm: Scaling language modeling with pathways. arXiv preprint arXiv:2204.02311, 2022.

- [74] Hyung Won Chung, Le Hou, Shayne Longpre, Barret Zoph, Yi Tay, William Fedus, Eric Li, Xuezhi Wang, Mostafa Dehghani, Siddhartha Brahma, Albert Webson, Shixiang Shane Gu, Zhuyun Dai, Mirac Suzgun, Xinyun Chen, Aakanksha Chowdhery, Sharan Narang, Gaurav Mishra, Adams Yu, Vincent Zhao, Yanping Huang, Andrew Dai, Hongkun Yu, Slav Petrov, Ed H. Chi, Jeff Dean, Jacob Devlin, Adam Roberts, Denny Zhou, Quoc V. Le, and Jason Wei. Scaling instruction-finetuned language models. arXiv preprint arXiv:2210.11416, 2022.

- [75] Jiafei Duan, Samson Yu, Hui Li Tan, Hongyuan Zhu, and Cheston Tan. A survey of embodied AI: from simulators to research tasks. IEEE Trans. Emerg. Top. Comput. Intell., 6(2):230–244, 2022.

- [76] Dhruv Batra, Angel X. Chang, Sonia Chernova, Andrew J. Davison, Jia Deng, Vladlen Koltun, Sergey Levine, Jitendra Malik, Igor Mordatch, Roozbeh Mottaghi, Manolis Savva, and Hao Su. Rearrangement: A challenge for embodied ai. arXiv preprint arXiv:2011.01975, 2020.