
Traveling Salesman Problem using Genetic Algorithm

Prerequisites: Genetic Algorithm, Travelling Salesman Problem. In this article, a genetic algorithm is proposed to solve the travelling salesman problem. Genetic algorithms are heuristic search algorithms inspired by the process that drives the evolution of life. The algorithm is designed to replicate natural selection across generations, i.e. the survival of the fittest. Standard genetic algorithms are divided into five phases:

- Creating initial population.

- Calculating fitness.

- Selecting the best genes.

- Crossing over.

- Mutating to introduce variations.

These algorithms can be used to solve many types of optimization problems. One such problem is the Traveling Salesman Problem: a salesman is given a set of cities and has to find the shortest route that visits each city exactly once and returns to the starting city.

Approach: In the following implementation, cities are taken as genes, and a string generated from these characters is called a chromosome. The fitness score of a chromosome is the length of the path it describes: the shorter the path, the fitter the chromosome. The fittest chromosomes in the gene pool survive the population test and move to the next iteration. The number of iterations depends on the value of a cooling variable, which decreases with each iteration until it reaches a threshold.
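The approach above can be sketched in Python as follows. This is an illustration, not the article's own implementation: the route encoding (start city repeated at both ends), the use of `math.inf` in place of INT_MAX, the starting temperature, threshold and 0.9 cooling factor, and the rule for occasionally keeping a worse child (borrowed from simulated annealing) are all assumptions.

```python
import math
import random

INF = math.inf  # plays the role of INT_MAX: "no direct road between two cities"

def path_length(route, dist):
    """Length of a route such as [0, 1, 3, 2, 4, 0]; lower is fitter."""
    return sum(dist[a][b] for a, b in zip(route, route[1:]))

def random_route(n, start=0):
    """A chromosome: the start city, the other cities shuffled, the start city again."""
    middle = [city for city in range(n) if city != start]
    random.shuffle(middle)
    return [start] + middle + [start]

def mutate(route):
    """Swap two interior cities; the first and last cells never move."""
    child = list(route)
    i, j = random.sample(range(1, len(route) - 1), 2)
    child[i], child[j] = child[j], child[i]
    return child

def genetic_tsp(dist, pop_size=10, temperature=10_000.0, threshold=1_000.0,
                cooling=0.9, seed=0):
    random.seed(seed)
    population = [random_route(len(dist)) for _ in range(pop_size)]
    while temperature > threshold:  # the cooling variable bounds the iterations
        next_population = []
        for parent in population:
            child = mutate(parent)
            old, new = path_length(parent, dist), path_length(child, dist)
            # Keep a child that is at least as fit; keep a worse (finite) one
            # only with probability exp(-(new - old) / temperature).
            if new <= old or (new < INF and
                              random.random() < math.exp(-(new - old) / temperature)):
                next_population.append(child)
            else:
                next_population.append(parent)
        population = next_population
        temperature *= cooling  # decreases every generation
    return min(population, key=lambda route: path_length(route, dist))

# Hypothetical 5-city matrix; INF marks city pairs with no direct road.
DIST = [
    [0, 2, INF, 12, 5],
    [2, 0, 4, 8, INF],
    [INF, 4, 0, 3, 3],
    [12, 8, 3, 0, 10],
    [5, INF, 3, 10, 0],
]
best = genetic_tsp(DIST)
print(best, path_length(best, DIST))
```

Because the temperature is multiplied by 0.9 each generation, the loop above runs a fixed number of generations determined by the ratio of starting temperature to threshold.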


How does mutation work? Suppose there are 5 cities: 0, 1, 2, 3, 4. The salesman is in city 0 and has to find the shortest route that travels through all the cities and back to city 0. The chosen path can be represented as a chromosome:

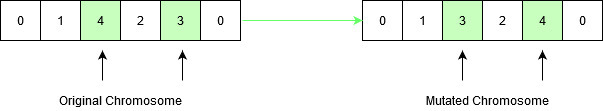

This chromosome undergoes mutation. During mutation, two cities in the chromosome swap positions to form a new configuration. The first and last cells are never swapped, as they represent the start and end points.

For the example input, the original chromosome has a path length of INT_MAX, since no path exists between city 1 and city 4. After mutation, the new child has a path length of 21, a much better answer than the original. This is how the genetic algorithm gradually improves solutions to hard problems.
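The swap step can be checked by hand with a few lines of Python. The distance matrix and the starting chromosome below are hypothetical, chosen so that cities 1 and 4 have no direct road as in the text; `math.inf` stands in for INT_MAX.

```python
import math

INF = math.inf  # stand-in for INT_MAX ("no road")

# Hypothetical 5-city matrix; there is no road between cities 1 and 4.
DIST = [
    [0, 2, INF, 12, 5],
    [2, 0, 4, 8, INF],
    [INF, 4, 0, 3, 3],
    [12, 8, 3, 0, 10],
    [5, INF, 3, 10, 0],
]

def path_length(route):
    """Sum of the distances between consecutive cities in the route."""
    return sum(DIST[a][b] for a, b in zip(route, route[1:]))

def swap_mutation(route, i, j):
    """Swap the cities at positions i and j; the start/end cells are off limits."""
    last = len(route) - 1
    if not (0 < i < last and 0 < j < last):
        raise ValueError("cannot move the start or end city")
    child = list(route)
    child[i], child[j] = child[j], child[i]
    return child

original = [0, 1, 4, 2, 3, 0]  # hypothetical chromosome that uses the missing 1-4 road
child = swap_mutation(original, 2, 4)
print(path_length(original), child, path_length(child))
# prints: inf [0, 1, 3, 2, 4, 0] 21
```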


Time complexity: O(n^2), as it uses nested loops to calculate the fitness value of each genome in the population. Auxiliary space: O(n).


Traveling Salesman Problem with Genetic Algorithms


The traveling salesman problem (TSP) is a famous problem in computer science. The problem might be summarized as follows: imagine you are a salesperson who needs to visit some number of cities. Because you want to minimize costs spent on traveling (or maybe you’re just lazy like I am), you want to find the most efficient route, one that requires the least amount of traveling. You are given the coordinates of the cities to visit on a map. How can you find the optimal route?

The most obvious solution would be the brute force method, where you consider all the different possibilities, calculate the total distance for each, and choose the shortest path. While this is a definite way to solve TSP, the issue with this approach is that it requires a lot of compute: the runtime of this brute force algorithm would be $O(n!)$, which is just utterly terrible.
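A brute-force search is short to write, which makes its cost easy to see: it enumerates every ordering of the cities. The sketch below assumes a distance matrix `dist` given as a list of lists, and measures an open path without returning to the start, matching the simplified TSP variant this post adopts later.

```python
from itertools import permutations

def brute_force_path(dist):
    """Return the shortest open path and its length by trying all n! orderings."""
    best_order, best_len = None, float("inf")
    for order in permutations(range(len(dist))):
        length = sum(dist[a][b] for a, b in zip(order, order[1:]))
        if length < best_len:
            best_order, best_len = list(order), length
    return best_order, best_len

# Four cities on a line at x = 0, 1, 2, 3, so the distance is |i - j|.
line = [[abs(i - j) for j in range(4)] for i in range(4)]
print(brute_force_path(line))  # ([0, 1, 2, 3], 3)
```

Even at 12 cities this loop already visits roughly 479 million orderings, which is why the post turns to a randomized approach.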

In this post, we will consider a more interesting way to approach TSP: genetic algorithms. As the name implies, genetic algorithms somewhat simulate an evolutionary process, in which the principle of the survival of the fittest ensures that only the best genes survive after many iterations of the evolutionary cycle across a number of generations. Genetic algorithms can be considered a sort of randomized algorithm, where we use random sampling to explore the search space broadly while trying to find the optimal solution. While genetic algorithms are neither the most efficient nor a guaranteed method of solving TSP, I thought it was a fascinating approach nonetheless, so here goes the post on TSP and genetic algorithms.

Problem and Setup

Before we dive into the solution, we need to first consider how we might represent this problem in code. Let’s take a look at the modules we will be using and the mode of representation we will adopt in approaching TSP.

The original, popular TSP requires that the salesperson also return to the starting point. In other words, if the salesman starts at city A, he has to visit all the rest of the cities and then return to city A. For the sake of simplicity, however, we don’t enforce this returning requirement in our modified version of TSP.

Below are the modules we will be using for this post. We will be using numpy, more specifically a lot of functions from numpy.random for things like sampling, choosing, or permuting. numpy arrays are also generally faster than normal Python lists since they support vectorization, which will certainly be beneficial when building our model. For reproducibility, let’s set the random seed to 42.

Representation

Now we need to consider the question of how we might represent TSP in code. Obviously, we will need some cities and some information on the distance between these cities.

One solution is to use adjacency matrices, somewhat similar to the adjacency list we looked at in the post on Breadth First and Depth First Search algorithms. The simple idea is that we can construct a matrix $A$ such that $A_{ij}$ represents the distance between cities $i$ and $j$. When $i=j$, therefore, $A_{ii}$ will be zero, since the distance from city $i$ to itself is trivially zero.

Here is an example of some adjacency matrix. For convenience purposes, we will represent cities by their indices.
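For illustration, here is a small, hypothetical adjacency matrix for five cities (the values are made up for this sketch). It is symmetric and has zeros on the diagonal, as described above; the seed line mirrors the post's choice of 42.

```python
import numpy as np

np.random.seed(42)  # fixed seed for reproducibility, as in the post

# Hypothetical distances: adjacency_mat[i, j] is the distance between cities i and j.
adjacency_mat = np.array([
    [ 0, 12, 20,  9, 14],
    [12,  0,  7, 15, 22],
    [20,  7,  0, 11, 18],
    [ 9, 15, 11,  0,  6],
    [14, 22, 18,  6,  0],
])

assert (adjacency_mat == adjacency_mat.T).all()  # distance i -> j equals j -> i
assert (np.diag(adjacency_mat) == 0).all()       # a city is 0 away from itself
print(adjacency_mat[1, 3])  # 15
```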

Genetic Algorithm

Now it’s time for us to understand how genetic algorithms work. Don’t worry, you don’t have to be a biology major to understand this; simple intuition will do.

The idea is that we can use some sort of randomized approach to generate an initial population, and drive an evolutionary cycle such that only superior genes survive successive iterations. You might be wondering what genes are in this context. Most typically, genes can be thought of as some representation of the solution we are trying to find. In this case, an encoding of the optimal path would be the gene we are looking for.

Evolution is a process that finds an optimal solution for survival through competition and mutation. Basically, the genes that have superior traits will survive, leaving offspring in the next generation. Those that are inferior will be unable to find a mate and perish, as sad as it sounds. Then how do these superior or inferior traits occur in the first place? The answer lies in random mutations. The children of one parent will not all have identical genes: due to mutation, which occurs by chance, some will acquire even more superior features that put them far ahead of their peers. Needless to say, such beneficiaries of positive mutation will survive and leave offspring, carrying their genes into the next generation. Those who experience harmful mutations, on the other hand, will not be able to survive.

In genetic algorithm engineering, we want to be able to simulate this process over an extended period of time without hard-coding our solution, such that the end result after hundreds or thousands of generations will hopefully contain the optimal solution. Of course, we can’t let the computer do everything: we still have to implement mutational procedures that define an evolutionary process. But more on that later. First, let’s begin with the simple task of modeling a population.

Implementation

First, let’s define a class to represent the population. I decided to go with a class-based implementation to attach pieces of information about a specific generation of population to that class object. Specifically, we can have things like bag to represent the full population, parents to represent the chosen, selected superior few, score to store the score of the best chromosome in the population, best to store the best chromosome itself, and adjacency_mat, the adjacency matrix that we will be using to calculate the distance in the context of TSP.

Here is a little snippet of code that we will be using to randomly generate the first generation of population.

Let’s see if everything works as expected by generating a dummy population.
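A minimal sketch of the class and the initializer described above might look like this. The attribute names follow the text; the `init_population` signature and the randomly generated symmetric matrix used for the dummy population are assumptions.

```python
import numpy as np

class Population:
    """One generation of chromosomes plus bookkeeping about its best member."""

    def __init__(self, bag, adjacency_mat):
        self.bag = bag                      # full population, one chromosome per row
        self.parents = []                   # the selected superior few
        self.score = 0                      # distance of the best chromosome
        self.best = None                    # the best chromosome itself
        self.adjacency_mat = adjacency_mat  # distances between cities

def init_population(cities, adjacency_mat, n_population):
    """First generation: each chromosome is a random permutation of the cities."""
    bag = np.asarray([np.random.permutation(cities) for _ in range(n_population)])
    return Population(bag, adjacency_mat)

np.random.seed(42)
cities = [0, 1, 2, 3, 4]
raw = np.random.rand(5, 5) * 100
adjacency_mat = (raw + raw.T) / 2   # make the dummy distances symmetric
np.fill_diagonal(adjacency_mat, 0)  # a city is 0 away from itself
pop = init_population(cities, adjacency_mat, 5)
print(pop.bag)
```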

Now we need some function that will determine the fitness of a chromosome. In the context of TSP, fitness is defined in very simple terms: the shorter the total distance, the fitter and more superior the chromosome. Recall that all the distance information we need is nicely stored in self.adjacency_mat. We can then sum the distances between every pair of adjacent cities in the chromosome sequence.
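Written as a free function (the post attaches it to the class; the standalone form and the small test matrix are assumptions), fitness is just a sum over consecutive pairs. Note that it does not add the leg back to the first city, in line with the modified TSP used here.

```python
import numpy as np

def fitness(chromosome, adjacency_mat):
    """Total distance of the path; the shorter, the fitter."""
    return sum(
        adjacency_mat[chromosome[i], chromosome[i + 1]]
        for i in range(len(chromosome) - 1)
    )

mat = np.array([[0, 3, 4],
                [3, 0, 5],
                [4, 5, 0]])
print(fitness([0, 1, 2], mat))  # 3 + 5 = 8
```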

Next, we evaluate the population. Simply put, evaluation amounts to calculating the fitness of each chromosome in the population, determining which one is best, storing the score information, and returning a probability vector in which the $i$th element is the probability that the $i$th chromosome in the population bag is chosen as a parent. We apply some basic preprocessing to ensure that the worst-performing chromosome has absolutely no chance of being selected.
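The evaluation step can be sketched as below. Here the "preprocessing" subtracts each distance from the largest one, so the longest path gets weight zero; that specific transform, the uniform fallback when every chromosome ties, and the tuple return value are assumptions for illustration.

```python
import numpy as np

def evaluate(bag, adjacency_mat):
    """Return (selection probabilities, best chromosome, best distance)."""
    distances = np.array(
        [sum(adjacency_mat[c[i], c[i + 1]] for i in range(len(c) - 1)) for c in bag],
        dtype=float,
    )
    best_idx = int(np.argmin(distances))
    if distances.max() > distances.min():
        # Shorter paths get larger weights; the longest path gets weight 0.
        weights = distances.max() - distances
    else:
        weights = np.ones_like(distances)  # all tied: select uniformly
    return weights / weights.sum(), bag[best_idx], distances[best_idx]

mat = np.array([[0, 3, 4], [3, 0, 5], [4, 5, 0]])
bag = [[0, 1, 2], [1, 0, 2], [0, 2, 1]]
probs, best, score = evaluate(bag, mat)
print(probs, best, score)
```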

When we call pop.evaluate(), we get a probability vector as expected. From the result, it appears that the last element is the best chromosome and the second chromosome in the population bag is the worst.

When we call pop.best, notice that we get the last element in the population, as previously anticipated.

We can also access the score of the best chromosome. In this case, the distance is said to be 86.25. Note that the lower the score, the better, since these scores represent the total distance a salesman has to travel to visit all the cities.

Now, we will select k parents to form the basis of the next generation. Here, we use a simple roulette model, where we compare a value of the probability vector with a random number sampled from a uniform distribution. If the value of the probability vector is higher, the corresponding chromosome is added to self.parents. We repeat this process until we have k parents.
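One way to code this roulette step is to repeatedly draw a random chromosome and keep it if its probability beats a uniform random number. Drawing the candidate index at random, the `rng` parameter, and the guard against an all-zero vector are assumptions of this sketch; the probabilities are the ones produced by the evaluation step.

```python
import numpy as np

def select(bag, probs, k=4, rng=None):
    """Collect k parents: a random candidate is kept if probs[idx] > U(0, 1)."""
    if rng is None:
        rng = np.random.default_rng()
    probs = np.asarray(probs, dtype=float)
    if not (probs > 0).any():
        raise ValueError("no chromosome can ever be selected")
    parents = []
    while len(parents) < k:
        idx = rng.integers(len(bag))
        if probs[idx] > rng.random():
            parents.append(bag[idx])
    return np.asarray(parents)

bag = np.array([[0, 1, 2], [1, 0, 2], [0, 2, 1]])
parents = select(bag, [1 / 3, 2 / 3, 0.0], k=4, rng=np.random.default_rng(0))
print(parents)  # four rows; [0, 2, 1] (probability 0) never appears
```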

As expected, we get 4 parents after selecting the parents through pop.select().

Now is the crucial part: mutation. There are different types of mutation schemes we can use for our model. Here, we use a simple swap and crossover mutation. As the name implies, swap simply involves swapping two elements of a chromosome. For instance, if we have [a, b, c], we might swap the first two elements to end up with [b, a, c].

The problem with swap mutation, however, is the fact that swapping is a very disruptive process in the context of TSP. Because each chromosome encodes the order in which a salesman has to visit each city, swapping two cities may greatly impact the final fitness score of that mutated chromosome.
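Swap mutation itself is only a few lines. This sketch (the `rng` parameter is an assumption) copies the chromosome first so that the parent stays intact:

```python
import numpy as np

def swap(chromosome, rng=None):
    """Swap mutation: exchange the cities at two distinct random positions."""
    if rng is None:
        rng = np.random.default_rng()
    a, b = rng.choice(len(chromosome), 2, replace=False)
    child = np.array(chromosome)  # copy, so the parent is left untouched
    child[a], child[b] = child[b], child[a]
    return child

parent = [0, 1, 2, 3, 4]
print(parent, swap(parent, np.random.default_rng(1)))
```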

Therefore, we also use another form of mutation, known as crossovers. In crossover mutation, we grab two parents. Then, we slice a portion of the chromosome of one parent, and fill the rest of the slots with that of the other parent. When filling the rest of the slots, we need to make sure that there are no duplicates in the chromosome. Let’s take a look at an example. Imagine one parent has [a, b, c, d, e] and the other has [b, a, e, c, d]. Let’s also say that slicing a random portion of the first parent gave us [None, b, c, None, None]. Then, we fill up the rest of the empty indices with the other parent, paying attention to the order in which elements occur. In this case, we would end up with [a, b, c, e, d]. Let’s see how this works.
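The crossover just described can be written as follows. The slice bounds are passed in explicitly here so that the worked example can be reproduced; in practice they would be drawn at random, so this interface is an assumption.

```python
def crossover(parent1, parent2, start, end):
    """Keep parent1[start:end] in place, then fill the empty slots with the
    remaining cities in the order they appear in parent2."""
    child = [None] * len(parent1)
    child[start:end] = parent1[start:end]
    kept = set(child[start:end])
    fill = (city for city in parent2 if city not in kept)  # no duplicates
    for i, slot in enumerate(child):
        if slot is None:
            child[i] = next(fill)
    return child

print(crossover(list("abcde"), list("baecd"), 1, 3))
# ['a', 'b', 'c', 'e', 'd']
```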

Now, we wrap the swap and crossover mutation into one nice function to call so that we perform each mutation according to some specified threshold.

Let’s test it on pop. When we call pop.mutate(), we end up with the population bag for the next generation, as expected.

Now it’s finally time to put it all together. For convenience, I’ve added some additional parameters such as print_interval or verbose, but for the most part, a lot of what is being done here should be familiar and straightforward. The gist of it is that we run a simulation of population selection and mutation over n_iter generations. The key part is children = pop.mutate(p_cross, p_mut) and pop = Population(children, pop.adjacency_mat). Basically, we obtain the children from the mutation and pass them to the Population constructor as the population bag of the next generation.
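A compact, self-contained version of this loop might look like the sketch below. It inlines evaluation, selection, crossover and swap mutation instead of using the `Population` class, drops `print_interval` and `verbose`, and the `selectivity` and `seed` parameters as well as all default values are assumptions rather than the post's settings.

```python
import numpy as np

def path_length(chromosome, mat):
    """Total distance of the path (no return to the start)."""
    return sum(mat[chromosome[i], chromosome[i + 1]] for i in range(len(chromosome) - 1))

def crossover(p1, p2, rng):
    """Keep a random slice of p1; fill the other slots in p2's order."""
    start, end = sorted(rng.choice(len(p1) + 1, 2, replace=False))
    kept = set(p1[start:end].tolist())
    child = np.full(len(p1), -1)
    child[start:end] = p1[start:end]
    child[child == -1] = [c for c in p2 if c not in kept]
    return child

def genetic_algorithm(cities, adjacency_mat, n_population=5, n_iter=20,
                      selectivity=0.15, p_cross=0.5, p_mut=0.1,
                      seed=42, return_history=False):
    rng = np.random.default_rng(seed)
    n_parents = max(2, int(selectivity * n_population))
    bag = [rng.permutation(cities) for _ in range(n_population)]
    best, best_score, history = None, np.inf, []
    for _ in range(n_iter):
        # Evaluate: shorter paths get larger selection probabilities.
        dists = np.array([path_length(c, adjacency_mat) for c in bag], dtype=float)
        i_best = int(np.argmin(dists))
        if dists[i_best] < best_score:
            best, best_score = bag[i_best].copy(), dists[i_best]
        history.append(best_score)
        spread = dists.max() > dists.min()
        weights = dists.max() - dists if spread else np.ones_like(dists)
        probs = weights / weights.sum()
        # Select: keep the current best, then roulette draws up to n_parents.
        parents = [bag[i_best]]
        while len(parents) < n_parents:
            idx = rng.integers(len(bag))
            if probs[idx] > rng.random():
                parents.append(bag[idx])
        # Mutate: children come from crossover (p_cross) and swaps (p_mut).
        children = []
        for _ in range(n_population):
            if rng.random() < p_cross:
                a, b = rng.choice(len(parents), 2, replace=False)
                child = crossover(parents[a], parents[b], rng)
            else:
                child = parents[rng.integers(len(parents))].copy()
            if rng.random() < p_mut:
                i, j = rng.choice(len(child), 2, replace=False)
                child[i], child[j] = child[j], child[i]
            children.append(child)
        bag = children  # the children become the next generation's bag
    return (best, history) if return_history else best

mat = np.array([[0, 12, 20, 9, 14], [12, 0, 7, 15, 22], [20, 7, 0, 11, 18],
                [9, 15, 11, 0, 6], [14, 22, 18, 6, 0]])
best, history = genetic_algorithm(list(range(5)), mat, n_iter=30, return_history=True)
print(best, history[-1])
```

Tracking the best chromosome seen so far means `history` never increases, which makes the convergence plots described below easy to read.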

Now let’s test it on our TSP example over 20 generations. As generations pass, the fitness score seems to improve, but not by a lot.

Let’s try running this over an extended period of time, namely 100 generations. For clarity, let’s also plot the progress of our genetic algorithm by setting return_history to True.

After something like 30 iterations, it seems like the algorithm has converged to the minimum, sitting at around 86.25. Apparently, the best way to travel the cities is to go in the order of [4, 1, 3, 2, 0].

Example Applications

But this was more of a contrived example. We want to see if this algorithm can scale. So let’s write some functions to generate city coordinates and corresponding adjacency matrices.

generate_cities() generates n_cities random city coordinates in the form of a numpy array. Now, we need some functions that will create an adjacency matrix based on the city coordinates.

Let’s perform a quick sanity check to see if make_mat() works as expected. Here, we give the vertices of a unit square as input to the function. While we’re at it, let’s also make sure that generate_cities() does indeed create city coordinates as expected.
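The two helpers can be sketched with numpy broadcasting. The `factor` scaling and the `rng` parameter of `generate_cities()` are assumptions; `make_mat()` computes Euclidean distances between every pair of cities, which the unit-square check confirms.

```python
import numpy as np

def generate_cities(n_cities, factor=10, rng=None):
    """Random (x, y) coordinates; the `factor` scaling is an assumption."""
    if rng is None:
        rng = np.random.default_rng()
    return rng.random((n_cities, 2)) * n_cities * factor

def make_mat(coordinates):
    """Euclidean distance between every pair of cities."""
    coords = np.asarray(coordinates, dtype=float)
    diff = coords[:, None, :] - coords[None, :, :]  # shape (n, n, 2)
    return np.sqrt((diff ** 2).sum(axis=-1))

square = [[0, 0], [0, 1], [1, 1], [1, 0]]
print(make_mat(square).round(3))       # sides are 1, diagonals are about 1.414
print(generate_cities(3, rng=np.random.default_rng(0)).shape)
```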

Now, we’re finally ready to use these functions to randomly generate city coordinates and use the genetic algorithm to find the optimal path using genetic_algorithm() with the appropriate parameters. Let’s run the algorithm for a few iterations and plot its history.

We can see that the genetic algorithm does seem to be optimizing the path as we expect, since the distance metric decreases throughout the iterations. Now, let’s try plotting the path along with the corresponding city coordinates. Here’s a helper function to plot the optimal path.

And calling this function, we obtain the following:

At a glance, it’s really difficult to see if this is indeed the optimal path, especially because the city coordinates were generated at random.

I therefore decided to create a much more contrived example, but with many coordinates, so that we can easily verify whether the path chosen by the algorithm is indeed the optimal path. Namely, we will arrange the city coordinates on a semi-circle, using the very familiar equation $x^2 + y^2 = r^2$ with $y \geq 0$.

Let’s create 100 such fake cities and run the genetic algorithm to optimize the path. If the algorithm does successfully find an optimal path, it will be a single curve from one end of the semi-circle fully connected all the way up to its other end.
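A sketch of that setup, assuming a unit radius and cities spaced evenly in $x$ (both assumptions), also makes it easy to compute the length of the in-order path the algorithm should ideally find:

```python
import numpy as np

def semicircle_cities(n_cities, radius=1.0):
    """Cities on y = sqrt(r^2 - x^2), listed from x = -r to x = r."""
    x = np.linspace(-radius, radius, n_cities)
    y = np.sqrt(np.clip(radius ** 2 - x ** 2, 0.0, None))  # clip guards round-off
    return np.column_stack([x, y])

def path_length(order, coords):
    """Euclidean length of visiting coords in the given order (no return)."""
    points = coords[np.asarray(order)]
    return np.linalg.norm(np.diff(points, axis=0), axis=1).sum()

coords = semicircle_cities(100)
in_order = path_length(np.arange(100), coords)
shuffled = path_length(np.random.default_rng(0).permutation(100), coords)
print(f"in order: {in_order:.4f}, shuffled: {shuffled:.4f}")
```

Since each step of the in-order path is a chord of the semi-circle, its total length stays just below the arc length $\pi r$.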

The algorithm seems to have converged, but the returned best does not seem to be the optimal path, as it is not a sorted array from 0 to 99 (or its reverse) as we expect. Plotting this result, the fact that the algorithm hasn’t quite found the most optimal solution becomes clearer. This point notwithstanding, it is still worth noting that the algorithm has found what might be referred to as optimal segments: notice that there are some segments of the path that contain consecutive numbers, which is what we would expect to see in the optimal path.

An optimal path would look as follows.

Comparing the two, we see that the path returned by the genetic algorithm does contain some wasted traveling routes, namely the chords between certain non-adjacent cities. Nonetheless, many of the adjacent cities are connected (hence the term optimal segments). Considering that there are a total of $100!$ possible orderings, the fact that the algorithm was able to narrow them down to a plausible route that beats the baseline is still very interesting.

Genetic algorithms belong to a larger group of algorithms known as randomized algorithms. Prior to learning about genetic algorithms, the word “randomized algorithms” seemed more like a mysterious black box. After all, how can an algorithm find an answer to a problem using pseudo-random number generators, for instance? This post was a great opportunity to think more about this naive question through a concrete example. Moreover, it was also interesting to think about the traveling salesman problem, which is a problem that appears so simple and easy, belying the true level of difficulty under the surface.

There are many other ways to approach TSP, and genetic algorithms are just one of the many approaches we can take. It is also not the most effective way, as iterating over generations and generations can often take a lot of time. The contrived semi-circle example, for instance, took somewhere around five to ten minutes to fully run on my 13-inch MacBook Pro. Nonetheless, I think it is an interesting approach, well worth the time and effort spent on implementation.

I hope you’ve enjoyed reading this post. Catch you in the next one!


Optimization of Saleman Travelling Problem Using Genetic Algorithm with Combination of Order and Random Crossover



Travelling Salesman Problem using Genetic Algorithm

The Travelling Salesman Problem (TSP) asks for the shortest route that visits every city in a collection exactly once and returns to the starting point. Because of its combinatorial nature, with the number of possible routes growing factorially as cities are added, it is a difficult task. The Genetic Algorithm (GA) is a heuristic inspired by genetics that tackles the TSP by emulating natural selection. The GA represents candidate city tours as routes, and selection, crossover, and mutation evolve the population. Selection favours routes with higher fitness, i.e. routes of higher quality that are closer to the ideal solution. Crossover mixes genetic information from parent routes to produce children, whereas mutation introduces random modifications to explore new regions of the solution space.

Methods Used

Genetic algorithm.

The Genetic Algorithm (GA) is a powerful heuristic inspired by natural selection and genetics. It mimics evolution to find good TSP solutions efficiently. Each route in the GA is a possible solution, and its fitness measures the route's quality, i.e. how close it is to optimal. The GA develops new populations by selecting routes with higher fitness values and crossing and mutating them.

Define cities, maximum generations, population size, and mutation rate.

Define a city structure with x and y coordinates.

Define a route structure with a vector of city indices (path) and fitness value.

Create a method to determine city distances using coordinates.

Create a random route function by swapping city indices.

Create a function that sums city distances to calculate route fitness.

Create a function to crossover two parent routes to create a child route.

Create a function that mutates a route by swapping cities according to the mutation rate.

Implement a function that finds the fittest route in the population.
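The steps above can be sketched in Python as follows. The order-preserving crossover, the size-3 tournament used to pick parents, carrying the best route over unchanged each generation, and all default parameter values are assumptions of this sketch. Fitness is taken to be the closed-tour length, so the fittest route is the one with the smallest value.

```python
import math
import random
from dataclasses import dataclass

@dataclass
class City:
    x: float
    y: float

@dataclass
class Route:
    path: list            # city indices in visiting order
    fitness: float = 0.0  # total tour length (lower is better)

def distance(a, b):
    return math.hypot(a.x - b.x, a.y - b.y)

def random_route(n):
    path = list(range(n))
    for i in range(n - 1, 0, -1):  # Fisher-Yates: shuffle by swapping indices
        j = random.randint(0, i)
        path[i], path[j] = path[j], path[i]
    return Route(path)

def evaluate(route, cities):
    p = route.path
    route.fitness = sum(distance(cities[p[i]], cities[p[(i + 1) % len(p)]])
                        for i in range(len(p)))  # includes the leg back home
    return route.fitness

def crossover(a, b):
    i, j = sorted(random.sample(range(len(a.path) + 1), 2))
    middle = a.path[i:j]                           # slice kept from parent a
    rest = [c for c in b.path if c not in middle]  # others in parent b's order
    return Route(rest[:i] + middle + rest[i:])

def mutate(route, rate):
    for i in range(len(route.path)):
        if random.random() < rate:  # each position swaps with probability `rate`
            j = random.randrange(len(route.path))
            route.path[i], route.path[j] = route.path[j], route.path[i]

def fittest(routes):
    return min(routes, key=lambda r: r.fitness)

def solve(cities, population_size=50, max_generations=200, mutation_rate=0.02, seed=1):
    random.seed(seed)
    population = [random_route(len(cities)) for _ in range(population_size)]
    for route in population:
        evaluate(route, cities)
    for _ in range(max_generations):
        next_gen = [fittest(population)]  # carry the best route over unchanged
        while len(next_gen) < population_size:
            parent_a = fittest(random.sample(population, 3))  # tournament of 3
            parent_b = fittest(random.sample(population, 3))
            child = crossover(parent_a, parent_b)
            mutate(child, mutation_rate)
            evaluate(child, cities)
            next_gen.append(child)
        population = next_gen
    return fittest(population)

square = [City(0, 0), City(0, 1), City(1, 1), City(1, 0)]
best = solve(square)
print(best.path, round(best.fitness, 6))
```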

In conclusion, the Genetic Algorithm (GA) can be applied to the Travelling Salesman Problem (TSP) and other combinatorial optimisation problems. Drawing on concepts from genetics and evolution, the GA iteratively searches a large space of viable solutions, improving route fitness until it arrives at a good solution. The GA handles the TSP by balancing exploration and exploitation: selection, crossover, and mutation promote better routes while preserving variety in the population, which helps the GA search the solution space effectively and reduces the risk of getting stuck in local optima.


Traveling Salesman Problem with Genetic Algorithms in Java

- Introduction

Genetic algorithms are part of a family of algorithms for global optimization called Evolutionary Computation, which comprises artificial intelligence metaheuristics with randomization inspired by biology.

In the previous article, Introduction to Genetic Algorithms in Java, we've covered the terminology and theory behind all of the things you'd need to know to successfully implement a genetic algorithm.

- Implementing a Genetic Algorithm

To showcase what we can do with genetic algorithms, let's solve The Traveling Salesman Problem (TSP) in Java.

TSP formulation: A traveling salesman needs to go through n cities to sell his merchandise. There's a road between every pair of cities, but some roads are longer and more dangerous than others. Given the cities and the cost of traveling between each pair of cities, what's the cheapest way for the salesman to visit all of the cities and come back to the starting city, without passing through any city twice?

Although this may seem like a simple feat, it's worth noting that this is an NP-hard problem: no algorithm is known that solves it in polynomial time. Genetic algorithms can only approximate the solution, with no guarantee of finding the optimum.

Because the solution is rather long, I'll be breaking it down function by function to explain it here. If you want to preview and/or try the entire implementation, you can find the IntelliJ project on GitHub.

- Genome Representation

First, we need an individual to represent a candidate solution. Logically, for this we'll use a class to store the random generation, fitness function, the fitness itself, etc.

To make it easier to calculate fitness for individuals and compare them, we'll also make it implement Comparable:

Despite using a class, what our individual essentially is will be only one of its attributes. If we think of TSP, we could enumerate our cities from 0 to n-1 . A solution to the problem would be an array of cities so that the cost of going through them in that order is minimized.

For example, 0-3-1-2-0. We can store that in an ArrayList because the Collections Framework makes it really convenient, but you can use any array-like structure.

The attributes of our class are as follows:

When it comes to constructors we'll make two - one that makes a random genome, and one that takes an already made genome as an argument:

- Fitness Function

You may have noticed that we called the calculateFitness() method to assign a fitness value to the object attribute during construction. The function works by following the path laid out in the genome through the price matrix, and adding up the cost.

The fitness turns out to be the actual cost of taking a certain path. We'll want to minimize this cost, so we'll be facing a minimization problem:
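Expressed in Python rather than the article's Java, the fitness calculation can be sketched as below. The starting city is implicit, so the genome holds the other `numberOfCities - 1` cities; the function name, the 4-city price matrix, and sorting by fitness as a stand-in for `Comparable` are assumptions of this sketch.

```python
def calculate_fitness(genome, travel_prices, starting_city):
    """Cost of starting_city -> genome[0] -> ... -> genome[-1] -> starting_city."""
    cost = travel_prices[starting_city][genome[0]]
    for a, b in zip(genome, genome[1:]):
        cost += travel_prices[a][b]
    return cost + travel_prices[genome[-1]][starting_city]

# Hypothetical symmetric prices for 4 cities (0s on the diagonal).
travel_prices = [
    [0, 5, 9, 4],
    [5, 0, 3, 7],
    [9, 3, 0, 8],
    [4, 7, 8, 0],
]
print(calculate_fitness([3, 1, 2], travel_prices, 0))  # path 0-3-1-2-0: 4 + 7 + 3 + 9 = 23

# Lower cost sorts first, mirroring a compareTo() based on fitness.
genomes = [[3, 1, 2], [1, 2, 3], [2, 1, 3]]
ranked = sorted(genomes, key=lambda g: calculate_fitness(g, travel_prices, 0))
print(ranked[0])  # [1, 2, 3], cost 20
```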

- The Genetic Algorithm Class

The heart of the algorithm will take place in another class, called TravelingSalesman. This class will perform our evolution, and all of the other functions will be contained within it:

- Generation size is the number of genomes/individuals in each generation/population. This parameter is also often called the population size.

- Genome size is the length of the genome ArrayList, which will be equal to numberOfCities-1, since the starting city is implicit and not stored in the genome. The two variables are kept separate for clarity in the rest of the code. This parameter is also often called the chromosome length.

- Reproduction size is the number of genomes that will be selected to reproduce to make the next generation. This parameter is also often called the mating pool size.

- Max iteration is the maximum number of generations the program will evolve before terminating, in case there's no convergence before then.

- Mutation rate refers to the frequency of mutations when creating a new generation.

- Travel prices is a matrix of the prices of travel between each pair of cities. This matrix will have 0s on the diagonal and symmetrical values in its lower and upper triangles.

- Starting city is the index of the starting city.

- Target fitness is the fitness the best genome has to reach according to the objective function (which will in our implementation be the same as the fitness function) for the program to terminate early. Sometimes setting a target fitness can shorten a program if we only need a specific value or better. Here, if we want to keep our costs below a certain number, but don't care how low exactly, we can use it to set that threshold.

- Tournament size is the size of the tournament for tournament selection.

- Selection type will determine the type of selection we're using. We'll implement both roulette and tournament selection. Here's the enum for SelectionType:

Although the tournament selection method prevails in most cases, there are situations where you'd want to use other methods. Since a lot of genetic algorithms use the same codebase (the individuals and fitness functions change), it's good practice to add more options to the algorithm.

We'll be implementing both roulette and tournament selection:
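A Python sketch of both schemes for this minimization problem follows. Because lower cost is better here, the roulette weights use 1 / cost; that weighting, the function signatures, and passing the `random` module as the source of randomness are assumptions, not the article's Java code.

```python
import random

def roulette_selection(population, fitness, rng=random):
    """Pick one genome with probability proportional to 1 / cost (costs must be > 0)."""
    weights = [1.0 / fitness(g) for g in population]
    return rng.choices(population, weights=weights, k=1)[0]

def tournament_selection(population, fitness, tournament_size, rng=random):
    """Pick tournament_size genomes at random; the cheapest one wins."""
    contenders = rng.sample(population, tournament_size)
    return min(contenders, key=fitness)

costs = [5, 1, 9, 3]  # toy genomes whose fitness is simply their value
print(tournament_selection(costs, lambda g: g, 2), roulette_selection(costs, lambda g: g))
```

Larger tournaments increase selection pressure: with `tournament_size` equal to the population size, the best genome always wins.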

The crossover for TSP is atypical. Because each genome is a permutation of the list of cities, we can't just crossover two parents conventionally. Look at the following example (the starting city 0 is implicitly the first and last step):

2-4-3|1-6-5

4-6-5|3-1-2

What would happen if we crossed these two at the point denoted with a | ?

2-4-3-3-1-2


4-6-5-1-6-5

Uh-oh. These don't go through all the cities and they visit some cities twice, violating multiple conditions of the problem.

So if we can't use conventional crossover, what do we use?

The technique we'll be using is called Partially Mapped Crossover, or PMX for short. PMX randomly picks one crossover point, but unlike one-point crossover, it doesn't simply exchange segments between the two parents; instead, it swaps elements within each parent. I find that the process is most comprehensible from an illustration, and we can use the example we've previously had trouble with:

As can be seen here, we swap the ith element of one of the parents with the element equal in value to the ith element of the other. By doing this, we preserve the properties of permutations. We repeat this process to create the second child as well (starting again from the original values of the parent genomes):
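The swap-based procedure described here can be sketched in Python: for each position before the crossover point, the first child takes the other parent's value at that position by swapping it into place, and the second child does the same for the positions after the point. Passing the breakpoint explicitly instead of drawing it at random is an assumption that makes the earlier example reproducible.

```python
def pmx_children(parent1, parent2, breakpoint):
    """Swap-based partially mapped crossover; both children stay permutations."""
    child1 = list(parent1)
    for i in range(breakpoint):
        j = child1.index(parent2[i])  # where parent2's value sits in child1
        child1[i], child1[j] = child1[j], child1[i]
    child2 = list(parent2)            # built from the original parents
    for i in range(breakpoint, len(parent1)):
        j = child2.index(parent1[i])
        child2[i], child2[j] = child2[j], child2[i]
    return child1, child2

print(pmx_children([2, 4, 3, 1, 6, 5], [4, 6, 5, 3, 1, 2], 3))
# ([4, 6, 5, 1, 2, 3], [4, 3, 2, 1, 6, 5])
```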

Mutation is pretty straightforward - if we pass a probability check we mutate by swapping two cities in the genome. Otherwise, we just return the original genome:
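In Python, the same probability-gated swap might look like the sketch below; the function name and the `rng` parameter are assumptions. A copy is mutated so the original genome is never modified in place.

```python
import random

def mutate(genome, mutation_rate, rng=random):
    """With probability mutation_rate, swap two random cities; else return the genome."""
    if rng.random() < mutation_rate:
        genome = list(genome)  # copy before changing it
        i, j = rng.sample(range(len(genome)), 2)
        genome[i], genome[j] = genome[j], genome[i]
    return genome

print(mutate([1, 2, 3, 4], 0.0), mutate([1, 2, 3, 4], 1.0))
```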

- Generation Replacement Policies

We're using a generational algorithm, so we make an entirely new population of children:

- Termination

We terminate under the following conditions:

- the number of generations has reached maxIterations

- the best genome's path length is lower than the target path length
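A generic sketch of this generational loop with both stopping conditions follows. `next_generation` stands for whatever builds an entirely new population from the current one (selection, crossover, mutation); the function and parameter names are hypothetical, and keeping the best genome seen so far is a choice of this sketch.

```python
def evolve(initial_generation, fitness, next_generation, max_iterations, target_fitness):
    """Replace the whole population each generation; stop after max_iterations
    generations or once the best genome's cost drops below target_fitness."""
    generation = list(initial_generation)
    best = min(generation, key=fitness)
    for _ in range(max_iterations):
        if fitness(best) < target_fitness:
            break
        generation = next_generation(generation)  # an entirely new population
        best = min(best, min(generation, key=fitness), key=fitness)
    return best

# Toy run: each "generation" lowers every cost by 1.
print(evolve([10], lambda g: g, lambda gen: [g - 1 for g in gen],
             max_iterations=100, target_fitness=3))  # 2
```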

- Running time

The best way to evaluate if this algorithm works properly is to generate some random problems for it and evaluate the run-time:

Our average running time is 51972ms, which is about 52 seconds. This is when the input is four cities long, meaning we'd have to wait longer for larger numbers of cities. This may seem like a lot, but implementing a genetic algorithm takes significantly less time than coming up with a perfect solution for a problem.

While this specific problem could be solved using another method, certain problems can't.

For example, NASA used a genetic algorithm to generate the optimal shape of a spacecraft antenna for the best radiation pattern.

- Genetic Algorithms for Optimizing Genetic Algorithms?

As an interesting aside, genetic algorithms are sometimes used to optimize themselves: you create a genetic algorithm that runs another genetic algorithm, uses its execution speed and output as its fitness, and adjusts its parameters to maximize performance.

A similar technique is used in NeuroEvolution of Augmenting Topologies, or NEAT, where a genetic algorithm continuously improves a neural network and suggests how to change its structure to accommodate new environments.

Genetic algorithms are a powerful and convenient tool. They may not be as fast as solutions crafted specifically for the problem at hand, and we may not have much in the way of mathematical proof of their effectiveness, but they can be applied to almost any search problem, and they are not too difficult to master and apply. And as a cherry on top, they're endlessly fascinating to implement when you think of the evolutionary processes they're based on and how you're the mastermind behind a mini-evolution of your own.

If you want to play further with the TSP implemented in this article, this is a reminder that you can find it on GitHub. It has some handy functions for printing out generations, travel costs, generating random travel costs for a given number of cities, etc., so you can test how it works on different input sizes, or even meddle with attributes such as mutation rate, tournament size, and similar.


© 2013- 2024 Stack Abuse. All rights reserved.


- Comput Intell Neurosci

- v.2017; 2017

Genetic Algorithm for Traveling Salesman Problem with Modified Cycle Crossover Operator

Abid Hussain

1 Department of Statistics, Quaid-i-Azam University, Islamabad, Pakistan

Yousaf Shad Muhammad

M. Nauman Sajid

2 Department of Computer Science, Foundation University, Islamabad, Pakistan

Ijaz Hussain

Alaa Mohamd Shoukry

3 Arriyadh Community College, King Saud University, Riyadh, Saudi Arabia

4 KSA Workers University, El Mansoura, Egypt

Showkat Gani

5 College of Business Administration, King Saud University, Muzahimiyah, Saudi Arabia

Genetic algorithms are evolutionary techniques used for optimization according to the survival-of-the-fittest idea. These methods do not guarantee optimal solutions; however, they usually give good approximations in reasonable time. Genetic algorithms are useful for NP-hard problems, especially the traveling salesman problem. A genetic algorithm depends on its selection criteria and its crossover and mutation operators. To tackle the traveling salesman problem with genetic algorithms, there are various representations, such as binary, path, adjacency, ordinal, and matrix representations. In this article, we propose a new crossover operator for the traveling salesman problem to minimize the total distance. This approach is linked with the path representation, which is the most natural way to represent a legal tour. Computational results are also reported for some traditional path-representation methods, such as partially mapped and order crossovers, along with the new cycle crossover operator, on some benchmark TSPLIB instances, and improvements were found.

1. Introduction

Genetic algorithms (GAs) are derivative-free stochastic approaches based on biological evolutionary processes, proposed by Holland [ 1 ]. In nature, the fittest individuals are the most likely to survive and mate; therefore, the next generation should be healthier and fitter than the previous one. Much work on GAs and their applications is covered in the frequently cited book by Goldberg [ 2 ]. GAs work with a population of chromosomes, each encoding a set of underlying parameters.

The traveling salesman problem (TSP) is one of the most famous, significant, and historic benchmarks, and a very hard combinatorial optimization problem. TSP was documented by Euler in 1759, whose interest was in solving the knight's tour problem [ 3 ]. It is a fundamental problem in computer science, engineering, operations research, discrete mathematics, graph theory, and so forth. TSP can be described as minimizing the total distance traveled when touring all cities exactly once and returning to the depot city. TSPs are classified into two groups on the basis of the structure of the distance matrix: symmetric and asymmetric. The TSP is symmetric if $c_{ij} = c_{ji}$ for all $i, j$, where $i$ and $j$ index the rows and columns of the distance (cost) matrix, respectively; otherwise, it is asymmetric. For $n$ cities, once the starting city is fixed, there are $(n-1)!$ possible tours for an asymmetric TSP and half as many, $(n-1)!/2$, for a symmetric TSP. With only 10 cities, there are already 362,880 and 181,440 tours for the asymmetric and symmetric TSP, respectively. This factorial growth makes exhaustive search impractical even for modest $n$, and TSP is NP-hard. TSP has many applications, such as a variety of routing and scheduling problems, computer wiring, movement of people, X-ray crystallography [ 4 ], automatic drilling of printed circuit boards, and threading of scan cells in testable Very-Large-Scale-Integrated (VLSI) circuits [ 5 ].
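The tour counts above follow directly from (n - 1)! for the asymmetric case and (n - 1)!/2 for the symmetric case, since a tour and its reverse have the same length. A quick Python check; the helper name `tour_counts` is ours:

```python
from math import factorial


def tour_counts(n: int) -> tuple:
    """Distinct tours for n cities with the starting city fixed:
    (n - 1)! for an asymmetric TSP and half of that for a symmetric TSP."""
    asymmetric = factorial(n - 1)
    return asymmetric, asymmetric // 2


print(tour_counts(10))  # (362880, 181440)
```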

Over the last three decades, TSP has received considerable attention, and various approaches have been proposed to solve it, such as branch and bound [ 6 ], cutting planes [ 7 ], 2-opt [ 8 ], particle swarm [ 9 ], simulated annealing [ 10 ], ant colony [ 11 , 12 ], neural networks [ 13 ], tabu search [ 14 ], and genetic algorithms [ 3 , 15 – 17 ]. Some of these methods are exact, while others are heuristic. A comprehensive study of GA approaches successfully applied to the TSP is given in [ 18 ]. A survey of GA approaches for the TSP was presented by Potvin [ 17 ]. Ahmed proposed a new sequential constructive crossover that generates high-quality solutions to the TSP [ 19 ]. Nagata and Soler proposed a new genetic algorithm for the asymmetric TSP [ 20 ]. Deep and Adane presented three new variations of order crossover with improvements [ 21 ]. Ghadle and Muley presented a modified one's algorithm with MATLAB programming to solve the TSP [ 22 ]. Piwonska combined a profit-based genetic algorithm with the TSP and obtained good results when testing it on networks of cities in some voivodeships of Poland [ 23 ]. Kumar et al. presented a comparative analysis of different crossover operators for the TSP and showed that partially mapped crossover gives the shortest path [ 24 ]. A simple, pure genetic algorithm can be defined by the following steps.

1. Create an initial population of P chromosomes.

2. Evaluate the fitness of each chromosome.

3. Choose P/2 parents from the current population via proportional selection.

4. Randomly select two parents to create offspring using a crossover operator.

5. Apply mutation operators for minor changes in the results.

6. Repeat Steps 4 and 5 until all parents are selected and mated.

7. Replace the old population of chromosomes with the new one.

8. Evaluate the fitness of each chromosome in the new population.

9. Terminate if the number of generations meets some upper bound; otherwise, go to Step 3.
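Step 3 leaves the exact form of proportional selection open. The sketch below assumes roulette-wheel selection with fitness defined as 1 / tour length (a common convention for minimization; the text does not state which transform it uses) and samples with replacement via Python's `random.choices`. The function name `proportional_selection` is hypothetical.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def proportional_selection(population: Sequence[T], lengths: Sequence[float],
                           k: int, rng=random) -> List[T]:
    """Roulette-wheel selection for a minimization problem: each chromosome's
    slice of the wheel is proportional to 1 / tour length, so shorter tours
    are picked more often. Lengths must be positive; sampling is with
    replacement."""
    fitness = [1.0 / length for length in lengths]
    return rng.choices(population, weights=fitness, k=k)


population = [[0, 1, 2, 3], [0, 2, 1, 3], [0, 1, 3, 2], [0, 3, 2, 1]]
lengths = [10.0, 20.0, 40.0, 80.0]
parents = proportional_selection(population, lengths, k=len(population) // 2)
```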

Selection, crossover, and mutation are the major operators, but crossover plays a vital role in GAs. Many crossover operators have been proposed in the literature, and each has its own significance. In this article, we propose a new crossover operator for TSP that, like the existing cycle crossover operator, moves between the two selected parents. In Section 2 , we present crossover operators for TSP; in Section 3 , we propose a new crossover operator for path representation; computational experiments and discussion are in Section 4 ; and the conclusion is in Section 5 .

2. Crossover Operators for TSP

In the literature, there are many representations for solving the TSP with GAs; among these, the binary, path, adjacency, ordinal, and matrix representations are important. Further types of these representations are given in Table 1 . We limit ourselves to the path representation, which is the most natural and legal way to represent a tour, and skip the other representations.

Summary of crossover operators for TSP.

2.1. Path Representation

The most natural way to present a legal tour is probably by using path representation. For example, a tour 1 → 4 → 8 → 2 → 5 → 3 → 6 → 7 can be represented simply as (1 4 8 2 5 3 6 7).
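In path representation, a chromosome's fitness is usually derived from the length of the closed tour it encodes. A minimal sketch, assuming cities are 0-based indices into a distance matrix `dist` (the labels 1 to 8 above would be shifted down by one); the matrix here is made up for illustration:

```python
from typing import Sequence


def tour_length(tour: Sequence[int], dist: Sequence[Sequence[float]]) -> float:
    """Total length of a closed tour in path representation: travel between
    consecutive cities in the listed order, then from the last city back to
    the first. Uses dist[from][to], so asymmetric matrices work too."""
    n = len(tour)
    return sum(dist[tour[i]][tour[(i + 1) % n]] for i in range(n))


dist = [
    [0, 1, 2, 3],
    [1, 0, 4, 5],
    [2, 4, 0, 9],
    [3, 5, 9, 0],
]
print(tour_length([0, 1, 2, 3], dist))  # 17
print(tour_length([0, 2, 1, 3], dist))  # 14
```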

Because the TSP is combinatorial, classical crossover operators such as one-point, two-point, and uniform crossover are not suitable for the path representation, since they can produce illegal tours. We therefore consider only the partially mapped, order, and cycle crossover operators for path representation, which are the most widely used in the literature and against which we can compare our proposed crossover operator.
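A small Python illustration of why classical one-point crossover is unsuitable for path representation; the parents and the helper names are made up for this example:

```python
from typing import List, Sequence


def one_point_crossover(p1: Sequence[int], p2: Sequence[int], cut: int) -> List[int]:
    """Classical one-point crossover: head of p1 followed by tail of p2."""
    return list(p1[:cut]) + list(p2[cut:])


def is_valid_tour(tour: Sequence[int], n: int) -> bool:
    """A path-representation tour is legal iff it is a permutation of 0..n-1."""
    return sorted(tour) == list(range(n))


child = one_point_crossover([0, 1, 2, 3, 4], [4, 3, 2, 1, 0], 2)
print(child, is_valid_tour(child, 5))  # [0, 1, 2, 1, 0] False
```

Cities 0 and 1 appear twice and cities 3 and 4 are missing, so the child is not a legal tour.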

2.1.1. Partially Mapped Crossover Operator

The partially mapped crossover (PMX) was proposed by Goldberg and Lingle [ 25 ]. Two random cut points are chosen on the parents; the portion of one parent's string between the cut points is mapped onto the other parent's string, and the remaining information is exchanged. Consider, for example, the following two parent tours, with one random cut point between the 3rd and 4th bits and another between the 6th and 7th bits (the two cut points are marked with “∣”):

The mapping sections are between the cut points. In this example, the mappings are 2↔1, 7↔6, and 1↔8. First, the two mapping sections are exchanged between the parents to start the offspring:

Then we fill in the remaining bits from the original parents wherever there is no conflict:

Hence, the first × in the first offspring should be 8, which comes from the first parent, but 8 is already in this offspring; so we check the mapping 1↔8 and find that 1 is also already in this offspring, so we check the mapping 2↔1, and 2 occupies the first ×. Similarly, the second × in the first offspring should be 6, which comes from the first parent, but 6 already exists in this offspring; we check the mapping 7↔6, so 7 occupies the second ×. Thus offspring 1 is

Analogously, we complete the second offspring as well:
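A minimal Python sketch of two-cut-point PMX as described above. The parents are an illustrative pair chosen to be consistent with the walkthrough (mappings 2↔1, 7↔6, and 1↔8; the first conflicting bit 8 resolves to 2, and the second, 6, resolves to 7); the paper's figures are not reproduced here, and `pmx_child` is a hypothetical name. Cut points are Python slice bounds, so cuts after the 3rd and 6th bits become `a=3, b=6`.

```python
from typing import List, Sequence


def pmx_child(donor: Sequence[int], receiver: Sequence[int], a: int, b: int) -> List[int]:
    """Two-cut PMX: the child copies donor[a:b] (the mapping section) and takes
    every other position from `receiver`, resolving duplicates through the
    position-wise mapping donor[i] -> receiver[i] for a <= i < b."""
    child = [0] * len(receiver)
    child[a:b] = donor[a:b]
    mapping = {donor[i]: receiver[i] for i in range(a, b)}
    section = set(donor[a:b])
    for i in list(range(a)) + list(range(b, len(receiver))):
        gene = receiver[i]
        while gene in section:  # conflict: follow the mapping chain
            gene = mapping[gene]
        child[i] = gene
    return child


p1 = [3, 4, 8, 2, 7, 1, 6, 5]
p2 = [4, 2, 5, 1, 6, 8, 3, 7]
print(pmx_child(p2, p1, 3, 6))  # offspring 1: [3, 4, 2, 1, 6, 8, 7, 5]
print(pmx_child(p1, p2, 3, 6))  # offspring 2: [4, 8, 5, 2, 7, 1, 3, 6]
```

The chain-following loop always terminates: a bit taken from outside the receiver's section is never the target of the mapping, so the chain cannot cycle back to it.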

2.1.2. Order Crossover Operator

The order crossover (OX) was proposed by Davis [ 26 ]. It builds offspring by choosing a subtour of one parent and preserving the relative order of bits from the other parent. Consider, for example, the following two parent tours (with two random cut points marked by “∣”):

The offspring are produced in the following way. First, the bits between the cuts are copied into the offspring at the same positions, which gives

After this, starting from the second cut point of one parent, the bits from the other parent are copied in the same order, omitting bits that are already present. The sequence of bits in the second parent, starting from the second cut point, is “3 → 7 → 4 → 2 → 5 → 1 → 6 → 8.” After removing bits 2, 7, and 1, which are already in the first offspring, the new sequence is “3 → 4 → 5 → 6 → 8.” This sequence is placed in the first offspring starting from the second cut point:
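A matching Python sketch of OX, again with an illustrative pair of parents chosen to be consistent with the text (the second parent, read from the second cut point, gives 3, 7, 4, 2, 5, 1, 6, 8, and the first offspring keeps 2, 7, 1). The function name `ox_child` is hypothetical.

```python
from typing import List, Sequence


def ox_child(keeper: Sequence[int], other: Sequence[int], a: int, b: int) -> List[int]:
    """Order crossover (OX): copy keeper[a:b] into the child; then, starting at
    the second cut point b and wrapping around, fill the free positions with
    the bits of `other` in the order they appear from b onwards, skipping
    bits that are already in the child."""
    n = len(keeper)
    child = [0] * n
    child[a:b] = keeper[a:b]
    kept = set(keeper[a:b])
    order = [other[(b + k) % n] for k in range(n)]          # other's bits read from cut b
    fill = [bit for bit in order if bit not in kept]
    positions = [(b + k) % n for k in range(n - (b - a))]  # free slots, from cut b
    for pos, bit in zip(positions, fill):
        child[pos] = bit
    return child


p1 = [3, 4, 8, 2, 7, 1, 6, 5]
p2 = [4, 2, 5, 1, 6, 8, 3, 7]
print(ox_child(p1, p2, 3, 6))  # [5, 6, 8, 2, 7, 1, 3, 4]
```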

2.1.3. Cycle Crossover Operator

The cycle crossover (CX) operator was first proposed by Oliver et al. [ 27 ]. This technique creates offspring in such a way that each bit, together with its position, comes from one of the parents. For example, consider the tours of two parents:

We are free to choose whether the first bit of the offspring comes from the first or the second parent. In our example, the first bit of the offspring must be 1 or 8. Let us choose 1:

Every bit in the offspring must be taken from one of its parents at the same position, so from here on we have no choice: the next bit to be considered must be 8, the bit of the second parent just below the selected bit 1. In the first parent, this bit is at the 8th position; thus

This in turn implies bit 7, the bit of the second parent just below the selected bit 8; in the first parent, 7 is at the 7th position. Thus

Next, this forces us to put 4 at the 4th position:

After this, 1 comes up, which is already in the list; thus we have completed a cycle, and we fill the remaining blank positions with the bits at those positions in the second parent:

Similarly, the second offspring is

However, this technique has a drawback: it sometimes produces offspring identical to the parents, for example, for the following two parents:

After applying the CX technique, the resulting offspring are as follows:

which are exactly the same as their parents.
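A minimal Python sketch of CX. The first pair of parents is an illustrative choice consistent with the walkthrough (first bit 1 or 8, then 8 at position 8, 7 at position 7, and 4 at position 4); the second pair is also illustrative and forms a single cycle, which reproduces the drawback just described. `cx_child` is a hypothetical name.

```python
from typing import List, Sequence


def cx_child(p1: Sequence[int], p2: Sequence[int]) -> List[int]:
    """Cycle crossover (CX): starting at position 0, copy p1's bit, look at the
    bit of p2 just below it, jump to that bit's position in p1, and repeat
    until the cycle closes. All other positions keep p2's bits."""
    pos_in_p1 = {bit: i for i, bit in enumerate(p1)}
    child = list(p2)          # positions outside the cycle come from p2
    in_cycle = [False] * len(p1)
    i = 0
    while not in_cycle[i]:
        in_cycle[i] = True
        child[i] = p1[i]      # positions on the cycle come from p1
        i = pos_in_p1[p2[i]]  # position in p1 of the bit below
    return child


print(cx_child([1, 2, 3, 4, 5, 6, 7, 8], [8, 5, 2, 1, 3, 6, 4, 7]))  # [1, 5, 2, 4, 3, 6, 7, 8]
print(cx_child([8, 5, 2, 1, 3, 6, 4, 7], [1, 2, 3, 4, 5, 6, 7, 8]))  # [8, 2, 3, 1, 5, 6, 4, 7]

# Drawback: when the parents form a single cycle, the child equals a parent.
a = [3, 4, 8, 2, 7, 1, 6, 5]
b = [4, 2, 5, 1, 6, 8, 3, 7]
print(cx_child(a, b) == a, cx_child(b, a) == b)  # True True
```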

3. Proposed Crossover Operators

We propose a new crossover operator that works similarly to CX, so we call it CX2. It generates both offspring from the parents at the same time, using cycle(s) until the last bit. CX2 proceeds in the following steps.

1. Choose two parents for mating.

2. Select the 1st bit of the second parent as the 1st bit of the first offspring.

3. Find the bit selected in Step 2 in the first parent and take the bit at the same position in the second parent; find that bit again in the first parent and, finally, select the bit at the same position in the second parent as the 1st bit of the second offspring.

4. Find the bit selected in Step 3 in the first parent and take the bit at the same position in the second parent as the next bit of the first offspring. (Note: each bit of the first offspring is obtained with one move, and each bit of the second offspring with two moves.)

5. Repeat Steps 3 and 4 until the 1st bit of the first parent appears in the second offspring (completing a cycle); the process may then terminate.

6. If some bits are left, leave out of both parents the bits already placed (the bits of the first parent that appear in the second offspring so far, and vice versa). Repeat Steps 2, 3, and 4 on the remaining bits to complete the process.

Following these steps, we derive two cases for CX2. The first case terminates at Step 5, while the second takes all six steps. We give detailed examples of both cases in the next subsections.

3.1. CX2: Case 1

Consider the two selected parents as mentioned in Step 1:

Using Step 2,

Step 3 starts from 4, the bit selected in Step 2: 4 is found at the second position in the first parent, and the bit at this position in the second parent is 2. Searching again, 2 is at the fourth position in the first parent, and 1 is at the same position in the second parent, so 1 is selected for the second offspring:

Following Step 4, the previous bit was 1; it is located at the 6th position in the first parent, and the bit at this position in the second parent is 8, so

And for the two moves, below 8 is 5 and below 5 is 7, so

Hence similarly,

We see that the last bit of the second offspring is 3, which was the 1st bit of the first parent. Hence, the proposed scheme finishes within one cycle.
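The following Python sketch is our reading of Steps 2 to 5 (Case 1 only); it is not the authors' MATLAB code. One "move" maps a bit to the bit of the second parent at the position where that bit sits in the first parent. The parents are an illustrative pair consistent with every bit quoted in the Case 1 walkthrough (4 then 1, 8 then 7, and a final bit 3). Step 6 is deliberately not implemented: the hypothetical function `cx2_single_cycle` raises an error when the cycle closes before all bits are placed.

```python
from typing import List, Sequence, Tuple


def cx2_single_cycle(p1: Sequence[int], p2: Sequence[int]) -> Tuple[List[int], List[int]]:
    """Steps 2-5 of CX2 for the single-cycle case (Case 1).
    The first offspring gets one move per bit, the second offspring two."""
    pos_in_p1 = {bit: i for i, bit in enumerate(p1)}

    def move(bit: int) -> int:
        # Locate `bit` in p1 and return the bit of p2 at the same position.
        return p2[pos_in_p1[bit]]

    o1: List[int] = [p2[0]]  # Step 2: 1st bit of p2 starts the first offspring
    o2: List[int] = []
    while True:
        o2.append(move(move(o1[-1])))  # Step 3: two moves for the second offspring
        if o2[-1] == p1[0]:            # Step 5: the cycle is closed
            break
        o1.append(move(o2[-1]))        # Step 4: one move for the first offspring
    if len(o1) < len(p1):
        # Case 2: bits are left over and Step 6 would be required.
        raise ValueError("cycle closed early; Step 6 is not implemented in this sketch")
    return o1, o2


child1, child2 = cx2_single_cycle([3, 4, 8, 2, 7, 1, 6, 5], [4, 2, 5, 1, 6, 8, 3, 7])
print(child1)  # [4, 8, 6, 2, 5, 3, 1, 7]
print(child2)  # [1, 7, 4, 8, 6, 2, 5, 3]
```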

3.2. CX2: Case 2

Now using Step 2 of the scheme,

After this, Step 3 requires us to locate bit 2 in the first parent, which is at the 2nd position; 7 is at the same position in the second parent. Searching for bit 7 in the first parent, we find it at the 7th position, and 6 is at the same position in the second parent, so we choose bit 6 for the second offspring:

Continue Steps 4 and 5 as well:

Step 5 is finished because bit 1, which was in the 1st position of the first parent, has come into the second offspring. Before applying Step 6, we match the first offspring's bits with the second parent (and vice versa) and leave out the existing bits, with their positions, in both parents as follows:

Now the remaining positions of the parents and the “×” positions of the offspring are treated as the 1st, 2nd, 3rd positions, and so forth, so we can complete Step 6 as well:

This completes the scheme.

To apply this crossover operator, we implemented genetic algorithms in MATLAB and give the pseudo-code in Algorithm 1 .

Pseudo-code of proposed algorithm CX2.

4. Computational Experiments and Discussion

We use a genetic algorithm in MATLAB to compare the proposed crossover operator with some traditional path-representation crossover operators. Our first experiment has 7 cities, with the transition distances between cities given in Table 2 . To solve this problem using GAs, the genetic parameters are set as follows: population size $M = 30$; maximum generation $G = 10$; crossover probability $P_c = 0.8$; mutation probability $P_m = 0.1$. In this experiment, the optimal path and optimal value are 6 → 1 → 5 → 3 → 4 → 2 → 7 and 159, respectively.

Transition distance between cities.

Table 3 summarizes the results over 30 runs and shows that CX2 performs much better than the two existing crossover operators.

Comparison of three crossover operators (30 runs).

4.1. Benchmark Problems

We compare the proposed crossover operator CX2 with two traditional crossover operators, PMX and OX, on twelve benchmark instances taken from TSPLIB [ 28 ]. Of these twelve problems, ftv33, ftv38, ft53, kro124p, ftv170, rbg323, rbg358, rbg403, and rbg443 are asymmetric, and gr21, fri26, and dantzig42 are symmetric TSPs. Each instance is run 30 times (30 runs). The common GA parameters, that is, population size, maximum generation, crossover probability, and mutation probability, are 150, 500, 0.80, and 0.10, respectively, for instances with fewer than 100 cities; the results are given in Table 4 . For instances with more than 100 cities, only two parameters change: the population size and maximum generation are 200 and 1000, respectively; the results are given in Table 5 . Both tables compare the proposed crossover operator with the two existing operators in terms of best, worst, and average results. These results show that the solution quality of both the proposed and the existing crossover operators is insensitive to the number of runs but sensitive to the number of generations, especially in Table 5 .

Comparison results among three crossover operators.

Generation = 500.

Generation = 1000.

In Table 4 , CX2 performs on par with the other two operators on gr21 and ftv33 on average. The proposed operator gives the best average results for fri26, ftv38, dantzig42, and ft53. For dantzig42, CX2 reaches the exact optimum (best known value) sixteen times out of thirty, whereas PMX and OX do not reach the exact value in any run; we also found that the best tour generated with CX2 is 75 and 86 percent shorter than the best tours of OX and PMX, respectively. For ft53, CX2's best tour is 22 and 26 percent shorter than the best tours of PMX and OX, respectively. A more interesting aspect of CX2 is that its worst values for dantzig42 and ft53 are much better than the other operators' best values.

In Table 5 , the results show that all crossover operators follow a similar pattern, with little variation among best, worst, and average values. PMX and OX perform slightly better than CX2 on rbg323, and for all other instances except ftv170, the performance of CX2 falls between that of the other two operators. For ftv170, CX2 performs much better than the other two, with best-tour values 108 and 137 percent shorter than those of PMX and OX, respectively.

Finally, the overall results show that the proposed approach CX2 performs better than the existing PMX and OX crossover operators on some aspects and similarly on others.

5. Conclusion

Various crossover operators have been presented for the TSP with different applications using GAs. This article mainly focuses on PMX and OX, along with the proposed crossover operator CX2. First, we applied these three operators to a manual experiment and found that CX2 performs better than the PMX and OX crossovers. Then, to assess global performance, we took twelve benchmark instances from TSPLIB (the traveling salesman problem library) and applied all three crossover operators to them. We observe that the proposed operator improves results by over 20, 70, and 100 percent for the ft53, dantzig42, and ftv170 problems, respectively, compared to the other two operators. We see that, for a large number of instances, the proposed operator CX2 performs much better than the PMX and OX operators. We suggest that CX2 may be a good candidate for obtaining accurate results or for fast convergence. Moreover, researchers can use it with confidence for comparisons.

Acknowledgments

The authors extend their appreciation to the Deanship of Scientific Research at King Saud University for funding this work through Research Group no. RG-1437-027.

Conflicts of Interest

The authors declare that there are no conflicts of interest regarding the publication of this paper.


Electrical and Electronic Engineering

p-ISSN: 2162-9455 e-ISSN: 2162-8459

2020; 10(2): 27-31

doi:10.5923/j.eee.20201002.02

Received: Nov. 28, 2020; Accepted: Dec. 20, 2020; Published: Dec. 28, 2020

Solving Traveling Salesman Problem by Using Genetic Algorithm

Ripon Sorma 1 , Md. Abdul Wadud 1 , S. M. Rezaul Karim 1 , F. A. Sabbir Ahamed 2

1 Department of EEE, International University of Business Agriculture and Technology, Uttara Dhaka, Bangladesh

2 Department of Physics, International University of Business Agriculture and Technology, Uttara Dhaka, Bangladesh

Copyright © 2020 The Author(s). Published by Scientific & Academic Publishing.

This analysis investigated the application of a genetic algorithm capable of solving the traveling salesman problem. Genetic algorithms are able to generate successively shorter feasible tours by using information accumulated in the form of a pheromone trail deposited on the edges of the traveling salesman problem graph. Computer simulations demonstrate that the genetic algorithm is capable of generating better solutions to both symmetric and asymmetric instances of the traveling salesman problem. The method is an example, like simulated annealing and evolutionary computation, of the productive use of a natural metaphor to design an optimization algorithm. A study of the genetic algorithm explains its performance and shows that it can be seen as a parallel variation of tabu search, with implicit memory. The genetic algorithm is the best in computation time but the least efficient in memory consumption. The genetic algorithm differs from the nearest neighbor heuristic in that it considers the closest route, whereas the nearest neighbor heuristic considers the closest path. The genetic algorithm needs a system with a parallel architecture for its optimal implementation. The activities of each genetic algorithm should be run as a separate OS process.

Keywords: Genetic Algorithm, Fuzzy system, Machine learning, Applying Genetic Algorithm, Mat Lab work

Cite this paper: Ripon Sorma, Md. Abdul Wadud, S. M. Rezaul Karim, F. A. Sabbir Ahamed, Solving Traveling Salesman Problem by Using Genetic Algorithm, Electrical and Electronic Engineering , Vol. 10 No. 2, 2020, pp. 27-31. doi: 10.5923/j.eee.20201002.02.

Article Outline

1. Introduction, 2. Genetic Algorithm, 2.1. Control Parameters, 2.2. Fuzzy System, 2.3. Neural Network, 2.4. Machine Learning, 3. Literature Review, 4. Research Methodology, 5. Result and Discussion, 6. Conclusions.

First International Conference on Real Time Intelligent Systems

RTIS 2023: Advances in Real-Time Intelligent Systems pp 84–91 Cite as

Optimization of Logistics Distribution Route Based on Improved Genetic Algorithm

- Juan Li

- Conference paper

- First Online: 24 March 2024

Part of the book series: Lecture Notes in Networks and Systems (LNNS, volume 950)

Through research, we found that using TSP (Traveling Salesman Problem) algorithms to optimize logistics distribution routes can significantly reduce transportation costs and thereby achieve better transportation results. We therefore designed a new genetic algorithm that implements different transportation strategies according to different needs, such as sequences, minimum cost trees, random point lengths, etc. After in-depth simulation and analysis, we found that the new genetic algorithm performs better at searching and predicting in complex environments and converges much faster. It can therefore serve as an efficient technique for optimizing logistics distribution routes.

- Optimization of logistics distribution routes

- Genetic algorithm

- Minimizing costs


Author information

Authors and affiliations.

Henan Institute of Economics and Trade, Zhengzhou, 450046, Henan, China


Corresponding author

Correspondence to Juan Li .

Editor information

Editors and affiliations.

Digital Information Research Labs, Chennai, Tamil Nadu, India

Pit Pichappan

Jan Evangelista Purkyne University, Ústí nad Labem, Czech Republic

Ricardo Rodriguez Jorge

National Taiwan Ocean University, Keelung, Taiwan

Yao-Liang Chung


Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper.

Li, J. (2024). Optimization of Logistics Distribution Route Based on Improved Genetic Algorithm. In: Pichappan, P., Rodriguez Jorge, R., Chung, YL. (eds) Advances in Real-Time Intelligent Systems. RTIS 2023. Lecture Notes in Networks and Systems, vol 950. Springer, Cham. https://doi.org/10.1007/978-3-031-55848-1_10


Print ISBN : 978-3-031-55847-4

Online ISBN : 978-3-031-55848-1


Learn how to use genetic algorithms to solve the traveling salesman problem, which is finding the shortest route to visit all cities once. See the algorithm, pseudo-code, implementation and output in C++ and Python.

After something like 30 iterations, it seems like the algorithm has converged to the minimum, sitting at around 86.25. Apparently, the best way to travel the cities is to go in the order [4, 1, 3, 2, 0]. But this was more of a contrived example. We want to see if this algorithm can scale.

Learn how to use a genetic algorithm (GA) to solve the traveling salesman problem (TSP), a classic optimization problem. Follow the steps of creating, selecting, breeding, mutating and repeating the population until the optimal solution is found.

Chen and Chen [17] propose a genetic algorithm in which a two-part chromosome encoding strategy is employed in mutation and recombination operators, giving the solution of the MTSP. This two-part chromosome encoding takes care of the ordering of cities and the number of cities to be visited by each salesman.

The so-called traveling salesman problem is a very well-known challenge. The task is to find the shortest overall route between many destinations: a saleswoman visits several stores in succession and returns to the starting point, covering the shortest overall distance. ... We are going to implement a genetic algorithm to solve this problem ...

A new crossover technique to improve genetic algorithm and its application to TSP. 2019 International Conference on Electrical, Computer and Communication Engineering (ECCE), IEEE. Alhanjouri, M. A. and B. Alfarra (2013). "Ant colony versus genetic algorithm based on travelling salesman problem." Int. J. Comput. Tech.

This paper presents a study of using genetic algorithm (GA) to solve the traveling salesman problem (TSP), a classic optimization problem. It compares the performance of different parameters in GA and proposes some improvements to enhance the algorithm.

This paper addresses an application of genetic algorithms (GA) to the travelling salesman problem (TSP). It compares the results of two variants of two-point (1 order) gene crossover used to produce new offspring, a static and a dynamic approach, by varying three factors: the number of cities, the number of generations, and the population size ...
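One common reading of a two-point order crossover is Davis's order crossover (OX1); we are assuming that is what the paper means by "(1 order)", and its static and dynamic variants are not reproduced. The sketch takes the two cut points as arguments so the result is deterministic; in a GA they would be drawn at random for each pair of parents. `order_crossover` is a hypothetical name.

```python
def order_crossover(p1, p2, a, b):
    """OX1: keep p1[a:b] in place, then fill the remaining slots with p2's cities
    in the order they appear in p2, starting just after the second cut and wrapping."""
    n = len(p1)
    child = [None] * n
    child[a:b] = p1[a:b]
    kept = set(p1[a:b])
    # Read p2 from position b onward (wrapping), skipping cities already copied from p1.
    fill = [p2[(b + i) % n] for i in range(n) if p2[(b + i) % n] not in kept]
    # Empty slots, also visited from position b onward with wrap-around.
    positions = [(b + i) % n for i in range(n - (b - a))]
    for pos, city in zip(positions, fill):
        child[pos] = city
    return child

p1 = [1, 2, 3, 4, 5, 6, 7, 8, 9]
p2 = [9, 3, 7, 8, 2, 6, 5, 1, 4]
print(order_crossover(p1, p2, 3, 7))  # [3, 8, 2, 4, 5, 6, 7, 1, 9]
```

The child inherits a contiguous block of tour order from one parent and the relative order of the remaining cities from the other, and it is always a valid permutation.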

The traveling salesman problem (TSP) is the problem of determining the shortest route for a salesman who must visit all cities. Although instances with a small number of cities are easy to solve, the TSP is NP-hard, so no polynomial-time algorithm for it is known, and exact methods become impractical as the number of cities increases. Hence, this project implements a genetic algorithm (GA) to ...
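To see why exact solving stops being practical, here is a brute-force baseline (our own illustration, not part of the project described). Even with city 0 fixed as the start it has to check (n-1)! orderings, which is fine for checking a GA on a handful of cities and hopeless beyond that. `dist` is assumed to be a square matrix of pairwise distances.

```python
import itertools
import math

def brute_force_tsp(dist):
    """Exact TSP by enumerating every tour that starts at city 0.

    Examines (n-1)! orderings, so it is only practical for very small n."""
    n = len(dist)
    best_order, best_len = None, math.inf
    for rest in itertools.permutations(range(1, n)):
        order = (0,) + rest
        # Closed tour: i == 0 pairs the last city with city 0.
        length = sum(dist[order[i - 1]][order[i]] for i in range(n))
        if length < best_len:
            best_order, best_len = order, length
    return list(best_order), best_len

# Number of orderings brute force would examine for a few instance sizes.
for n in (5, 10, 15, 20):
    print(n, math.factorial(n - 1))
```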

This paper presents a genetic algorithm (GA) with edge swapping (ES) to solve the TSP. The GA uses local and global versions of ES to generate and improve solutions, and outperforms state-of-the-art Lin-Kernighan (LK)-based algorithms on benchmark instances.

A strategy for finding nearly optimal solutions to problems of this type is presented, using a new crossover technique for genetic algorithms that generates high-quality solutions to the TSP. The paper includes a flexible method for solving the travelling salesman problem with a genetic algorithm, with the TSP used as the problem domain. The TSP has long been known to be NP-complete (in its decision form) and standard ...

The Genetic Algorithm and The Travelling Salesman Problem (TSP)

So far all of my blog posts have revolved around widgets, Flutter, open source, and software development. But this time I am taking a…

The Travelling Salesman Problem (TSP) asks for the shortest route that visits a collection of cities and returns to the starting point. Because of its combinatorial nature, and because the number of possible routes grows rapidly as cities are added, it is a difficult task. The Genetic Algorithm (GA) is a heuristic inspired by genetics that approaches the TSP by emulating natural selection.
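To put a number on that growth: for a symmetric TSP (the distance from i to j equals the distance from j to i), fixing the start city removes the n equivalent rotations of each tour and dividing by 2 removes the reversed direction, so the number of distinct tours T(n) for n ≥ 3 cities is

$$
T(n) = \frac{(n-1)!}{2}, \qquad T(5) = 12, \quad T(10) = 181\,440, \quad T(20) = \frac{19!}{2} \approx 6.1 \times 10^{16}.
$$

Factorial growth is even faster than exponential, which is why heuristics such as GAs are used instead of enumerating every route.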

Implementing a Genetic Algorithm

To showcase what we can do with genetic algorithms, let's solve the Traveling Salesman Problem (TSP) in Java. TSP formulation: a traveling salesman needs to go through n cities to sell his merchandise. There's a road between every two cities, but some roads are longer and more dangerous than others.

3. Solution approach

In this section, we describe the proposed genetic algorithm for the travelling salesman problem in detail. The motivation for using genetic algorithms (GAs) is that they are simple yet powerful optimization techniques for NP-hard problems. GAs start with a population of feasible solutions to an optimization problem and iteratively apply different operators to ...

Abstract. Genetic algorithms are evolutionary optimization techniques based on the idea of survival of the fittest. These methods do not guarantee optimal solutions; however, they usually give good approximations in reasonable time. Genetic algorithms are useful for NP-hard problems, especially the traveling salesman problem.

A parallel ensemble of Genetic Algorithms for the Traveling Salesman Problem (TSP) is proposed. Different TSP solvers perform efficiently on different instance types. ... and Bryce D. Eldridge. 2005. A Study of Five Parallel Approaches to a Genetic Algorithm for the Traveling Salesman Problem. Intell. Autom. Soft Comput. 11 (2005), 217--234 ...

This paper is the result of a literature study carried out by the authors. It is a review of the different attempts made to solve the Travelling Salesman Problem with Genetic Algorithms. We present crossover and mutation operators developed to tackle the Travelling Salesman Problem with Genetic Algorithms using different representations, such as binary representation, path representation ...
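In the path representation a tour is stored directly as a list of city indices, and two standard mutation operators for it are inversion and insertion. The snippet does not say which operators the review covers, so treat these as representative examples; both always return a valid permutation, which is why they suit this encoding. The function names are hypothetical.

```python
import random

def reverse_segment(order, i, j):
    # Reverse order[i:j] (i inclusive, j exclusive) and return a new list.
    return order[:i] + order[i:j][::-1] + order[j:]

def inversion_mutation(order, rng):
    # Inversion: reverse the segment between two random cut points.
    i, j = sorted(rng.sample(range(len(order) + 1), 2))
    return reverse_segment(order, i, j)

def insertion_mutation(order, rng):
    # Insertion: remove one random city and reinsert it at another random position.
    order = order[:]
    city = order.pop(rng.randrange(len(order)))
    order.insert(rng.randrange(len(order) + 1), city)
    return order

print(reverse_segment([0, 1, 2, 3, 4, 5], 1, 4))  # [0, 3, 2, 1, 4, 5]
```

Inversion changes only two edges of the tour, so it tends to be a gentler change than swapping two arbitrary cities.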

This paper is a survey of genetic algorithms for the traveling salesman problem. Genetic algorithms are randomized search techniques that simulate some of the processes observed in natural evolution. In this paper, a simple genetic algorithm is introduced, and various extensions are presented to solve the traveling salesman problem. Computational results are also reported for both random and ...

Genetic algorithms are a class of algorithms that take inspiration from genetics. More specifically, "genes" evolve over several iterations through both crossover (reproduction) and mutation. This will get a bit incest-y, but bear with me. In the simplest case, we start with two genes; these genes interact (crossover), where a new gene is ...

The genetic algorithm is employed to improve the solution. Crossover is an essential stage of the genetic algorithm. Naveen (2012) surveyed the traveling salesman problem using various genetic algorithm operators. The proposed work solves the traveling salesman problem using ...

Nov 15, 2017. This week we were challenged to solve the Travelling Salesman Problem using a genetic algorithm. The exact application involved finding the shortest distance to fly between eight ...

An improved hybrid genetic algorithm is presented to solve the two-dimensional Euclidean traveling salesman problem (TSP), in which the crossover operator is enhanced with a local search to improve convergence and the chance of escaping from local optima.
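The snippet does not say which local search the hybrid algorithm uses. A common choice in hybrid (memetic) GAs for the TSP is 2-opt, sketched below as an assumed stand-in: it keeps reversing a segment of the tour whenever removing two edges and reconnecting the endpoints shortens the tour. In a hybrid GA it would typically be applied to each offspring after crossover; the helper names are hypothetical.

```python
import math

def euclidean_matrix(coords):
    # Pairwise straight-line distances between all cities.
    return [[math.dist(p, q) for q in coords] for p in coords]

def tour_length(order, dist):
    # Closed tour: when i == 0, order[i - 1] is the last city.
    return sum(dist[order[i - 1]][order[i]] for i in range(len(order)))

def two_opt(order, dist):
    """Apply improving 2-opt moves until none is left (a local optimum)."""
    order = order[:]
    n = len(order)
    improved = True
    while improved:
        improved = False
        for i in range(1, n - 1):
            for j in range(i + 1, n):
                a, b = order[i - 1], order[i]
                c, d = order[j], order[(j + 1) % n]
                # Swapping edges (a,b),(c,d) for (a,c),(b,d) reverses order[i..j].
                delta = dist[a][c] + dist[b][d] - dist[a][b] - dist[c][d]
                if delta < -1e-12:
                    order[i:j + 1] = reversed(order[i:j + 1])
                    improved = True
    return order

square = [(0, 0), (0, 1), (1, 1), (1, 0)]
dist = euclidean_matrix(square)
print(two_opt([0, 2, 1, 3], dist))  # [0, 1, 2, 3]: the crossing edges are removed
```

Each accepted move strictly shortens the tour, so the loop always terminates, but the result is only a local optimum.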

Genetic algorithm to the bi-objective multiple travelling salesman problem

Through research, we found that modeling logistics distribution routes as a TSP (Traveling Salesman Problem) and optimizing them can significantly reduce transportation costs, thereby achieving better transportation results. ... Zuo, M., et al.: Optimization of green fresh food logistics with heterogeneous fleet vehicle route problem by improved ...